Chapter 11 SEM: Model Respecifications

In the prior two lessons we engaged in the first of two steps structural equation modeling. We first established the measurement model. Next we specified and evaluated a structural model, which included testing alternative models. In this lesson we consider how to use model statistics to consider respecifying the model through building and trimming. Further, we learn how to compare these models to determine if the fit is improved (i.e., a goal when we free parameters to be in relation with each other), stayed the same (i.e., a goal when we trim paths, thereby fixing their relation to 0.00), or deteriorated.

11.2 Respecifying Structural Models

There is general consensus ((Byrne, 2016b; Chou & Bentler, 2002)) that of the three types of approaches to model building (i.e., strictly confirmatory, alternative models, model generating (Joreskog, 1993)), that model generating is the most commonly used. This means that researchers will respecify the model by either adding paths or covariances, trimming paths or covariances, or both. At the risk of oversimplification, modelers generally take a model building or model trimming approaches. But of course – there is complexity and nuance that we should unravel.

11.2.1 Model Building

In model building, the researcher starts with a more parsimonious model and proceeds to a more general model. Chou and Bentler (2002) termed this forward searching. In general, this requires specifying a model that is overidentified (i.e., it has positive degrees of freedom). If the model does not fit the data well, we will request modification indices. These values are presented in the metric of the chi-square test and will tell us “by how much the chi-square value will decrease” if the two elements listed in each (of a long list) of the results is freed to relate (i.e., by a path or covariance). In a one-degree chi-square test, statistically significant change occurs when the modification index is greater than 3.841.

Freeing parameters is an activity that should be done with caution. Freeing a single parameter by adding a directional path or bidirectional covariance will reduce the degrees of freedom in the structural model by 1. Correspondingly, this respecified model will be the nesting model and will (in all likelihood) have better fit. The chi-square value will decrease by the amount indicated in the modification index and, presuming the parameter was freed because it would result in a statistically significant decrease in the unit of the chi-square, the respecified model with the additional path(s) may look as if it is a superior model. When fit is increasing incrementally, it can be tempting to keep freeing parameters until degrees of freedom are zero and the model is just identified. Doing so, risks overparameterization. This means that the model is overfit. Although it appears to fit the sample data well, it may fail to generalize to a different set of data that represents the population of interest. That is, the model is sample-specific which makes it less useful in making predictions or generalizing more broadly.

Thus, there are are cautions about model the forward searching approach:

- Any paths that are added (i.e., any parameters that are freed) should have strong theoretical justification.

- Any paths that are added should be statistically sensible. The procedure we use to produce modification indices, will produce them for every unsaturated paths. Sensible relations would generally include

- directional paths between variables in the structural model, and

- error covariances in the measurement model (for more on this see the lesson, CFA: Hierarchical and Nested Models

- Avoid adding paths that are not sensible. An example would be a path between an indicator in the measurement model and a structural variable.

- but do think about why the modification index might be suggesting such; perhaps it is a clue to an error.

11.2.2 Model Trimming

In model trimming, the researcher starts with a more general model or saturated (i.e., zero degrees of freedom in the structural model) and, on the basis of statistical criteria, trims non-significant (i.e., and low regression weight) paths from the model. Chou and Bentler (2002) termed this backward searching. The general process is to identify and delete non-essential paths one at a time and evaluate the “hit to the fit.” Given that the more saturated model is the nesting model, one can expect that trimmed, nested model will have fit that is incrementally lower. The hope is that the difference does not bring the global fit indices into thresholds that indicate poor fit and that, when compared statistically, the nested model is not statistically significantly different than the nesting model.

Several prominent researchers appear to favor the model trimming/backward searching approach. Chou and Bentler (2002) acknowledged that while the forward searching approach is commonly utilized its ability to find the true model has been questioned. Their Monte Carlo based test study demonstrated that a backward search approach that imposed constraints with the z and Wald(W) tests identified the true model with greater than 60% accuracy. Kenny’s (Kenny, 2012) SEM website and workshops have also advocated for a model trimming approach that generally starts with a just-identified (i.e., saturated, df = 0) model and trims paths, one-at-a-time, to see if the adequately fitting result resembled the theoretical model they identified, apriorily.

11.2.3 Restating Approaches to Respecification

The terms, concepts, and logic related to nesting, saturation, and respecification can be confusing. Hopefully, this table can help clarify their meaning and role.

| Approach to Respecification | Hypothesized (Original model) is | Comparison model(s) is | Typical Hope of \(\Delta\chi^2\) test |

|---|---|---|---|

| Model building, forward search | overidentified, df > 0 | more paths, fewer df | significant indicating additional path improved model fit |

| Model trimming, backward search | just-identified, df = 0 | fewer paths, more df | non-significant indicating deleted path did not lead to poorer model fit |

In today’s lesson, we will start with a rather complex, over-identified model. Following the example Byrne’s (Byrne, 2016a) chapter, we will first use modification indices to see about freeing parameters (i.e., adding paths) and then inspect nonsignificant paths for possible constraint (e.g., deletion).

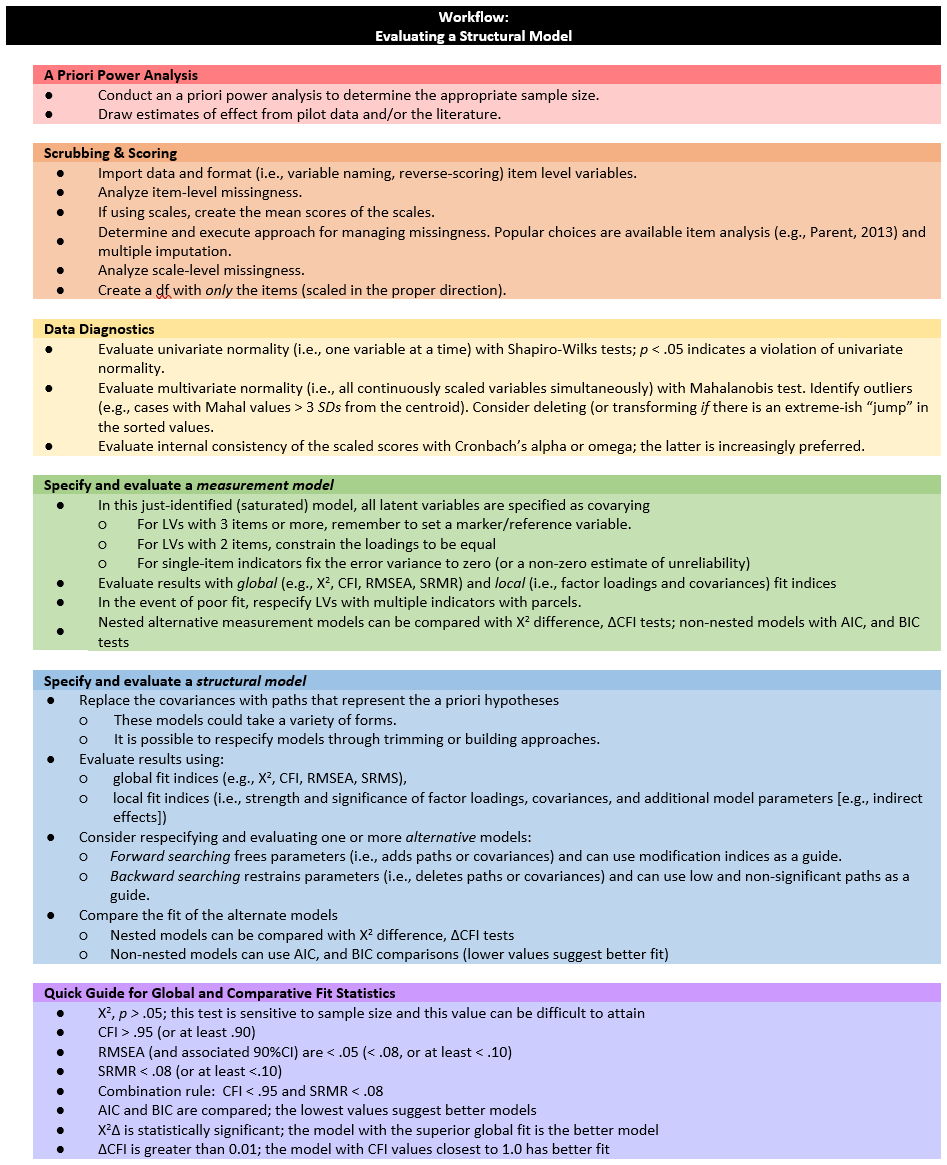

11.3 Workflow for Evaluating a Structural Model

Below is the overall workflow for evaluating a structural model. Today our focus is the specification and evaluation of the structural model.

Evaluating a structural model involves the following steps:

Evaluating a structural model involves the following steps:

- A Priori Power Analysis

- Conduct an a priori power analysis to determine the appropriate sample size. _ Draw estimates of effect from pilot data and/or the literature.

- Scrubbing & Scoring

- Import data and format (i.e., variable naming, reverse-scoring) item level variables.

- Analyze item-level missingness.

- If using scales, create the mean scores of the scales.

- Determine and execute approach for managing missingness. Popular choices are available item analysis (e.g., Parent, 2013) and multiple imputation.

- Analyze scale-level missingness.

- Create a df with only the items (scaled in the proper direction).

- Data Diagnostics

- Evaluate univariate normality (i.e., one variable at a time) with Shapiro-Wilks tests; p < .05 indicates a violation of univariate normality.

- Evaluate multivariate normality (i.e., all continuously scaled variables simultaneously) with Mahalanobis test. Identify outliers (e.g., cases with Mahal values > 3 SDs from the centroid). Consider deleting (or transforming if there is an extreme-ish “jump” in the sorted values.

- Evaluate internal consistency of the scaled scores with Cronbach’s alpha or omega; the latter is increasingly preferred. Specify and evaluate a measurement model

- In this just-identified (saturated) model, all latent variables are specified as covarying.

- For LVs with 3 items or more, remember to set a marker/reference variable,

- For LVs with 2 items, constrain the loadings to be equal,

- For single-item indicators fix the error variance to zero (or a non-zero estimate of unreliability).

- Evaluate results with global (e.g., X2, CFI, RMSEA, SRMR) and local (i.e., factor loadings and covariances) fit indices.

- In the event of poor fit, respecify LVs with multiple indicators with parcels.

- Nested alternative measurement models can be compared with Χ2 difference, ΔCFI tests; non-nested models with AIC, and BIC tests .

- Specify and evaluate a structural model.

- Replace the covariances with paths that represent the a priori hypotheses.

- These models could take a variety of forms.

- It is possible to respecify models through trimming or building approaches.

- Evaluate results using

- global fit indices (e.g., X2, CFI, RMSEA, SRMS),

- local fit indices (i.e., strength and significance of factor loadings, covariances, and additional model parameters [e.g., indirect effects]).

- Consider respecifying and evaluating one or more alternative models.

- Forward searching involves freeing parameters (adding paths or covariances) and can use modification indices as a guide.

- Backward searching involves restraining parameters (deleting paths or covariances) and can use low and non-significant paths as a guide.

- Compare the fit of the alternate models.

- Nested models can be compared with Χ2 difference and ΔCFI tests.

- Non-nested models can be compared with AIC and BIC (lower values suggest better fit).

- Replace the covariances with paths that represent the a priori hypotheses.

- Quick Guide for Global and Comparative Fit Statistics.

- \(\chi^2\), p < .05; this test is sensitive to sample size and this value can be difficult to attain

- CFI > .95 (or at least .90)

- RMSEA (and associated 90%CI) are < .05 ( < .08, or at least < .10)

- SRMR < .08 (or at least <.10)

- Combination rule: CFI < .95 and SRMR < .08

- AIC and BIC are compared; the lowest values suggest better models

- \(\chi^2\Delta\) is statistically significant; the model with the superior fit is the better model

- \(\delta CFI\) is greater than 0.01; the model with CFI values closest to 1.0 has better fit

11.4 Research Vignette

Once again the research vignette comes from the Lewis, Williams, Peppers, and Gadson’s (2017) study titled, “Applying Intersectionality to Explore the Relations Between Gendered Racism and Health Among Black Women.” The study was published in the Journal of Counseling Psychology. Participants were 231 Black women who completed an online survey.

Variables used in the study included:

GRMS: Gendered Racial Microaggressions Scale (J. A. Lewis & Neville, 2015) is a 26-item scale that assesses the frequency of nonverbal, verbal, and behavioral negative racial and gender slights experienced by Black women. Scaling is along six points ranging from 0 (never) to 5 (once a week or more). Higher scores indicate a greater frequency of gendered racial microaggressions. An example item is, “Someone has made a sexually inappropriate comment about my butt, hips, or thighs.”

MntlHlth and PhysHlth: Short Form Health Survey - Version 2 (Ware et al., 1995) is a 12-item scale used to report self-reported mental (six items) and physical health (six items). Higher scores indicate higher mental health (e.g., little or no psychological ldistress) and physical health (e.g., little or no reported symptoms in physical functioning). An example of an item assessing mental health was, “How much of the time during the last 4 weeks have you felt calm and peaceful?”; an example of a physical health item was, “During the past 4 weeks, how much did pain interfere with your normal work?”

Sprtlty, SocSup, Engmgt, and DisEngmt are four subscales from the Brief Coping with Problems Experienced Inventory (Carver, 1997). The 28 items on this scale are presented on a 4-point scale ranging from 1 (I usually do not do this at all) to 4(I usually do this a lot). Higher scores indicate a respondents’ tendency to engage in a particular strategy. Instructions were modified to ask how the female participants responded to recent experiences of racism and sexism as Black women. The four subscales included spirituality (religion, acceptance, planning), interconnectedness/social support (vent emotions, emotional support,instrumental social support), problem-oriented/engagement coping (active coping, humor, positive reinterpretation/positive reframing), and disengagement coping (behavioral disengagement, substance abuse, denial, self-blame, self-distraction).

GRIcntlty: The Multidimensional Inventory of Black Identity Centrality subscale (Sellers et al., n.d.) was modified to measure the intersection of racial and gender identity centrality. The scale included 10 items scaled from 1 (strongly disagree) to 7 (strongly agree). An example item was, “Being a Black woman is important to my self-image.” Higher scores indicated higher levels of gendered racial identity centrality.

Today we will use the simulated data to evaluate a model that was suggested by a figure in the journal (one IV, four mediators, two dependent variables) but was not tested as a single structural model. Rather, the authors ran a series of four simple mediations per dependent variable for a total of eight separate analyses. The authors do not elaborate on their rationale for this appraoch. My guess is that they were limited in design by their use of ordinary least squares with the PROCESS macro in SPSS. Additionally, they may have been concerned about power when they considered a more complicated, latent variable, design.

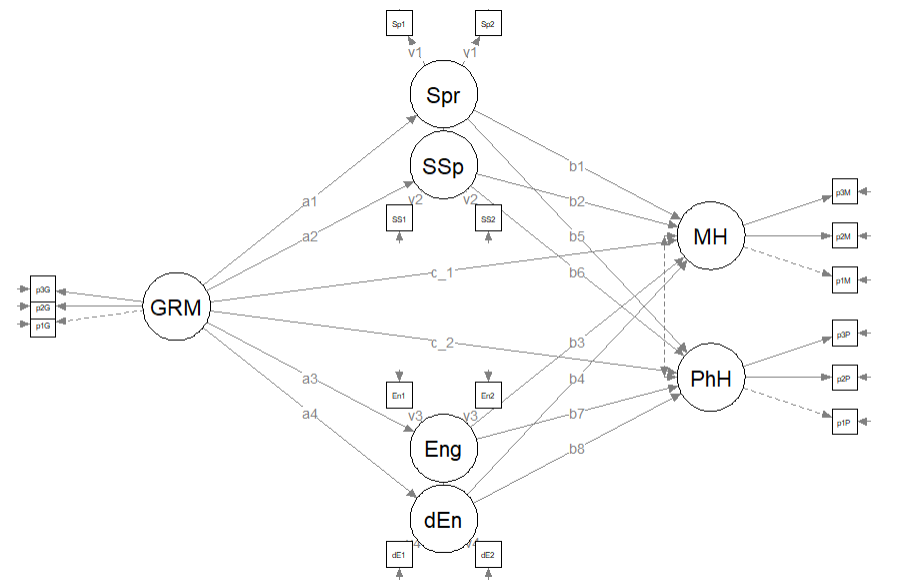

Specifically, we will specify a parallel mediation model where two dependent variables (i.e., mental health, physical health) are predicted directly from gendered racial microaggressions and indirectly through four coping strategies (i.e., spirituality, social support, engagement, disengagement).

11.4.1 Simulating the data from the journal article

The lavaan::simulateData function was used. If you have taken psychometrics, you may recognize the code as one that creates latent variables form item-level data. In trying to be as authentic as possible, we retrieved factor loadings from psychometrically oriented articles that evaluated the measures (Nadal, 2011; Veit & Ware, 1983). For all others we specified a factor loading of 0.80. We then approximated the measurement model by specifying the correlations between the latent variable. We sourced these from the correlation matrix from the research vignette (J. A. Lewis et al., 2017). The process created data with multiple decimals and values that exceeded the boundaries of the variables. For example, in all scales there were negative values. Therefore, the final element of the simulation was a linear transformation that rescaled the variables back to the range described in the journal article and rounding the values to integer (i.e., with no decimal places).

#Entering the intercorrelations, means, and standard deviations from the journal article

Lewis_generating_model <- '

#measurement model

GRMS =~ .69*Ob1 + .69*Ob2 + .60*Ob3 + .59*Ob4 + .55*Ob5 + .55*Ob6 + .54*Ob7 + .50*Ob8 + .41*Ob9 + .41*Ob10 + .93*Ma1 + .81*Ma2 + .69*Ma3 + .67*Ma4 + .61*Ma5 + .58*Ma6 + .54*Ma7 + .59*St1 + .55*St2 + .54*St3 + .54*St4 + .51*St5 + .70*An1 + .69*An2 + .68*An3

MntlHlth =~ .8*MH1 + .8*MH2 + .8*MH3 + .8*MH4 + .8*MH5 + .8*MH6

PhysHlth =~ .8*PhH1 + .8*PhH2 + .8*PhH3 + .8*PhH4 + .8*PhH5 + .8*PhH6

Spirituality =~ .8*Spirit1 + .8*Spirit2

SocSupport =~ .8*SocS1 + .8*SocS2

Engagement =~ .8*Eng1 + .8*Eng2

Disengagement =~ .8*dEng1 + .8*dEng2

GRIC =~ .8*Cntrlty1 + .8*Cntrlty2 + .8*Cntrlty3 + .8*Cntrlty4 + .8*Cntrlty5 + .8*Cntrlty6 + .8*Cntrlty7 + .8*Cntrlty8 + .8*Cntrlty9 + .8*Cntrlty10

#Means

GRMS ~ 1.99*1

Spirituality ~2.82*1

SocSupport ~ 2.48*1

Engagement ~ 2.32*1

Disengagement ~ 1.75*1

GRIC ~ 5.71*1

MntlHlth ~3.56*1 #Lewis et al used sums instead of means, I recast as means to facilitate simulation

PhysHlth ~ 3.51*1 #Lewis et al used sums instead of means, I recast as means to facilitate simulation

#Correlations

GRMS ~ 0.20*Spirituality

GRMS ~ 0.28*SocSupport

GRMS ~ 0.30*Engagement

GRMS ~ 0.41*Disengagement

GRMS ~ 0.19*GRIC

GRMS ~ -0.32*MntlHlth

GRMS ~ -0.18*PhysHlth

Spirituality ~ 0.49*SocSupport

Spirituality ~ 0.57*Engagement

Spirituality ~ 0.22*Disengagement

Spirituality ~ 0.12*GRIC

Spirituality ~ -0.06*MntlHlth

Spirituality ~ -0.13*PhysHlth

SocSupport ~ 0.46*Engagement

SocSupport ~ 0.26*Disengagement

SocSupport ~ 0.38*GRIC

SocSupport ~ -0.18*MntlHlth

SocSupport ~ -0.08*PhysHlth

Engagement ~ 0.37*Disengagement

Engagement ~ 0.08*GRIC

Engagement ~ -0.14*MntlHlth

Engagement ~ -0.06*PhysHlth

Disengagement ~ 0.05*GRIC

Disengagement ~ -0.54*MntlHlth

Disengagement ~ -0.28*PhysHlth

GRIC ~ -0.10*MntlHlth

GRIC ~ 0.14*PhysHlth

MntlHlth ~ 0.47*PhysHlth

'

set.seed(230925)

dfLewis <- lavaan::simulateData(model = Lewis_generating_model,

model.type = "sem",

meanstructure = T,

sample.nobs=231,

standardized=FALSE)

#used to retrieve column indices used in the rescaling script below

#col_index <- as.data.frame(colnames(dfLewis))

for(i in 1:ncol(dfLewis)){ # for loop to go through each column of the dataframe

if(i >= 1 & i <= 25){ # apply only to GRMS variables

dfLewis[,i] <- scales::rescale(dfLewis[,i], c(0, 5))

}

if(i >= 26 & i <= 37){ # apply only to mental and physical health variables

dfLewis[,i] <- scales::rescale(dfLewis[,i], c(0, 6))

}

if(i >= 38 & i <= 45){ # apply only to coping variables

dfLewis[,i] <- scales::rescale(dfLewis[,i], c(1, 4))

}

if(i >= 46 & i <= 55){ # apply only to GRIC variables

dfLewis[,i] <- scales::rescale(dfLewis[,i], c(1, 7))

}

}

#rounding to integers so that the data resembles that which was collected

library(tidyverse)

dfLewis <- dfLewis %>% round(0)

#quick check of my work

#psych::describe(dfLewis) The script below allows you to store the simulated data as a file on your computer. This is optional – the entire lesson can be worked with the simulated data.

If you prefer the .rds format, use this script (remove the hashtags). The .rds format has the advantage of preserving any formatting of variables. A disadvantage is that you cannot open these files outside of the R environment.

Script to save the data to your computer as an .rds file.

Once saved, you could clean your environment and bring the data back in from its .csv format.

If you prefer the .csv format (think “Excel lite”) use this script (remove the hashtags). An advantage of the .csv format is that you can open the data outside of the R environment. A disadvantage is that it may not retain any formatting of variables

Script to save the data to your computer as a .csv file.

Once saved, you could clean your environment and bring the data back in from its .csv format.

11.5 Scrubbing, Scoring, and Data Diagnostics

Because the focus of this lesson is on the specific topic of specifying and evaluating a structural model for SEM and have used simulated data, we are skipping many of the steps in scrubbing, scoring and data diagnostics. If this were real, raw, data, it would be important to scrub, if needed score, and conduct data diagnostics to evaluate the suitability of the data for the proposes analyses.

11.6 Script for Specifying Models in lavaan

SEM in lavaan requires fluency with the R script. Below is a brief overview of the operators we use most frequently:

- Latent variables (factors) must be defined by their manifest or latent indicators.

- the special operator (=~, is measured/defined by) is used for this

- Example: f1 =~ y1 + y2 + y3

- Regression equations use the single tilda (~, is regressed on)

- place DV (y) on left of operator

- place IVs, separate by + on the right

- Example: y ~ f1 + f2 + x1 + x2

- f is a latent variable in this example

- y, x1, and x2 are observed variables in this example

- An asterisk can affix a label in subsequent calculations and in interpreting output

- Variances and covariances are specified with a double tilde operator (~~, is correlated with)

- Example of variance: y1 ~~ y1 (the relationship with itself)

- Example of covariance: y1 ~~ y2 (relationship with another variable)

- Example of covariance of a factor: f1 ~~ f2 *Intercepts (~ 1) for observed and LVs are simple, intercept-only regression formulas

- Example of variable intercept: y1 ~ 1

- Example of factor intercept: f1 ~ 1

A complete lavaan model is a combination of these formula types, enclosed between single quotation models. Readibility of model syntax is improved by:

- splitting formulas over multiple lines

- using blank lines within single quote

- labeling with the hashtag

11.7 Quick Specification of the Measurement Model

Recall that the first step in establishing a structural model is to specify, evaluate, and if necessary re-specify the measurement model. For this data I have specified the measurement model as follows:

- Gendered Racial Microaggressions Scale (GRMS): randomly assign the 26 items to 3 parcels.

- Mental health (MH): randomly assign the 6 items to 3 parcels.

- Physical health (PhH): randomly assign the 6 items to 3 parcels.

- Coping strategies (spiritual/Spr, social support (SSp), engagement (Eng), disengagement (dEng): Constrain the loadings for each of the two variables per construct to be equal.

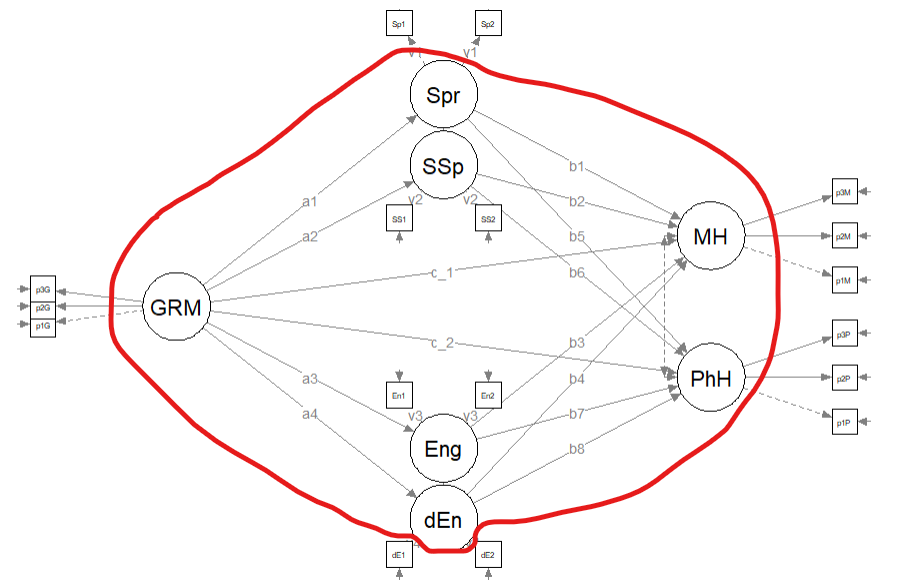

For more information on establishing measurement models please visit the lesson on establishing the measurement model. Here is a representation of the measurement model we are specifying.

To proceed with this approach, I first need to create parcels for the GRMS, MH, and PhH scales. This code randomly assigns the GRMS items to three parcels.

To proceed with this approach, I first need to create parcels for the GRMS, MH, and PhH scales. This code randomly assigns the GRMS items to three parcels.

set.seed(230916)

items <- c("Ob1", "Ob2", "Ob3", "Ob4", "Ob5", "Ob6", "Ob7", "Ob8", "Ob9",

"Ob10", "Ma1", "Ma2", "Ma3", "Ma4", "Ma5", "Ma6", "Ma7", "St1", "St2",

"St3", "St4", "St5", "An1", "An2")

parcels <- c("GRMS_p1", "GRMS_p_2", "GRMS_p3")

data.frame(items = sample(items), parcel = rep(parcels, length = length(items)))## items parcel

## 1 Ma3 GRMS_p1

## 2 Ob7 GRMS_p_2

## 3 Ob9 GRMS_p3

## 4 Ma7 GRMS_p1

## 5 Ma5 GRMS_p_2

## 6 Ob2 GRMS_p3

## 7 Ma1 GRMS_p1

## 8 An1 GRMS_p_2

## 9 Ob4 GRMS_p3

## 10 Ob1 GRMS_p1

## 11 St3 GRMS_p_2

## 12 Ob6 GRMS_p3

## 13 St2 GRMS_p1

## 14 Ob5 GRMS_p_2

## 15 Ob10 GRMS_p3

## 16 Ob8 GRMS_p1

## 17 Ma6 GRMS_p_2

## 18 St5 GRMS_p3

## 19 An2 GRMS_p1

## 20 Ma2 GRMS_p_2

## 21 Ob3 GRMS_p3

## 22 Ma4 GRMS_p1

## 23 St1 GRMS_p_2

## 24 St4 GRMS_p3We can now create the parcels using the same scoring procedure as we did for the REMS and CMI instruments.

GRMS_p1_vars <- c("Ma3", "Ma7", "Ma1", "Ob1", "St2", "Ob8", "An2", "Ma4")

GRMS_p2_vars <- c("Ob7", "Ma5", "An1", "St3", "Ob5", "Ma6", "Ma2", "St1")

GRMS_p3_vars <- c("Ob9", "Ob2", "Ob4", "Ob6", "Ob10", "St5", "Ob3", "St4")

dfLewis$p1GRMS <- sjstats::mean_n(dfLewis[, GRMS_p1_vars], 0.75)

dfLewis$p2GRMS <- sjstats::mean_n(dfLewis[, GRMS_p2_vars], 0.75)

dfLewis$p3GRMS <- sjstats::mean_n(dfLewis[, GRMS_p3_vars], 0.75)

# If the scoring code above does not work for you, try the format

# below which involves inserting to periods in front of the variable

# list. One example is provided. dfLewis$p3PWB <-

# sjstats::mean_n(dfLewis[, ..PWB_p3_vars], .75)This code randomly assigns the mental health items to three parcels.

set.seed(230916)

items <- c("MH1", "MH2", "MH3", "MH4", "MH5", "MH6")

parcels <- c("MH_p1", "MH_p2", "MH_p3")

data.frame(items = sample(items), parcel = rep(parcels, length = length(items)))## items parcel

## 1 MH5 MH_p1

## 2 MH1 MH_p2

## 3 MH4 MH_p3

## 4 MH6 MH_p1

## 5 MH3 MH_p2

## 6 MH2 MH_p3This code provides means for each of the three REMS parcels.

MH_p1_vars <- c("MH5", "MH6")

MH_p2_vars <- c("MH1", "MH3")

MH_p3_vars <- c("MH4", "MH2")

dfLewis$p1MH <- sjstats::mean_n(dfLewis[, MH_p1_vars], 0.75)

dfLewis$p2MH <- sjstats::mean_n(dfLewis[, MH_p2_vars], 0.75)

dfLewis$p3MH <- sjstats::mean_n(dfLewis[, MH_p3_vars], 0.75)

# If the scoring code above does not work for you, try the format

# below which involves inserting to periods in front of the variable

# list. One example is provided. dfLewis$p3REMS <-

# sjstats::mean_n(dfLewis[, ..REMS_p3_vars], .80)This code randomly assigns the physical health items to three parcels.

set.seed(230916)

items <- c("PhH1", "PhH2", "PhH3", "PhH4", "PhH5", "PhH6")

parcels <- c("PhH_p1", "PhH_p2", "PhH_p3")

data.frame(items = sample(items), parcel = rep(parcels, length = length(items)))## items parcel

## 1 PhH5 PhH_p1

## 2 PhH1 PhH_p2

## 3 PhH4 PhH_p3

## 4 PhH6 PhH_p1

## 5 PhH3 PhH_p2

## 6 PhH2 PhH_p3This code provides means for each of the three REMS parcels.

PhH_p1_vars <- c("PhH5", "PhH6")

PhH_p2_vars <- c("PhH1", "PhH3")

PhH_p3_vars <- c("PhH4", "PhH2")

dfLewis$p1PhH <- sjstats::mean_n(dfLewis[, PhH_p1_vars], 0.75)

dfLewis$p2PhH <- sjstats::mean_n(dfLewis[, PhH_p2_vars], 0.75)

dfLewis$p3PhH <- sjstats::mean_n(dfLewis[, PhH_p3_vars], 0.75)

# If the scoring code above does not work for you, try the format

# below which involves inserting to periods in front of the variable

# list. One example is provided. dfLewis$p3REMS <-

# sjstats::mean_n(dfLewis[, ..REMS_p3_vars], .80)Below is code for specifying the measurement model. Each of the latent variables/factors (REMS, CMI, PWB) is identified by three parcels. Each of the latent variables is allowed to covary with the others.

msmt_mod <- "

##measurement model

GRMS =~ p1GRMS + p2GRMS + p3GRMS

MH =~ p1MH + p2MH + p3MH

PhH =~ p1PhH + p2PhH + p3PhH

Spr =~ v1*Spirit1 + v1*Spirit2

SSp =~ v2*SocS1 + v2*SocS2

Eng =~ v3*Eng1 + v3*Eng2

dEng =~ v4*dEng1 + v4*dEng2

# Covariances

GRMS ~~ MH

GRMS ~~ PhH

GRMS ~~ Spr

GRMS ~~ SSp

GRMS ~~ Eng

GRMS ~~ dEng

MH ~~ PhH

MH ~~ Spr

MH ~~ SSp

MH ~~ Eng

MH ~~ dEng

PhH ~~ Spr

PhH ~~ SSp

PhH ~~ Eng

PhH ~~ dEng

Spr ~~ SSp

Spr ~~ Eng

Spr ~~ dEng

SSp ~~ Eng

SSp ~~ dEng

Eng ~~ dEng

"

set.seed(230916)

msmt_fit <- lavaan::cfa(msmt_mod, data = dfLewis, missing = "fiml")

msmt_fit_sum <- lavaan::summary(msmt_fit, fit.measures = TRUE, standardized = TRUE)

msmt_fit_pEsts <- lavaan::parameterEstimates(msmt_fit, boot.ci.type = "bca.simple",

standardized = TRUE)

msmt_fit_sum## lavaan 0.6.17 ended normally after 106 iterations

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 68

##

## Number of observations 231

## Number of missing patterns 1

##

## Model Test User Model:

##

## Test statistic 94.122

## Degrees of freedom 102

## P-value (Chi-square) 0.698

##

## Model Test Baseline Model:

##

## Test statistic 2104.157

## Degrees of freedom 136

## P-value 0.000

##

## User Model versus Baseline Model:

##

## Comparative Fit Index (CFI) 1.000

## Tucker-Lewis Index (TLI) 1.005

##

## Robust Comparative Fit Index (CFI) 1.000

## Robust Tucker-Lewis Index (TLI) 1.005

##

## Loglikelihood and Information Criteria:

##

## Loglikelihood user model (H0) -3376.747

## Loglikelihood unrestricted model (H1) -3329.686

##

## Akaike (AIC) 6889.494

## Bayesian (BIC) 7123.579

## Sample-size adjusted Bayesian (SABIC) 6908.057

##

## Root Mean Square Error of Approximation:

##

## RMSEA 0.000

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.028

## P-value H_0: RMSEA <= 0.050 1.000

## P-value H_0: RMSEA >= 0.080 0.000

##

## Robust RMSEA 0.000

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.028

## P-value H_0: Robust RMSEA <= 0.050 1.000

## P-value H_0: Robust RMSEA >= 0.080 0.000

##

## Standardized Root Mean Square Residual:

##

## SRMR 0.034

##

## Parameter Estimates:

##

## Standard errors Standard

## Information Observed

## Observed information based on Hessian

##

## Latent Variables:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## GRMS =~

## p1GRMS 1.000 0.726 0.956

## p2GRMS 1.014 0.030 34.037 0.000 0.736 0.961

## p3GRMS 0.904 0.030 30.069 0.000 0.656 0.934

## MH =~

## p1MH 1.000 0.624 0.765

## p2MH 1.237 0.128 9.696 0.000 0.773 0.728

## p3MH 1.241 0.112 11.044 0.000 0.775 0.776

## PhH =~

## p1PhH 1.000 0.600 0.662

## p2PhH 0.997 0.125 7.959 0.000 0.598 0.714

## p3PhH 1.060 0.139 7.643 0.000 0.636 0.727

## Spr =~

## Spirit1 (v1) 1.000 0.488 0.730

## Spirit2 (v1) 1.000 0.488 0.762

## SSp =~

## SocS1 (v2) 1.000 0.425 0.704

## SocS2 (v2) 1.000 0.425 0.654

## Eng =~

## Eng1 (v3) 1.000 0.409 0.612

## Eng2 (v3) 1.000 0.409 0.701

## dEng =~

## dEng1 (v4) 1.000 0.410 0.684

## dEng2 (v4) 1.000 0.410 0.652

##

## Covariances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## GRMS ~~

## MH -0.290 0.042 -6.868 0.000 -0.639 -0.639

## PhH -0.185 0.039 -4.698 0.000 -0.425 -0.425

## Spr 0.289 0.034 8.445 0.000 0.815 0.815

## SSp 0.212 0.030 7.160 0.000 0.689 0.689

## Eng 0.204 0.029 7.054 0.000 0.689 0.689

## dEng 0.202 0.029 7.008 0.000 0.680 0.680

## MH ~~

## PhH 0.200 0.040 5.041 0.000 0.533 0.533

## Spr -0.155 0.030 -5.177 0.000 -0.507 -0.507

## SSp -0.113 0.027 -4.222 0.000 -0.427 -0.427

## Eng -0.090 0.025 -3.558 0.000 -0.353 -0.353

## dEng -0.168 0.028 -6.022 0.000 -0.656 -0.656

## PhH ~~

## Spr -0.090 0.028 -3.205 0.001 -0.307 -0.307

## SSp -0.069 0.026 -2.699 0.007 -0.271 -0.271

## Eng -0.052 0.025 -2.111 0.035 -0.212 -0.212

## dEng -0.101 0.026 -3.830 0.000 -0.411 -0.411

## Spr ~~

## SSp 0.153 0.023 6.739 0.000 0.738 0.738

## Eng 0.140 0.022 6.409 0.000 0.704 0.704

## dEng 0.129 0.022 5.998 0.000 0.646 0.646

## SSp ~~

## Eng 0.076 0.019 4.005 0.000 0.439 0.439

## dEng 0.070 0.019 3.693 0.000 0.400 0.400

## Eng ~~

## dEng 0.088 0.019 4.657 0.000 0.525 0.525

##

## Intercepts:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .p1GRMS 2.592 0.050 51.897 0.000 2.592 3.415

## .p2GRMS 2.545 0.050 50.567 0.000 2.545 3.327

## .p3GRMS 2.579 0.046 55.829 0.000 2.579 3.673

## .p1MH 3.582 0.054 66.695 0.000 3.582 4.388

## .p2MH 2.866 0.070 41.068 0.000 2.866 2.702

## .p3MH 3.035 0.066 46.200 0.000 3.035 3.040

## .p1PhH 3.128 0.060 52.418 0.000 3.128 3.449

## .p2PhH 2.652 0.055 48.093 0.000 2.652 3.164

## .p3PhH 3.067 0.058 53.298 0.000 3.067 3.507

## .Spirit1 2.511 0.044 57.110 0.000 2.511 3.758

## .Spirit2 2.437 0.042 57.882 0.000 2.437 3.808

## .SocS1 2.550 0.040 64.254 0.000 2.550 4.228

## .SocS2 2.758 0.043 64.576 0.000 2.758 4.249

## .Eng1 2.437 0.044 55.427 0.000 2.437 3.647

## .Eng2 2.515 0.038 65.479 0.000 2.515 4.308

## .dEng1 2.502 0.039 63.518 0.000 2.502 4.179

## .dEng2 2.455 0.041 59.357 0.000 2.455 3.905

##

## Variances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .p1GRMS 0.050 0.008 6.600 0.000 0.050 0.086

## .p2GRMS 0.044 0.007 6.015 0.000 0.044 0.076

## .p3GRMS 0.063 0.008 8.289 0.000 0.063 0.128

## .p1MH 0.277 0.037 7.437 0.000 0.277 0.415

## .p2MH 0.528 0.067 7.935 0.000 0.528 0.469

## .p3MH 0.396 0.055 7.225 0.000 0.396 0.397

## .p1PhH 0.463 0.058 7.996 0.000 0.463 0.562

## .p2PhH 0.344 0.049 7.098 0.000 0.344 0.490

## .p3PhH 0.360 0.054 6.723 0.000 0.360 0.471

## .Spirit1 0.208 0.026 8.152 0.000 0.208 0.467

## .Spirit2 0.171 0.023 7.433 0.000 0.171 0.419

## .SocS1 0.183 0.025 7.225 0.000 0.183 0.504

## .SocS2 0.241 0.029 8.235 0.000 0.241 0.572

## .Eng1 0.279 0.032 8.627 0.000 0.279 0.625

## .Eng2 0.173 0.025 6.893 0.000 0.173 0.509

## .dEng1 0.191 0.025 7.482 0.000 0.191 0.532

## .dEng2 0.227 0.028 8.127 0.000 0.227 0.575

## GRMS 0.527 0.054 9.796 0.000 1.000 1.000

## MH 0.390 0.062 6.241 0.000 1.000 1.000

## PhH 0.360 0.074 4.854 0.000 1.000 1.000

## Spr 0.238 0.032 7.412 0.000 1.000 1.000

## SSp 0.180 0.028 6.378 0.000 1.000 1.000

## Eng 0.167 0.028 6.042 0.000 1.000 1.000

## dEng 0.168 0.027 6.208 0.000 1.000 1.000# msmt_fit_pEsts #although creating the object is useful to export as

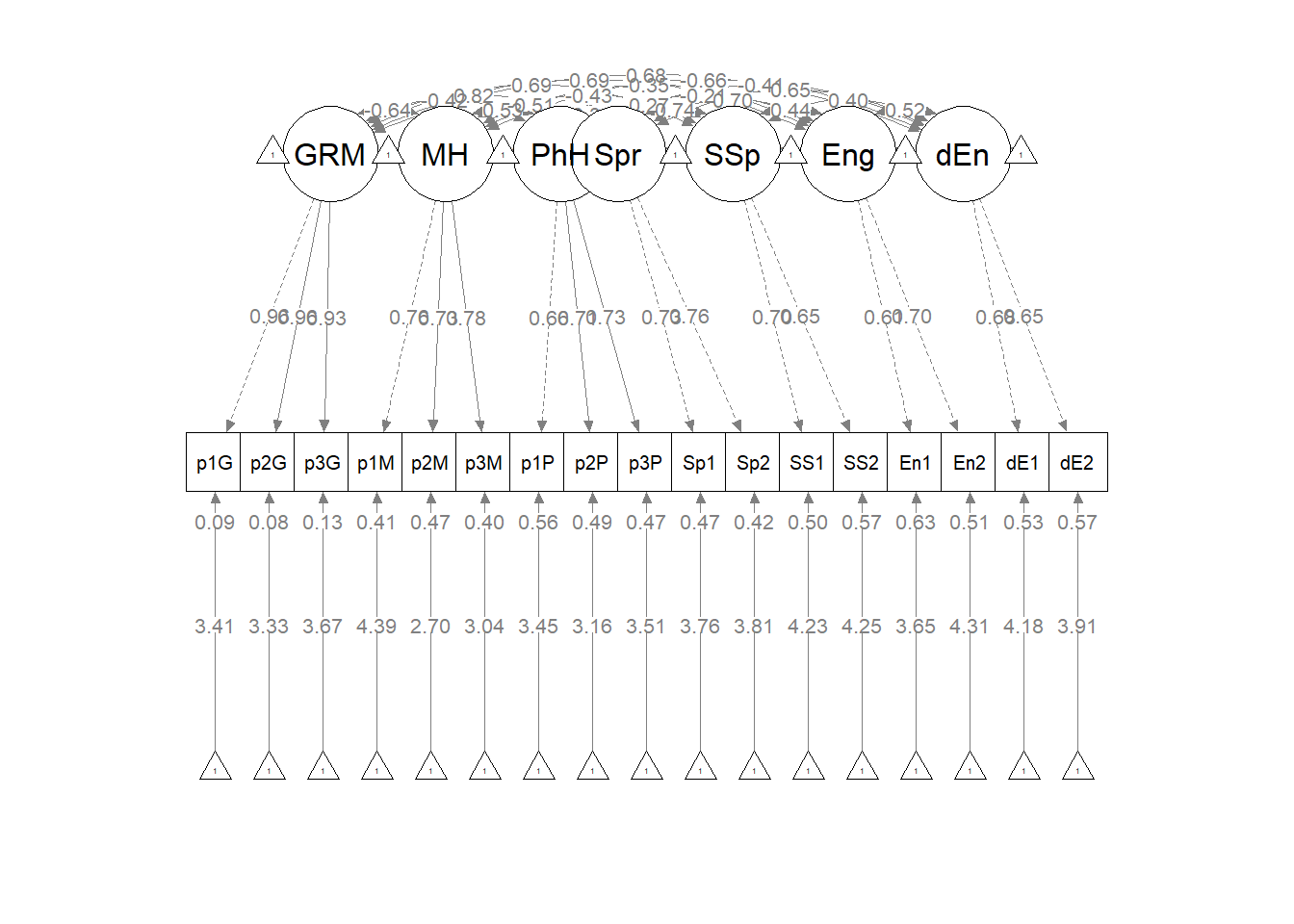

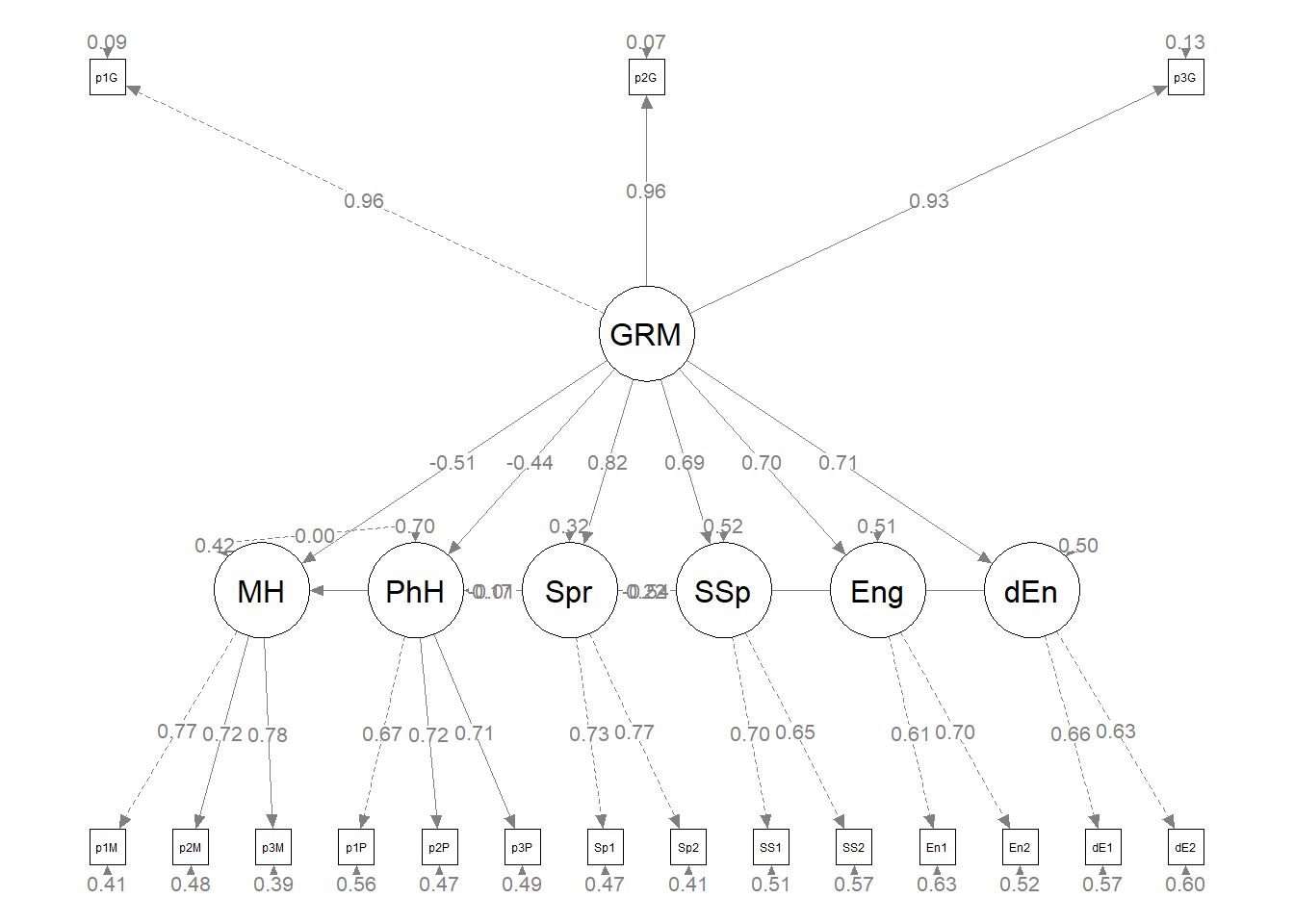

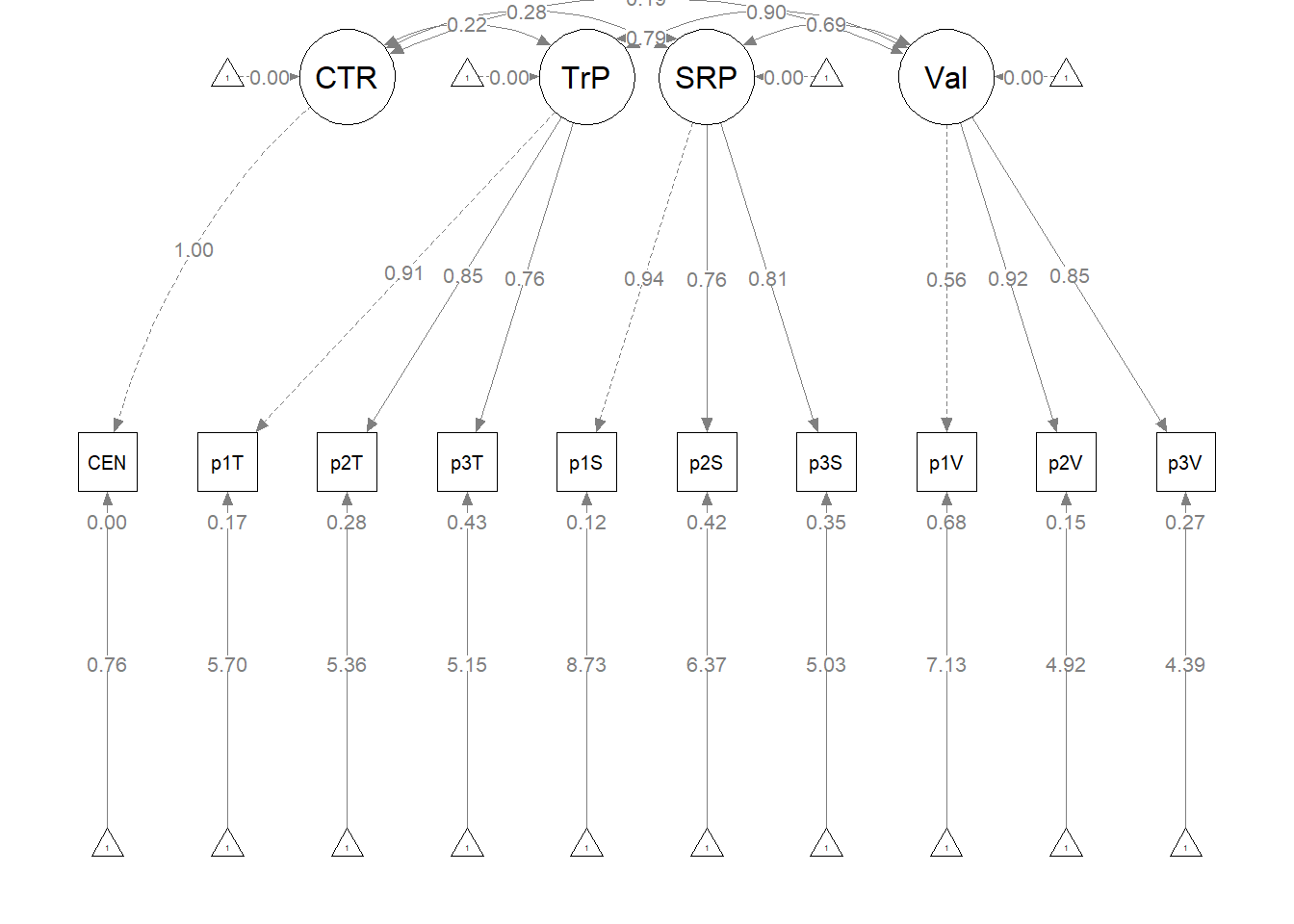

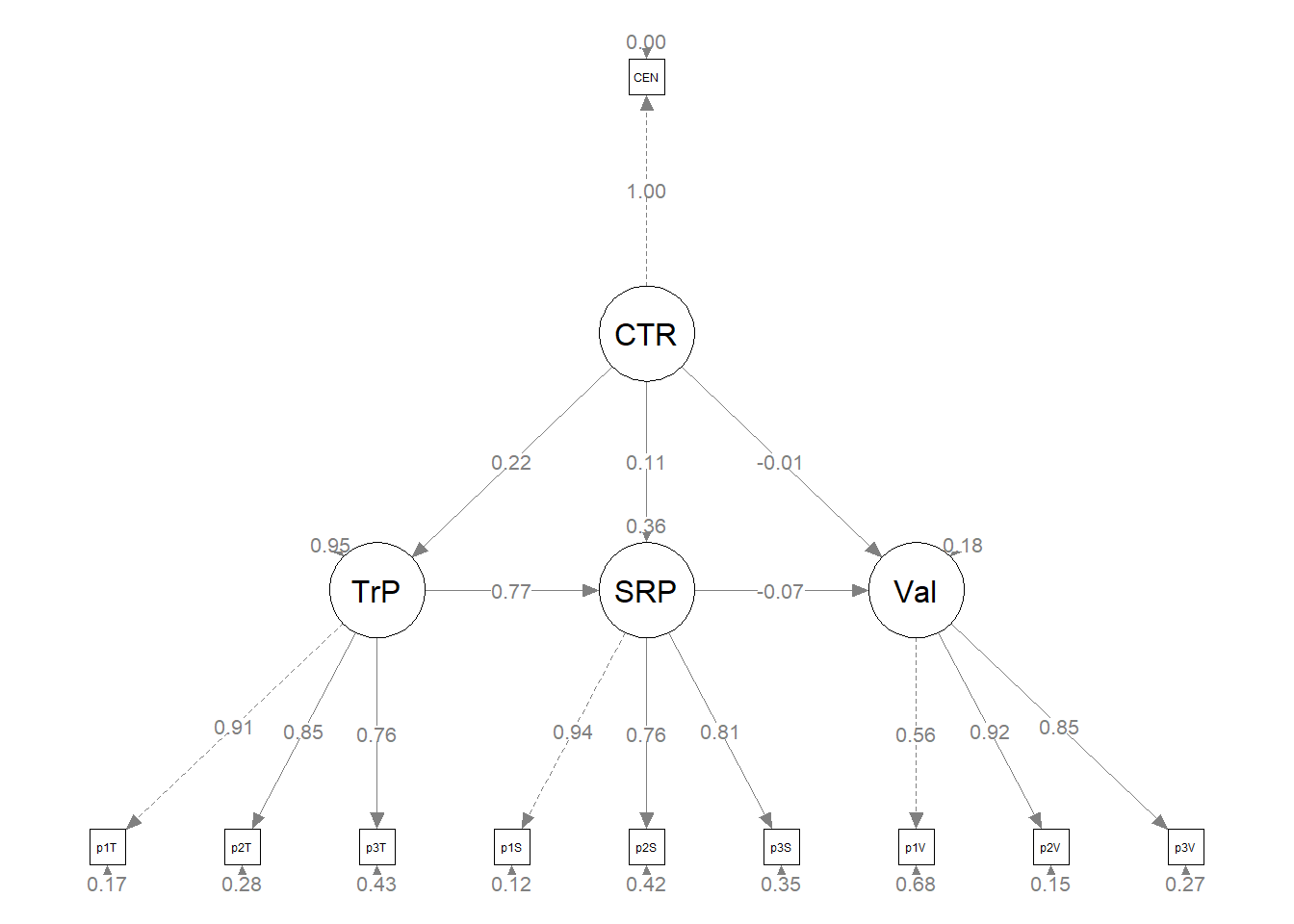

# a .csv I didn't ask it to print into the bookThe factor loadings were all strong, statistically significant, and properly valenced. Further, global fit statistics were within acceptable thresholds (\(\chi^2(102) = 94.122, p = 0.698, CFI = 1.000, RMSEA = 0.000, 90CI[0.000, 0.028], SRMR = 0.034\)).

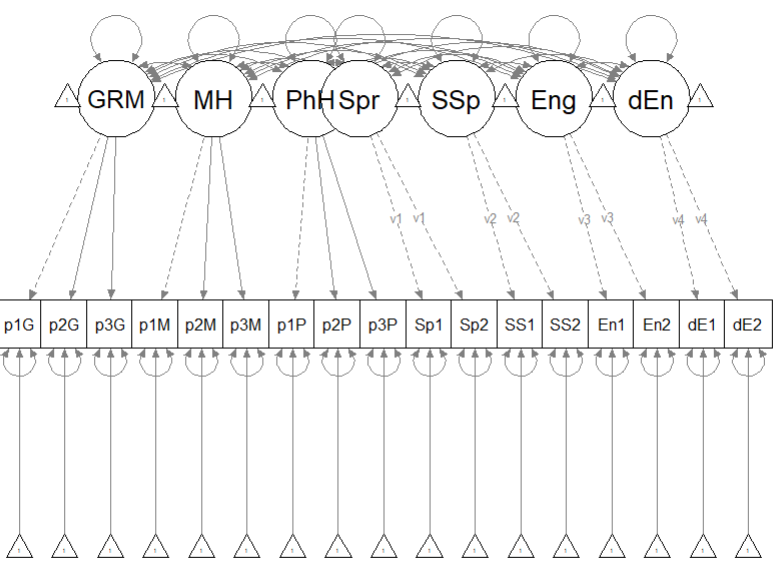

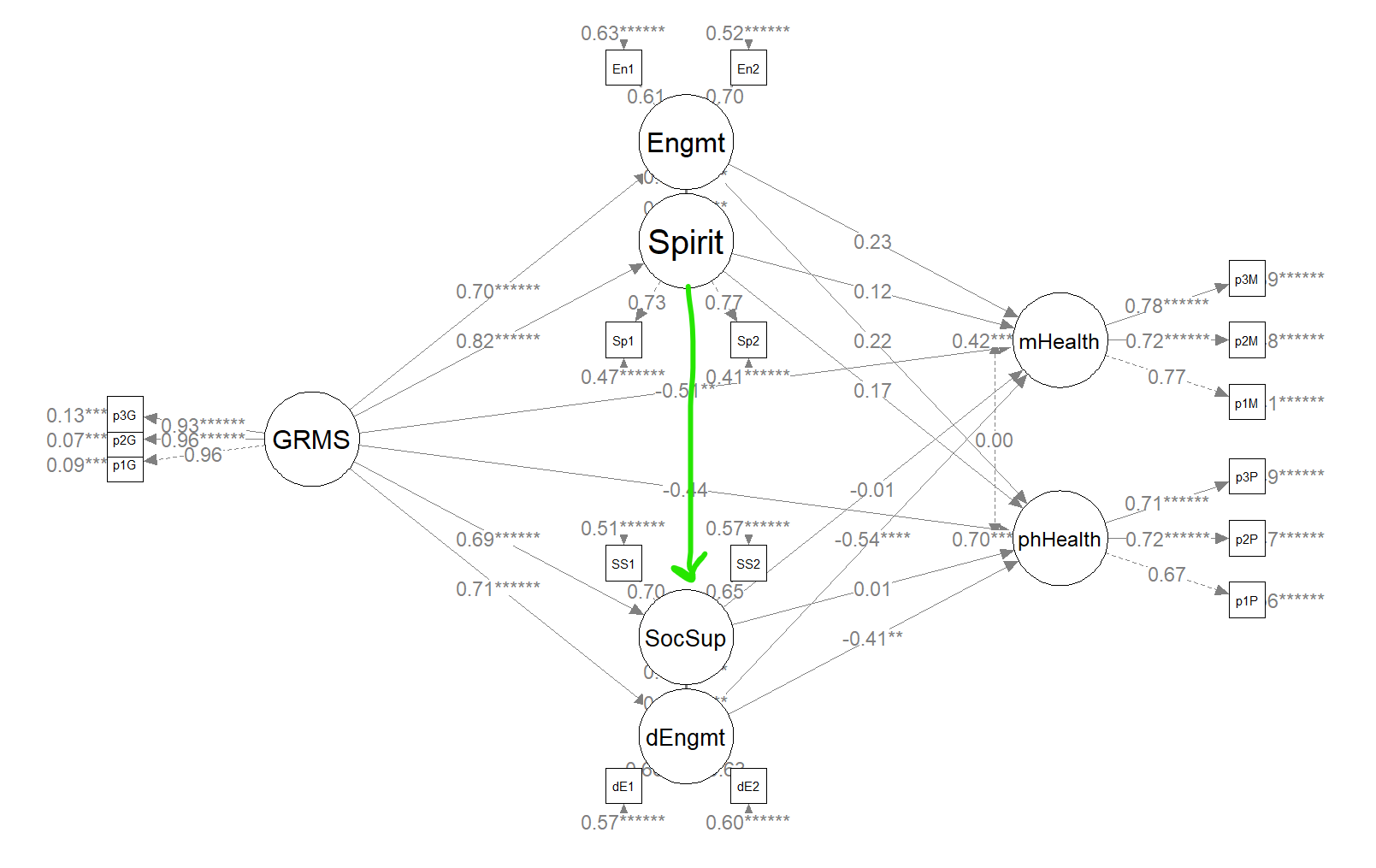

The figure below is an illustration of our measurement model with its results. It also conveys that each latent variable is indicated by three parcels and all of the latent variables are allowed to covary.

semPlot::semPaths(msmt_fit, what = "col", whatLabels = "stand", sizeMan = 5,

node.width = 1, edge.label.cex = 0.75, style = "lisrel", mar = c(5,

5, 5, 5))

# semPlot::semPaths(msmt_fit) #ignore -- used to create a no-results

# figure earlier in the chapterBelow is script that will export the global fit indices (via tidySEM::table_fit) and the parameter estimates (e.g., factor loadings, structural regression weights, and parameters we requested such as the indirect effect) to .csv files that you can manipulate outside of R.

## Registered S3 method overwritten by 'tidySEM':

## method from

## predict.MxModel OpenMxwrite.csv(msmt_fitstats, file = "msmt_fitstats.csv")

# the code below writes the parameter estimates into a .csv file

write.csv(msmt_fit_pEsts, file = "msmt_pEsts.csv")Here’s how I might write an APA style summary of establishing the measurement model.

Analyzing our proposed multiple mediator model followed the two-step procedure of first evaluating a measurement model with acceptable fit to the data and then proceeding to test the structural model. Given that different researchers recommend somewhat differing thresholds to determine the adequacy of fit, We used the following as evidence of good fit: comparative fit indix (CFI) \(\geq 0.95\), root-mean-square error of approximation (RMSEA) \(\leq 0.06\), and the standard root-mean-square residual (SRMR) \(\leq 0.08\). To establish aceptable fit, we used CFI \(\geq 0.90\), RMSEA \(\leq 0.10\), and SRMR \(\leq 0.10\) (Weston & Gore, 2006).

To evaluate the measurement model we followed recommendations by Little et al. (T. D. Little et al., 2002, 2013). Specifically, each latent variable with six or more indicators was represented by three parcels. Parcels were created by randomly assigning scale items to the parcels and then calculating the mean, if at least 65% of the items were non-missing. For the four latent variables with only two indicators each, we constrained the factor loadings to be equal. Factor loadings were all strong, statistically significant, and properly valenced. Global fit statistics were within acceptable thresholds (\(\chi^2(102) = 94.122, p = 0.698, CFI = 1.000, RMSEA = 0.000, 90CI[0.000, 0.028], SRMR = 0.034\)). Thus, we proceeded to testing the structural model.

Table 1

| Factor Loadings for the Measurement Model |

|---|

| Latent variable and indicator | est | SE | p | est_std |

| Gendered Racial Microaggressions | ||||

| Parcel 1 | 1.000 | 0.000 | 0.956 | |

| Parcel 2 | 1.014 | 0.030 | <0.001 | 0.961 |

| Parcel 3 | 1.904 | 0.030 | <0.001 | 0.934 |

| Mental Health | ||||

| Parcel 1 | 1.000 | 0.000 | 0.765 | |

| Parcel 2 | 1.237 | 0.128 | <0.001 | 0.728 |

| Parcel 3 | 1.241 | 0.112 | <0.001 | 0.776 |

| Physical Health | ||||

| Parcel 1 | 1.000 | 0.000 | 0.662 | |

| Parcel 2 | 0.997 | 0.125 | <0.001 | 0.714 |

| Parcel 3 | 1.060 | 0.139 | <0.001 | 0.727 |

| Spiritual Coping | ||||

| Item 1 | 1.000 | 0.000 | 0.730 | |

| Item 2 | 1.000 | 0.000 | 0.762 | |

| Social Support Coping | ||||

| Item 1 | 1.000 | 0.000 | 0.704 | |

| Item 2 | 1.000 | 0.000 | 0.654 | |

| Engagement Coping | ||||

| Item 1 | 1.000 | 0.000 | 0.612 | |

| Item 2 | 1.000 | 0.000 | 0.701 | |

| Disengagement Coping | ||||

| Item 1 | 1.000 | 0.000 | 0.684 | |

| Item 2 | 1.000 | 0.000 | 0.652 |

Having established that our measurement model is adequate, we are ready to replace the covariances between latent variables with the paths (directional) and covariances (bidirectional) we hypothesize. These paths and covariances are soft hypotheses. That is, we are “freeing” them to relate. In SEM, hard hypotheses are where no path/covariance exists and the relationship between these variables is “fixed” to zero. This is directly related to degrees of freedom and the identification status (just-identified, over-identified, underidentified) of the model.

11.8 Specifying and Evaluating the Hypothesized Structural Model

As described more completely in the lesson on specifying and evaluating the structural model, the structural model evaluates the hypothesized relations between the latent variables. The structural model is typically more parsimonious (i.e., not saturated) than the measurement model and is characterized by directional paths (not covariances) between some (not all) of the variables.

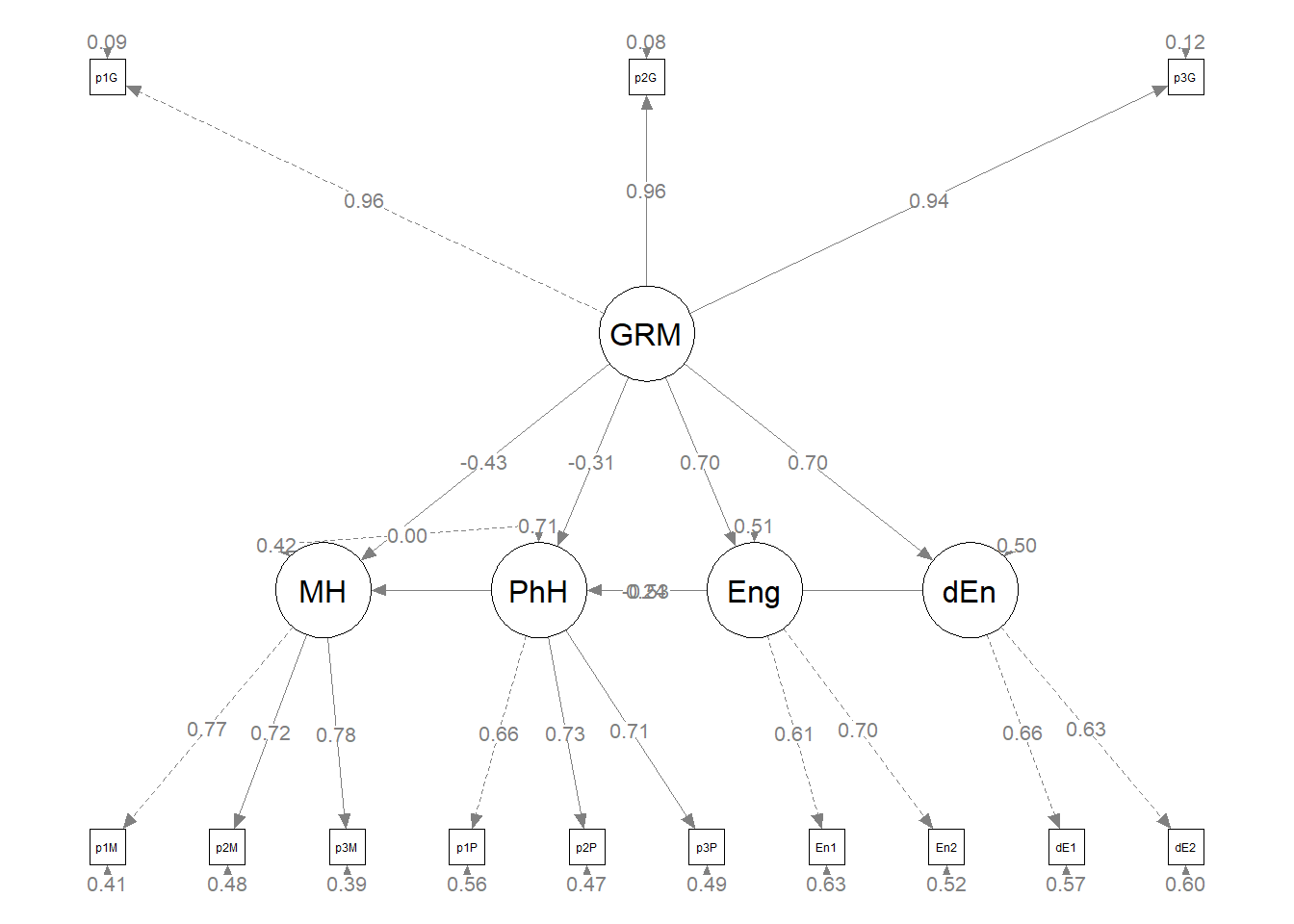

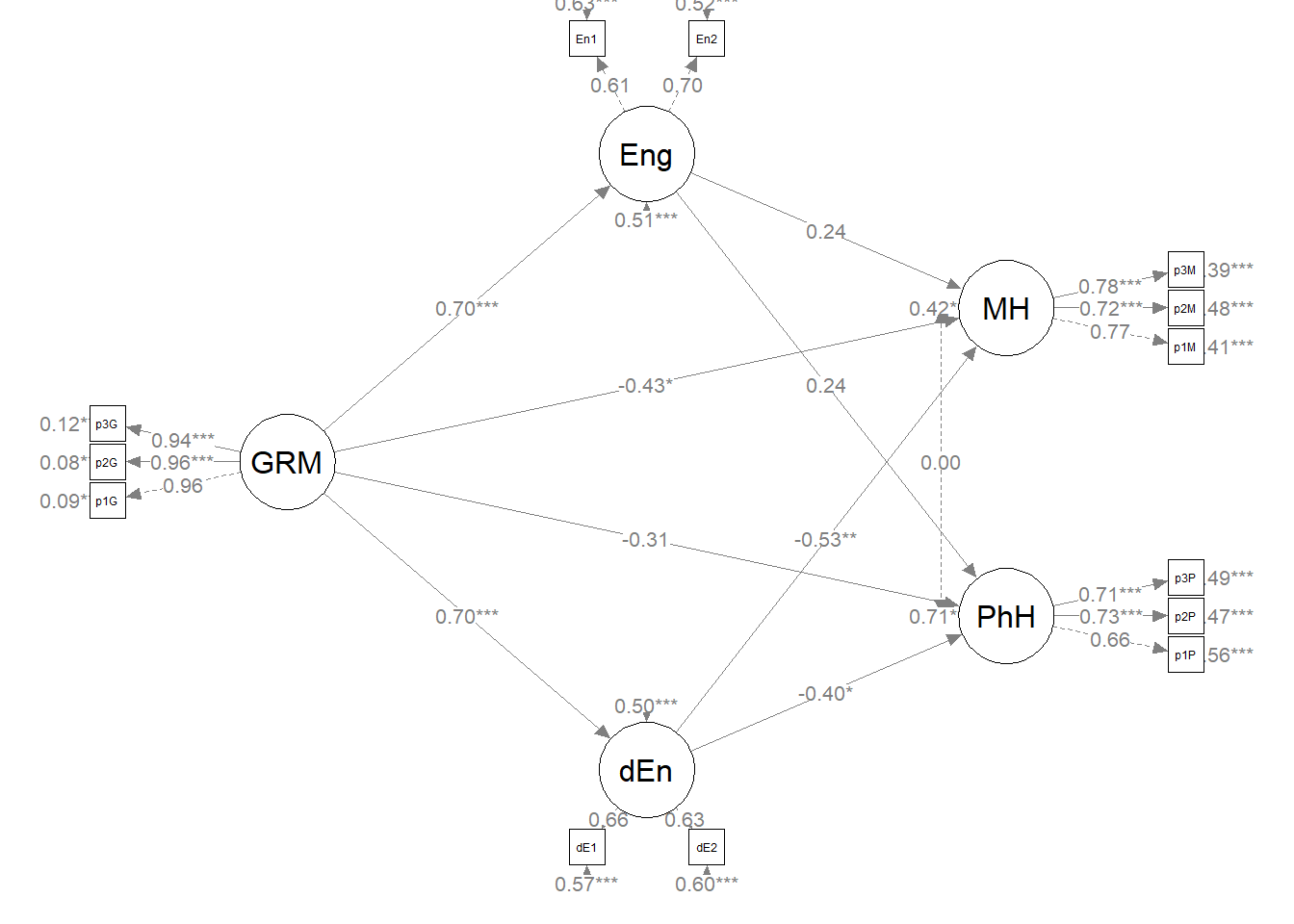

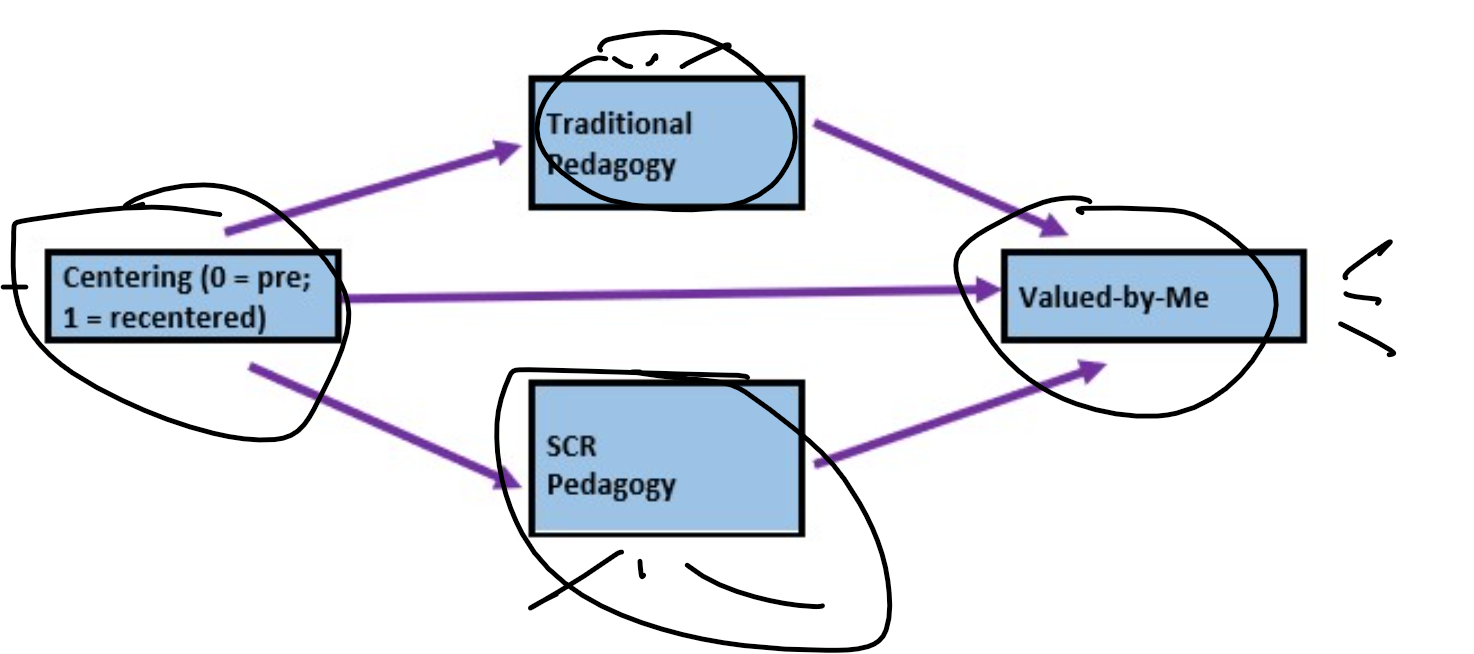

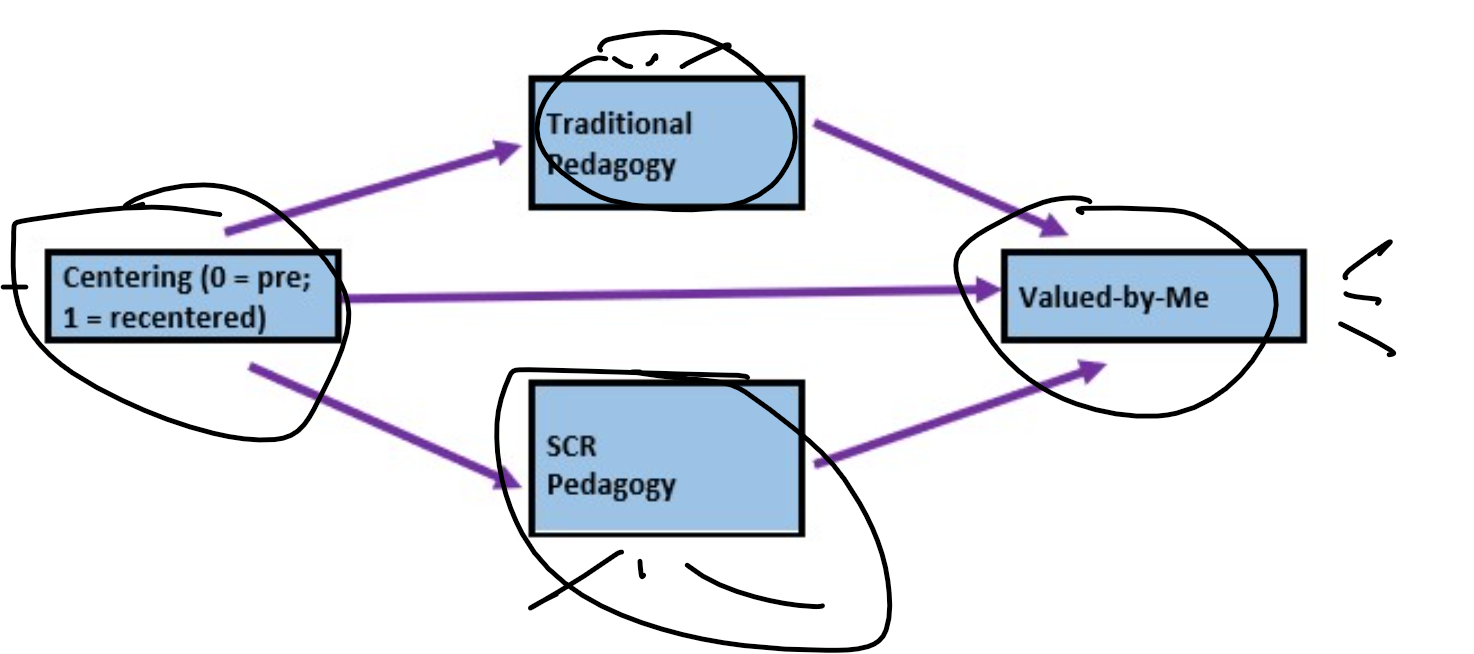

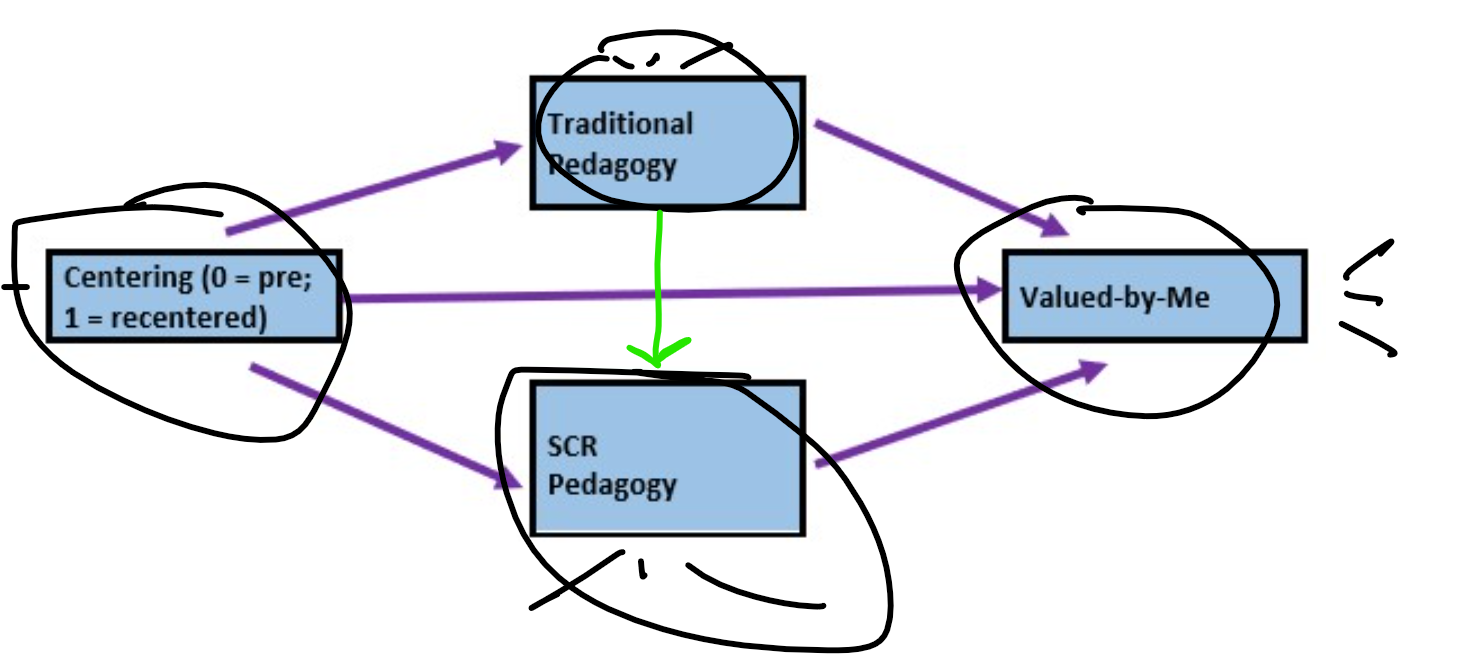

Here’s a quick reminder of the hypothesized model we are testing. Using data simulated from the Lewis et al. (2017)article, we are evaluating a parallel mediation model, predicting mental and physical health directly from gendered racial microaggressions and indirectly through four approaches to coping (i.e., spiritual, social support, engagement, disengagement). This model is hybrid because it include measurement models (i.e., latent variables indicated by their parcels), plus the hypothesized paths.

11.8.1 Model Identification for the Hypothesized (Original) Model

In order to be evaluated, structural models need to be just identifed (\(df_M = 0\)) or overidentified (\(df_M > 0\)). Computer programs are not (yet) good at estimating identification status because it is based on symbolism and not numbers. Therefore, we researchers must do the mental math to ensure that our knowns (measured/observed variables) are equal (just-identified) or greater than (overidentified) our unknowns (parameters that will be estimated). Model identification is described more completely in the lesson on specifying and evaluating the structural model,

Knowns: \(\frac{k(k+1)}{2}\) where k is the number of constructs (humoR: konstructs?)in the model. In our case, we have seven constructs. Deploying the formula below, we learn that we have 21 knowns.

## [1] 28Unknowns: are calculated with the following

- Exogenous (predictor) variables (1 variance estimated for each): we have 1 (GRMS)

- Endogenous (predicted) variables (1 disturbance variance for each): we have 6 (Spr, SSp, Eng, dEng, MH, PhH)

- Correlations between variables (1 covariance for each pairing): we have 0

- Regression paths (arrows linking exogenous variables to endogenous variables): we have 14

With 28 knowns and 20 unknowns, we have 8 degrees of freedom in the structural portion of the model. This is an over-identified model.

11.8.1.1 Specifying and Evaluating the Structural Model

Specifying our structural model in lavaan includes script for the measurement model, the structural model, and any additional model parameters (e.g., indirect and total effects) that we might add. In the script below you will see each of these elements.

- the mediating variables (Spr, SSp, Eng, dEng) are predicted by the independent variable (GRMS),

- the dependent variables (MH, PhH) are predicted by the independent variable (GRMS) and the mediating variables (Spr, SSp, Eng, dEng),

- labels are assigned to represent the \(a\), \(b\), and \(c'\) paths

- calculations that use the labels will estimate the indirect, direct, and total paths

In the model specification below, there are more elements to note. I have chosen to specify the dependent variables with all of the variables that predict them in a single line of code. In the script below I wrote:

It is equally acceptable to list them specify them from fewer predictors at a time. For example, I could have written

Because lavaan has elements of randomness in its algorithms (particularly around its version of bias-corrected, bootstrapped confidence intervals), including a set.seed function will facilitate the reproducibility of results.

If the data contain missing values, the default behavior in lavaan::sem is listwise deletion. If we can presume that the missing mechanism is MCAR or MAR (e.g., there is no systematic missingness), we can specify a full information maximum likelihood (FIML) estimation procedure with the missing = “fiml” argument. Recall that we retained cases if they had 20% or less missing. Using the “fiml” option is part of the AIA approach (Parent, 2013).

In the lavaan::summary function, we will want to retrieve the global fit indices with the fit.measures=TRUE. Because SEM figures are often represented with standardized values, we will want standardized = TRUE. And if we wish to know the proportion of variance predicted in our endogenous variables, we will include rsq = TRUE.

In the lavaan::parameterEstimates we can obtain lavaan’s version of bias-corrected bootstrapped confidence intervals (they aren’t quite the same) by including boot.ci.type = “bca.simple”.

struct_mod1 <- "

##measurement model

GRMS =~ p1GRMS + p2GRMS + p3GRMS

MH =~ p1MH + p2MH + p3MH

PhH =~ p1PhH + p2PhH + p3PhH

Spr =~ v1*Spirit1 + v1*Spirit2

SSp =~ v2*SocS1 + v2*SocS2

Eng =~ v3*Eng1 + v3*Eng2

dEng =~ v4*dEng1 + v4*dEng2

#structural model with labels for calculation of the indirect effect

Eng ~ a1*GRMS

Spr ~ a2*GRMS

SSp ~ a3*GRMS

dEng ~ a4*GRMS

MH ~ c_p1*GRMS + b1*Eng + b2*Spr + b3*SSp + b4*dEng

PhH ~ c_p2*GRMS + b5*Eng + + b6*Spr + b7*SSp + b8*dEng

#cov

MH ~~ 0*PhH #prevents MH and PhD from correlating

#calculations

indirect.EngMH := a1*b1

indirect.SprMH := a2*b2

indirect.SSpMH := a3*b3

indirect.dEngMH := a4*b4

indirect.EngPhH := a1*b5

indirect.SprPhH := a2*b6

indirect.SSpPhH := a3*b7

indirect.dEngPhH := a4*b8

direct.MH := c_p1

direct.PhH := c_p2

total.MH := c_p1 + (a1*b1) + (a2*b2) + (a3*b3) + (a4*b4)

total.PhH := c_p2 + (a1*b5) + (a1*b6) + (a1*b7) + (a1*b8)

"

set.seed(230916) #needed for reproducibility especially when specifying bootstrapped confidence intervals

struct_fit1 <- lavaan::sem(struct_mod1, data = dfLewis, missing = "fiml")

struct_summary1 <- lavaan::summary(struct_fit1, fit.measures = TRUE, standardized = TRUE,

rsq = TRUE)

struct_pEsts1 <- lavaan::parameterEstimates(struct_fit1, boot.ci.type = "bca.simple",

standardized = TRUE)

struct_summary1## lavaan 0.6.17 ended normally after 117 iterations

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 61

##

## Number of observations 231

## Number of missing patterns 1

##

## Model Test User Model:

##

## Test statistic 120.324

## Degrees of freedom 109

## P-value (Chi-square) 0.216

##

## Model Test Baseline Model:

##

## Test statistic 2104.157

## Degrees of freedom 136

## P-value 0.000

##

## User Model versus Baseline Model:

##

## Comparative Fit Index (CFI) 0.994

## Tucker-Lewis Index (TLI) 0.993

##

## Robust Comparative Fit Index (CFI) 0.994

## Robust Tucker-Lewis Index (TLI) 0.993

##

## Loglikelihood and Information Criteria:

##

## Loglikelihood user model (H0) -3389.848

## Loglikelihood unrestricted model (H1) -3329.686

##

## Akaike (AIC) 6901.696

## Bayesian (BIC) 7111.683

## Sample-size adjusted Bayesian (SABIC) 6918.348

##

## Root Mean Square Error of Approximation:

##

## RMSEA 0.021

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.041

## P-value H_0: RMSEA <= 0.050 0.995

## P-value H_0: RMSEA >= 0.080 0.000

##

## Robust RMSEA 0.021

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.041

## P-value H_0: Robust RMSEA <= 0.050 0.995

## P-value H_0: Robust RMSEA >= 0.080 0.000

##

## Standardized Root Mean Square Residual:

##

## SRMR 0.044

##

## Parameter Estimates:

##

## Standard errors Standard

## Information Observed

## Observed information based on Hessian

##

## Latent Variables:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## GRMS =~

## p1GRMS 1.000 0.725 0.955

## p2GRMS 1.015 0.030 34.097 0.000 0.736 0.962

## p3GRMS 0.903 0.030 29.818 0.000 0.655 0.932

## MH =~

## p1MH 1.000 0.628 0.769

## p2MH 1.218 0.126 9.663 0.000 0.765 0.721

## p3MH 1.246 0.113 11.045 0.000 0.783 0.783

## PhH =~

## p1PhH 1.000 0.604 0.666

## p2PhH 1.007 0.128 7.878 0.000 0.608 0.725

## p3PhH 1.036 0.135 7.664 0.000 0.625 0.715

## Spr =~

## Spirit1 (v1) 1.000 0.488 0.726

## Spirit2 (v1) 1.000 0.488 0.766

## SSp =~

## SocS1 (v2) 1.000 0.425 0.703

## SocS2 (v2) 1.000 0.425 0.655

## Eng =~

## Eng1 (v3) 1.000 0.406 0.607

## Eng2 (v3) 1.000 0.406 0.696

## dEng =~

## dEng1 (v4) 1.000 0.396 0.658

## dEng2 (v4) 1.000 0.396 0.633

##

## Regressions:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## Eng ~

## GRMS (a1) 0.392 0.041 9.506 0.000 0.701 0.701

## Spr ~

## GRMS (a2) 0.554 0.040 13.869 0.000 0.824 0.824

## SSp ~

## GRMS (a3) 0.406 0.042 9.684 0.000 0.694 0.694

## dEng ~

## GRMS (a4) 0.386 0.041 9.400 0.000 0.707 0.707

## MH ~

## GRMS (c_p1) -0.445 0.205 -2.178 0.029 -0.514 -0.514

## Eng (b1) 0.355 0.223 1.594 0.111 0.229 0.229

## Spr (b2) 0.155 0.219 0.705 0.481 0.120 0.120

## SSp (b3) -0.012 0.187 -0.062 0.950 -0.008 -0.008

## dEng (b4) -0.850 0.303 -2.801 0.005 -0.536 -0.536

## PhH ~

## GRMS (c_p2) -0.369 0.224 -1.650 0.099 -0.444 -0.444

## Eng (b5) 0.330 0.250 1.322 0.186 0.222 0.222

## Spr (b6) 0.215 0.254 0.845 0.398 0.174 0.174

## SSp (b7) 0.016 0.215 0.076 0.939 0.012 0.012

## dEng (b8) -0.624 0.295 -2.115 0.034 -0.409 -0.409

##

## Covariances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .MH ~~

## .PhH 0.000 0.000 0.000

##

## Intercepts:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .p1GRMS 2.592 0.050 51.897 0.000 2.592 3.415

## .p2GRMS 2.545 0.050 50.567 0.000 2.545 3.327

## .p3GRMS 2.579 0.046 55.829 0.000 2.579 3.673

## .p1MH 3.582 0.054 66.615 0.000 3.582 4.383

## .p2MH 2.866 0.070 41.024 0.000 2.866 2.699

## .p3MH 3.035 0.066 46.142 0.000 3.035 3.036

## .p1PhH 3.128 0.060 52.388 0.000 3.128 3.447

## .p2PhH 2.652 0.055 48.061 0.000 2.652 3.162

## .p3PhH 3.067 0.058 53.263 0.000 3.067 3.504

## .Spirit1 2.511 0.044 56.810 0.000 2.511 3.738

## .Spirit2 2.437 0.042 58.200 0.000 2.437 3.829

## .SocS1 2.550 0.040 64.175 0.000 2.550 4.222

## .SocS2 2.758 0.043 64.662 0.000 2.758 4.254

## .Eng1 2.437 0.044 55.380 0.000 2.437 3.644

## .Eng2 2.515 0.038 65.524 0.000 2.515 4.311

## .dEng1 2.502 0.040 63.179 0.000 2.502 4.157

## .dEng2 2.455 0.041 59.610 0.000 2.455 3.922

##

## Variances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .p1GRMS 0.050 0.007 6.754 0.000 0.050 0.087

## .p2GRMS 0.044 0.007 6.098 0.000 0.044 0.075

## .p3GRMS 0.064 0.008 8.400 0.000 0.064 0.131

## .p1MH 0.273 0.037 7.381 0.000 0.273 0.409

## .p2MH 0.542 0.067 8.057 0.000 0.542 0.480

## .p3MH 0.387 0.055 7.082 0.000 0.387 0.387

## .p1PhH 0.459 0.058 7.914 0.000 0.459 0.557

## .p2PhH 0.334 0.049 6.817 0.000 0.334 0.474

## .p3PhH 0.375 0.054 6.994 0.000 0.375 0.489

## .Spirit1 0.214 0.026 8.135 0.000 0.214 0.473

## .Spirit2 0.167 0.023 7.223 0.000 0.167 0.413

## .SocS1 0.184 0.026 7.155 0.000 0.184 0.506

## .SocS2 0.240 0.029 8.140 0.000 0.240 0.571

## .Eng1 0.282 0.033 8.558 0.000 0.282 0.631

## .Eng2 0.175 0.026 6.694 0.000 0.175 0.515

## .dEng1 0.205 0.029 7.114 0.000 0.205 0.567

## .dEng2 0.235 0.030 7.701 0.000 0.235 0.599

## GRMS 0.526 0.054 9.787 0.000 1.000 1.000

## .MH 0.164 0.042 3.930 0.000 0.416 0.416

## .PhH 0.255 0.058 4.403 0.000 0.700 0.700

## .Spr 0.076 0.019 4.051 0.000 0.321 0.321

## .SSp 0.094 0.021 4.413 0.000 0.519 0.519

## .Eng 0.084 0.022 3.808 0.000 0.509 0.509

## .dEng 0.078 0.024 3.330 0.001 0.500 0.500

##

## R-Square:

## Estimate

## p1GRMS 0.913

## p2GRMS 0.925

## p3GRMS 0.869

## p1MH 0.591

## p2MH 0.520

## p3MH 0.613

## p1PhH 0.443

## p2PhH 0.526

## p3PhH 0.511

## Spirit1 0.527

## Spirit2 0.587

## SocS1 0.494

## SocS2 0.429

## Eng1 0.369

## Eng2 0.485

## dEng1 0.433

## dEng2 0.401

## MH 0.584

## PhH 0.300

## Spr 0.679

## SSp 0.481

## Eng 0.491

## dEng 0.500

##

## Defined Parameters:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## indirect.EngMH 0.139 0.089 1.564 0.118 0.161 0.161

## indirect.SprMH 0.086 0.122 0.703 0.482 0.099 0.099

## indirect.SSpMH -0.005 0.076 -0.062 0.950 -0.005 -0.005

## indirct.dEngMH -0.328 0.123 -2.680 0.007 -0.379 -0.379

## indirct.EngPhH 0.130 0.099 1.305 0.192 0.156 0.156

## indirct.SprPhH 0.119 0.141 0.842 0.400 0.143 0.143

## indirct.SSpPhH 0.007 0.087 0.076 0.939 0.008 0.008

## indrct.dEngPhH -0.241 0.117 -2.059 0.039 -0.289 -0.289

## direct.MH -0.445 0.205 -2.178 0.029 -0.514 -0.514

## direct.PhH -0.369 0.224 -1.650 0.099 -0.444 -0.444

## total.MH -0.554 0.062 -8.932 0.000 -0.639 -0.639

## total.PhH -0.394 0.083 -4.768 0.000 -0.445 -0.445# struct_pEsts #although creating the object is useful to export as a

# .csv I didn't ask it to print into the bookBelow is script that will export the global fit indices (via tidySEM::table_fit) and the parameter estimates (e.g., factor loadings, structural regression weights, and parameters we requested such as the indirect effect) to .csv files that you can manipulate outside of R.

11.8.1.2 Interpreting the Output

Plotting the results can be useful in checking our work and, if correct, understanding the relations between the variables. The semPlot::semPaths function will produce an initial guess at what we might like that can be further tweaked.

plot_struct1 <- semPlot::semPaths(struct_fit1, what = "col", whatLabels = "stand",

sizeMan = 3, node.width = 1, edge.label.cex = 0.75, style = "lisrel",

mar = c(2, 2, 2, 2), structural = FALSE, curve = FALSE, intercepts = FALSE) Although the code below may look daunting, I find it to be a fairly straightforward way to obtain figures that convey the model we are testing. We first start by identifying the desired location of our latent variables, using numbers to represent their position by “(column, row)”. In the table below, I have mapped my variables.

Although the code below may look daunting, I find it to be a fairly straightforward way to obtain figures that convey the model we are testing. We first start by identifying the desired location of our latent variables, using numbers to represent their position by “(column, row)”. In the table below, I have mapped my variables.

| Grid for Plotting semplot::sempath | ||

|---|---|---|

| (1,1) | (1,2) Eng | (1,3) |

| (2,1) | (2,2) Spr | (2,3) |

| (3,1) | (3,2) | (3,3) MH |

| (4,1) GRM | (4,2) | (4,3) |

| (5,1) | (5,2) | (5,3) PhH |

| (6,1) | (6,2) Ssp | (6,3) |

| (7,1) | (7,2) dEng | (7,3) |

We place these values along with the names of our latent variables in to the semptools::layout_matrix function.

# IMPORTANT: Must use the node names (take directly from the SemPlot)

# assigned by SemPlot You can change them as the last thing

m1_msmt <- semptools::layout_matrix(GRM = c(4, 1), Eng = c(1, 2), Spr = c(2,

2), SSp = c(6, 2), dEn = c(7, 2), MH = c(3, 3), PhH = c(5, 3))Next we provide instruction on the direction (up, down, left, right) we want the indicator/observed variables to face. We identify the direction by the location of each of our latent variables. For example, in the code below we want the indicators for the REM variable (2,1) to face left.

# tell where you want the indicators to face

m1_point_to <- semptools::layout_matrix(left = c(4, 1), up = c(1, 2), down = c(2,

2), up = c(6, 2), down = c(7, 2), right = c(3, 3), right = c(5, 3))The next two sets of code work together to specify the order of the observed variables for each factor. in the top set of code the variable names indicate the order in which they will appear (i.e., p1R, p2R, p3R). In the second set of code, the listing the variable name three times (i.e., REM, REM, REM) serves as a placeholder for each of the indicators.

It is critical to note that we need to use the abbreviated variable names assigned by semTools::semPaths and not necessarily the names that are in the dataframe.

# the next two codes -- indicator_order and indicator_factor are

# paired together, they specify the order of observed variables for

# each factor

m1_indicator_order <- c("p1G", "p2G", "p3G", "p1M", "p2M", "p3M", "p1P",

"p2P", "p3P", "Sp1", "Sp2", "SS1", "SS2", "En1", "En2", "dE1", "dE2")

m1_indicator_factor <- c("GRM", "GRM", "GRM", "MH", "MH", "MH", "PhH",

"PhH", "PhH", "Spr", "Spr", "SSp", "SSp", "Eng", "Eng", "dEn", "dEn")The next two sets of codes provide some guidance about how far away the indicator (square/rectangular) variables should be away from the latent (oval/circular) variables. Subsequently, the next set of values indicate how far away each of the indicator (square/rectangular) variables should be spread apart.

# next set of code pushes the indicator variables away from the

# factor

m1_indicator_push <- c(GRM = 0.5, MH = 1, PhH = 1, Spr = 2, SSp = 1.5,

Eng = 1.5, dEn = 2)

# spreading the boxes away from each other

m1_indicator_spread <- c(GRM = 0.5, MH = 2.5, PhH = 2.5, Spr = 1, SSp = 1,

Eng = 1, dEn = 1)Finally, we can feed all of the objects that whole these instructions into the semptools::sem_set_layout function. If desired, we can use the semptools::change_node_label function to rename the latent variables. Again, make sure to use the variable names that semPlot::semPaths has assigned.

plot1 <- semptools::set_sem_layout(plot_struct1, indicator_order = m1_indicator_order,

indicator_factor = m1_indicator_factor, factor_layout = m1_msmt, factor_point_to = m1_point_to,

indicator_push = m1_indicator_push, indicator_spread = m1_indicator_spread)

# changing node labels

plot1 <- semptools::change_node_label(plot1, c(GRM = "GRMS", MH = "mHealth",

PhH = "phHealth", Eng = "Engmt", dEn = "dEngmt", Spr = "Spirit", SSp = "SocSup"),

label.cex = 1.1)

# adding stars to indicate significant paths

plot1 <- semptools::mark_sig(plot1, struct_fit1)

plot(plot1)

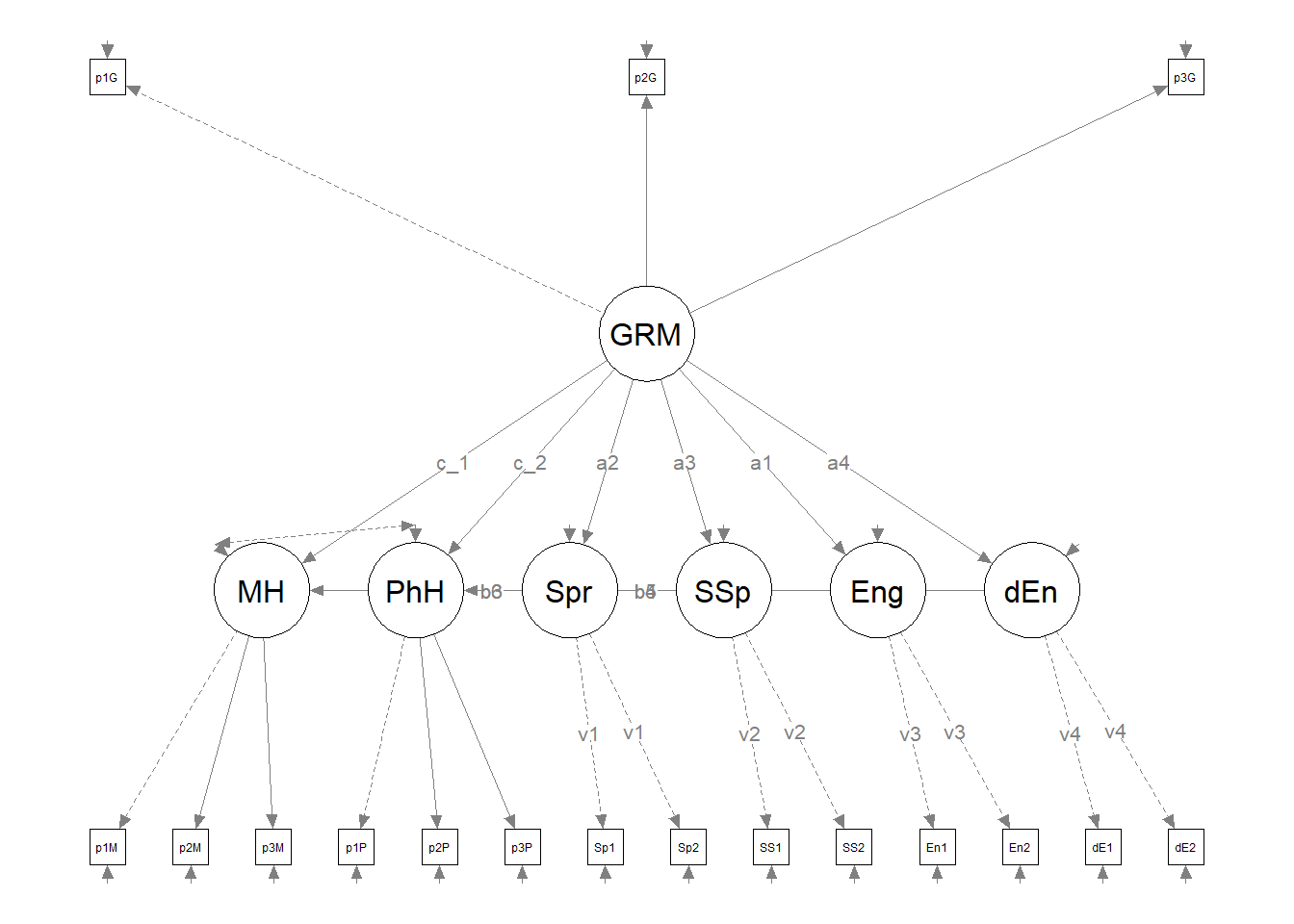

It can be useful to have a representation of the model without the results. This set of code produces those results. It does so by including only the name of the fitted object into the semPlot::semPaths function. Then it uses all the objects we just created as instructions for the figure’s appearance.

# Code to plot the theoretical model (in case you don't want to print

# the results on the graph):

plot1_theoretical <- semPlot::semPaths(struct_fit1, sizeMan = 3, node.width = 1,

edge.label.cex = 0.75, style = "lisrel", mar = c(2, 2, 2, 2), structural = FALSE,

curve = FALSE, intercepts = FALSE)

plot1_theoretical <- semptools::set_sem_layout(plot1_theoretical, indicator_order = m1_indicator_order,

indicator_factor = m1_indicator_factor, factor_layout = m1_msmt, factor_point_to = m1_point_to,

indicator_push = m1_indicator_push, indicator_spread = m1_indicator_spread)

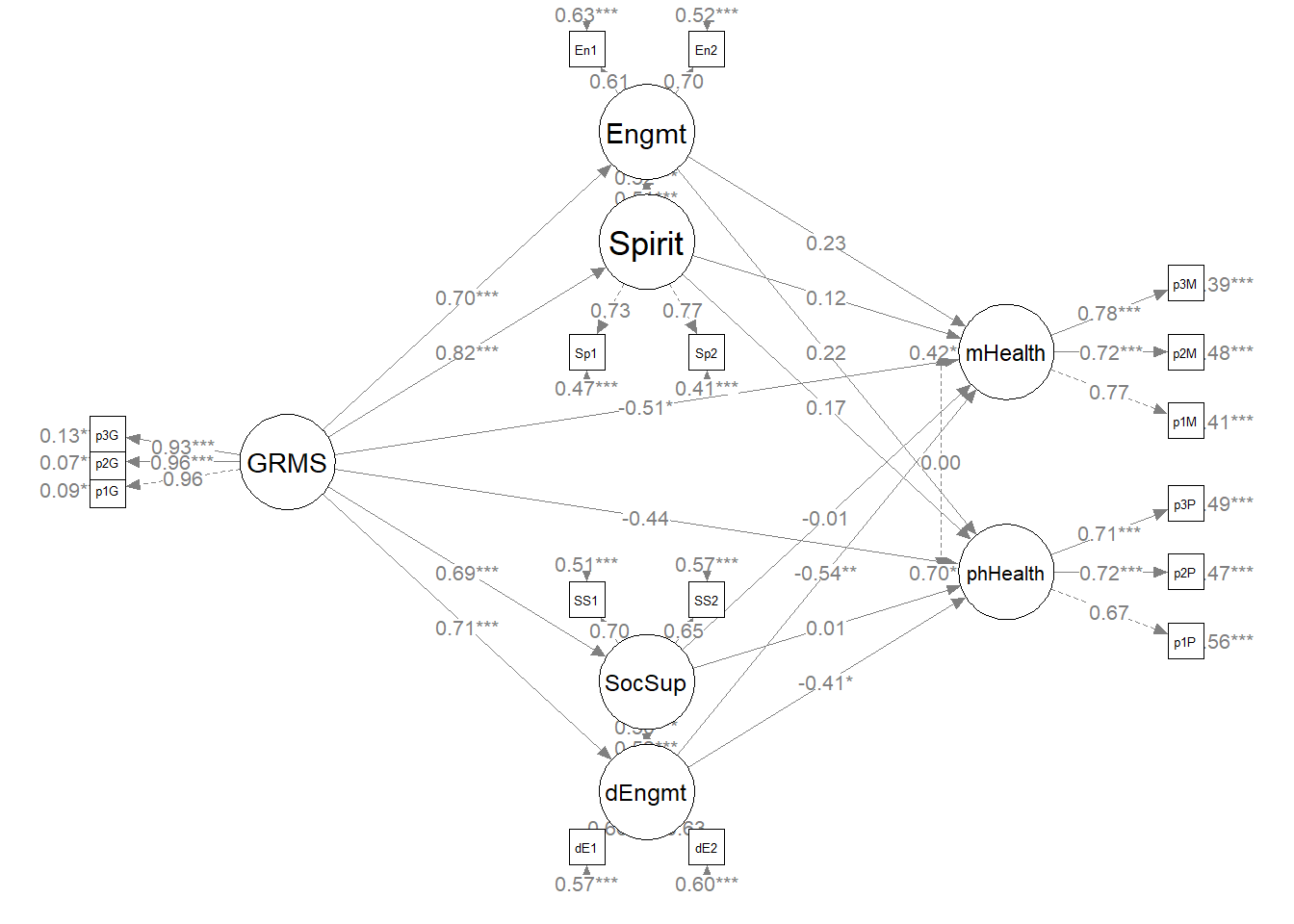

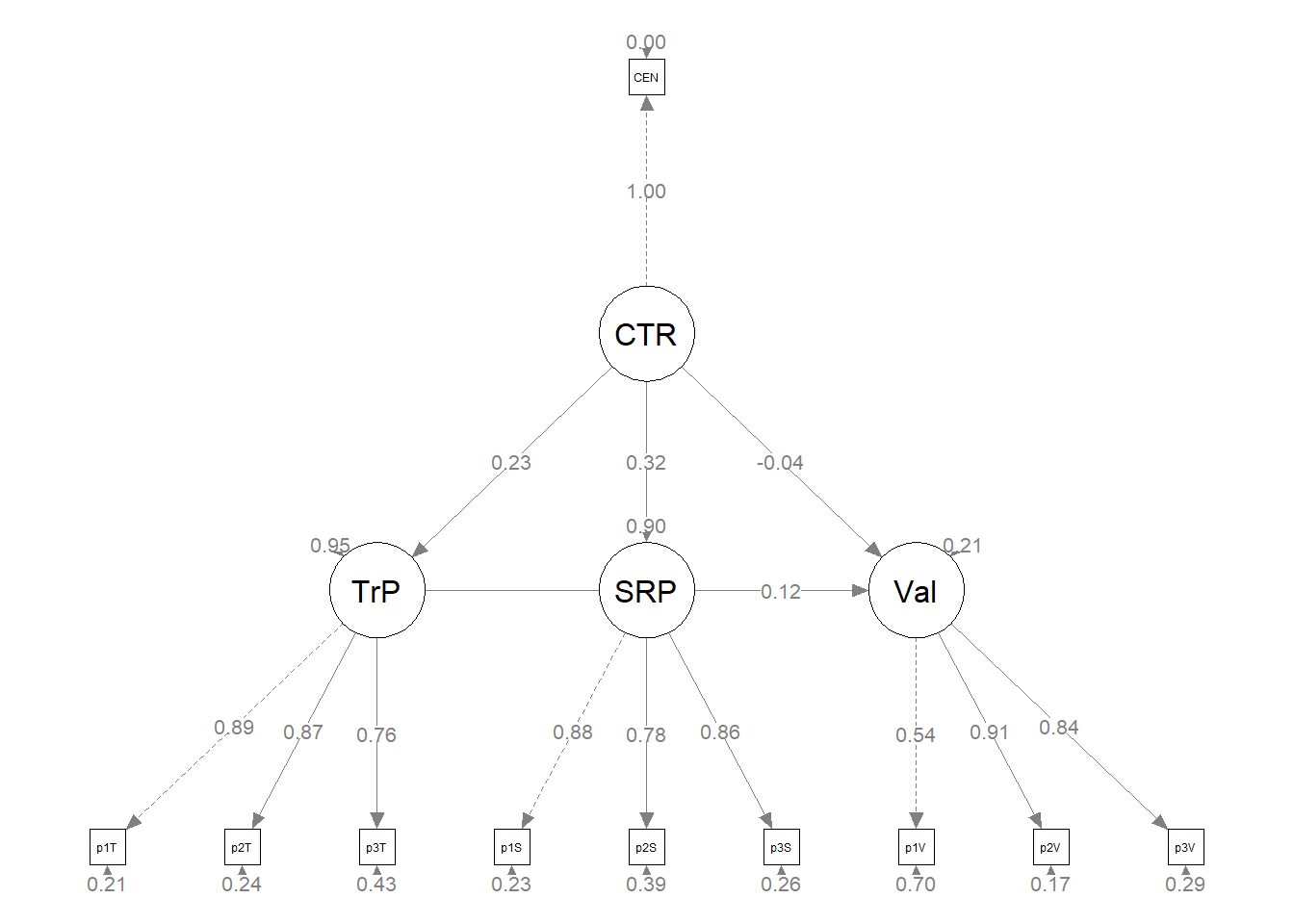

plot(plot1_theoretical) The statistical string for the global fit indices can be represented this way: \(\chi^2(109) = 120.324, p = 0.216, CFI = 0.994, RMSEA = 0.021, 90CI[0.000, 0.041], SRMR = 0.044\).

The statistical string for the global fit indices can be represented this way: \(\chi^2(109) = 120.324, p = 0.216, CFI = 0.994, RMSEA = 0.021, 90CI[0.000, 0.041], SRMR = 0.044\).

Tabling the regression weights will assist us in understanding the relations between the variables. To be consistent with my figure, in this table I have included the standardized results (i.e., \(\beta\)).

Table 2

| Model Coefficients Assessing the Effect of Gendered Racial Microaggressions on Mental and Physical Health through Coping Strategies |

|---|

| Predictor | \(B\) | \(SE_{B}\) | \(p\) | \(\beta\) | \(R^2\) |

|---|---|---|---|---|---|

| Engagement (M1) | .49 | ||||

| Gendered Racial Microaggressions (\(a_1\)) | 0.392 | 0.041 | < 0.001 | 0.701 | |

| Spirituality (M2) | .68 | ||||

| Gendered Racial Microaggressions (\(a_2\)) | 0.554 | 0.040 | < 0.001 | 0.824 | |

| Social Support (M3) | .48 | ||||

| Gendered Racial Microaggressions (\(a_3\)) | 0.406 | 0.042 | < 0.001 | 0.694 | |

| Disengagement (M4) | .50 | ||||

| Gendered Racial Microaggressions (\(a_4\)) | 0.386 | 0.041 | < 0.001 | 0.707 | |

| Mental Health (DV1) | .58 | ||||

| Engagement (\(b_1\)) | 0.355 | 0.223 | 0.111 | 0.229 | |

| Spirituality (\(b_1\)) | 0.155 | 0.219 | 0.481 | 0.120 | |

| Social Support (\(b_3\)) | -0.012 | 0.187 | 0.950 | -0.008 | |

| Disengagement (\(b_4\)) | -0.850 | 0.303 | 0.005 | -0.536 | |

| Gendered Racial Microaggressions (\(c'_1\)) | -0.445 | 0.205 | 0.029 | -0.514 | |

| Physical Health (DV2) | .30 | ||||

| Engagement (\(b_5\)) | 0.330 | 0.250 | 0.186 | 0.222 | |

| Spirituality (\(b_6\)) | 0.215 | 0.254 | 0.398 | 0.174 | |

| Social Support (\(b_7\)) | 0.016 | 0.215 | 0.939 | 0.012 | |

| Disengagement (\(b_8\)) | -0.624 | 0.295 | 0.034 | -0.409 | |

| Gendered Racial Microaggressions (\(c'_2\)) | -0.369 | 0.224 | 0.099 | -0.444 |

| Effects | \(B\) | \(SE_{B}\) | \(p\) | \(\beta\) | 95% CI |

|---|---|---|---|---|---|

| Indirect 3(\(a_1*b_1\)) | 0.139 | 0.089 | 0.118 | 0.161 | -0.035, 0.314 |

| Indirect 1(\(a_2*b_2\)) | 0.086 | 0.122 | 0.482 | 0.099 | -0.153, 0.325 |

| Indirect 2(\(a_3*b_3\)) | -0.005 | 0.076 | 0.950 | -0.005 | -0.154, 0.144 |

| Indirect 4(\(a_4*b_4\)) | -0.328 | 0.123 | 0.007 | -0.379 | -0.569, -0.088 |

| Direct 1 (\(c'_1\)) | -0.445 | 0.205 | 0.029 | -0.514 | -0.846, -0.045 |

| Total 1 (\(c_1\)) | -0.554 | 0.062 | < 0.001 | -0.639 | -0.675, -0.432 |

| Indirect 7(\(a_1*b_5\)) | 0.130 | 0.099 | 0.192 | 0.156 | -0.065, 0.324 |

| Indirect 5(\(a_2*b_6\)) | 0.119 | 0.141 | 0.400 | 0.143 | -0.158, 0.396 |

| Indirect 6(\(a_3*b_7\)) | 0.007 | 0.087 | 0.939 | 0.008 | -0.165, 0.178 |

| Indirect 8(\(a_4*b_8\)) | -0.241 | 0.117 | 0.039 | -0.289 | -0.470, -0.012 |

| Direct 2 (\(c'_2\)) | -0.369 | 0.224 | 0.099 | -0.444 | -0.808, 0.069 |

| Total 2 (\(c_2\)) | -0.394 | 0.083 | 0.000 | -0.445 | -0.556, -0.232 |

| Note. The significance of the indirect effects was calculated with bootstrapped, bias-corrected, confidence intervals (.95). |

As we can see, our model accounts for 48-68% of the variance in the mediators and 58% and 30% of the variance in the mental and physical health variables, respectively. Only one indirect effect, gendered racial microagressions to mental health, via disengagement coping. Inspection of the specific paths may provide some insight into this. Specifically, all of the a paths are statistically significant (i.e., gendered racial microaggressions predicting each of the four coping approaches) but only two of the b paths (i.e., disengagement coping to mental and physical health, respectively).

Here’s how I might write up this section of the analysis:

Our structural model was a parallel mediation, predicting mental and physical health directly from gendered racial microaggressions and indirectly through four coping strategies (i.e., spirituality, social support, engagement, disengagement). Results of the global fit indices suggested adequate fit \(\chi^2(109) = 120.324, p = 0.216, CFI = 0.994, RMSEA = 0.021, 90CI[0.000, 0.041], SRMR = 0.044\). As shown in Table 2 only two of the eight indirect effects was statistically significant. For both, disengagement coping mediated the effect of gendered racial microaggressions on mental health \((B = -0.328, p = 0.007)\) and physical health \((B = -0.241, p = 0.039)\). The model accunted for 48-68% of the variance in the mediators and 58% and 30% of the variance in mental and physical health, respectively.

With such strong overall fit, if I were the researcher, I would be inclined to stop here. Given that this is a lecture on model building and trimming, let’s proceed to see if respecification is indicated.

11.9 Model Building

Recall that in model building we start with a more parsimonious model and consider “adding paths.” This requires that our original model be overidentified with positive degrees of freedom. Earlier we determined that we had 8 degrees of freedom.

Modification indices are a tool that can help us determine where diretional paths or bidirectional covariances might improve model fit. A modification index is produced for each element of the model that is constrained to be zero (i.e., does not have a path or covariance). These modification indices are presented in the metric of the chi-square test and will tell us “by how much the chi-square value will decrease” if the two elements are freed to relate (i.e., by a path or covariance). In a one-degree chi-square test, statistically significant change occurs when the modification index is greater than 3.841. Thus, when researchers use the lavaan::modindices() function, they typically set a minimum value of 4.0 (rounding up from 3.841).

When inspecting the results, we will look for the parameters with the highest values (that are clearly above 4.0) that would be theoretically defensible. Be aware that this procedure may “suggest” many nonsensible relations.

## lhs op rhs mi epc sepc.lv sepc.all sepc.nox

## 125 PhH =~ p2MH 12.407 0.432 0.261 0.246 0.246

## 264 p2MH ~~ dEng2 8.859 -0.087 -0.087 -0.243 -0.243

## 362 SSp ~ Spr 8.339 0.468 0.537 0.537 0.537

## 345 Spr ~~ SSp 8.339 0.036 0.422 0.422 0.422

## 357 Spr ~ SSp 8.339 0.381 0.332 0.332 0.332

## 159 SSp =~ Spirit1 8.328 0.304 0.129 0.192 0.192

## 184 dEng =~ p2MH 7.683 -0.944 -0.374 -0.352 -0.352

## 303 Spirit1 ~~ Spirit2 7.539 2.239 2.239 11.841 11.841

## 325 Eng1 ~~ Eng2 7.538 0.635 0.635 2.853 2.853

## 372 PhH ~ MH 7.538 0.453 0.471 0.471 0.471

## 330 dEng1 ~~ dEng2 7.538 0.140 0.140 0.639 0.639

## 32 MH ~~ PhH 7.538 0.074 0.363 0.363 0.363

## 371 MH ~ PhH 7.538 0.292 0.280 0.280 0.280

## 133 PhH =~ dEng1 7.349 0.210 0.127 0.210 0.210

## 196 p1GRMS ~~ p3GRMS 7.107 0.022 0.022 0.384 0.384

## 245 p1MH ~~ Spirit1 6.205 -0.052 -0.052 -0.215 -0.215

## 119 MH =~ dEng1 6.170 0.186 0.117 0.194 0.194

## 241 p1MH ~~ p3MH 5.439 0.110 0.110 0.337 0.337

## 137 Spr =~ p3GRMS 5.237 -0.238 -0.116 -0.165 -0.165

## 185 dEng =~ p3MH 5.180 0.758 0.300 0.301 0.301

## 232 p3GRMS ~~ Spirit1 5.110 -0.022 -0.022 -0.188 -0.188

## 221 p2GRMS ~~ SocS2 5.098 0.022 0.022 0.213 0.213

## 351 Eng ~ Spr 4.830 0.351 0.421 0.421 0.421

## 346 Spr ~~ Eng 4.830 0.027 0.334 0.334 0.334

## 356 Spr ~ Eng 4.830 0.318 0.265 0.265 0.265

## 304 Spirit1 ~~ SocS1 4.473 0.036 0.036 0.183 0.183

## 16 dEng =~ dEng1 4.197 -0.290 -0.115 -0.191 -0.191

## 17 dEng =~ dEng2 4.197 0.290 0.115 0.183 0.183Because we used the command, “sort=TRUE” the modification indices (in the “mi” column) are presented from highest to lowest. Having familiarity with lavaan syntax can be useful in deciphering what is being suggested.

The first modification index (12.40) relates to the factor loadings in the measurement model. We know this because of the “=~” operator. It suggests that we free p2MH (the second parcel for mental health) to PhH (the physical health latent variable). I would consider this “nonsensible.” While this might improve model fit, this suggestion relates to our measurement model – and our measurement model had terrific fit with no need for further improvement.

The second modification index (8.859) is accompanied by the “~~” operator. This means it is suggesting that we free the second parcel for mental health to covary (correlate) with item 2 for disengagement coping. This is not a sensible suggestion. In fact the only sensible suggested covariation is to free the dependent variables (PhH and MH) to covary (correlate).

The single tilda (~) provides suggestions for regression paths. Note that the third and fifth recommendations are to either predict spiritual coping from social support coping or the vice versa. Further, the fourth recommendation is to allow those two variables to covary. Either of these three options would reduce our chi-square by 8.339. Keep in mind that the lavaan::modindices package will produce all possible combinations of ways to improve model fit, even if they are redundant.

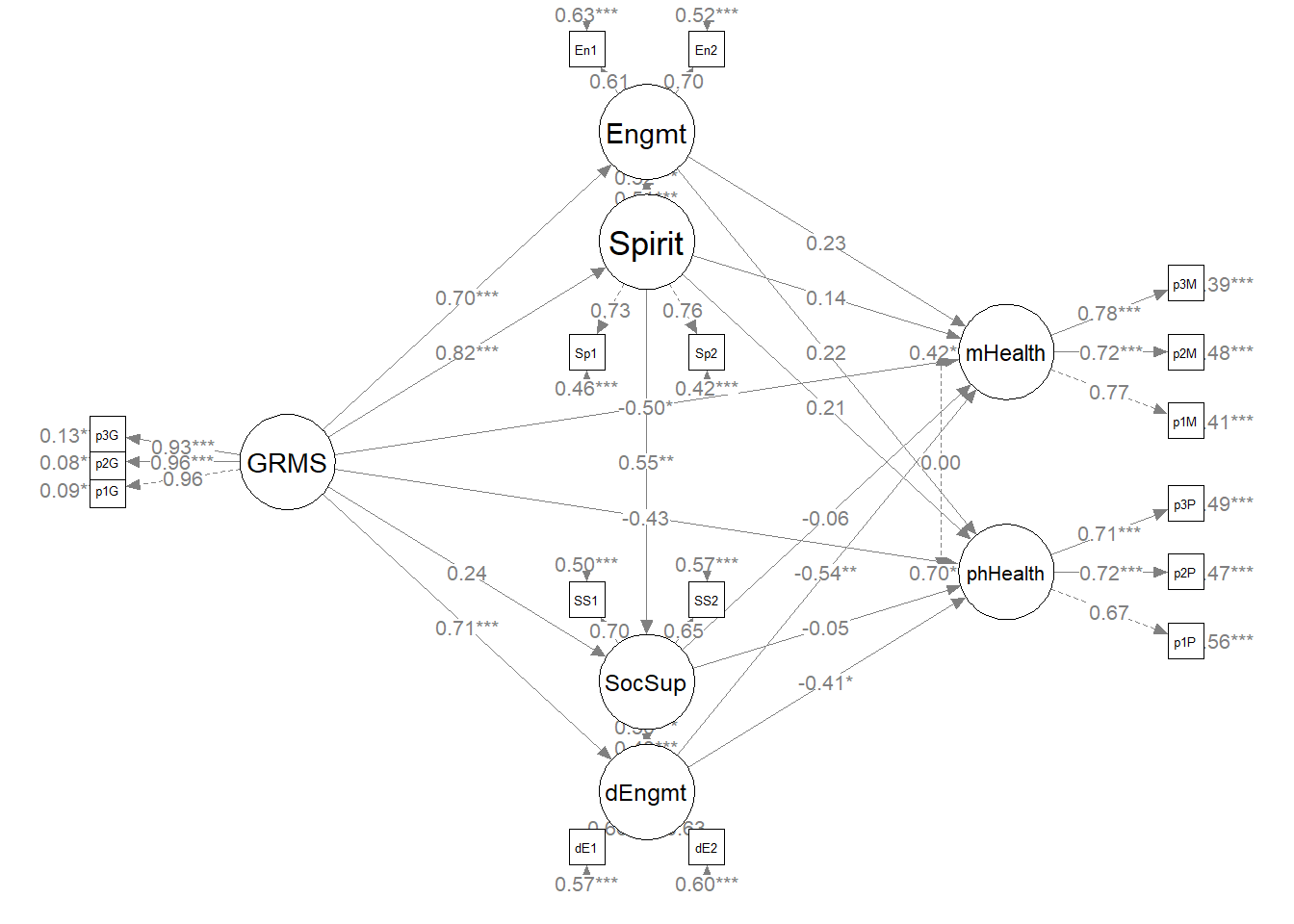

I am intrigued by this suggested relation between spiritual coping and social support. If we added this path, we could test for the significance of an serially mediated effect from gendered racial microaggressions, to spiritual support, through social support, to mental and physical health, respectively.

struct_mod2 <- "

##measurement model

GRMS =~ p1GRMS + p2GRMS + p3GRMS

MH =~ p1MH + p2MH + p3MH

PhH =~ p1PhH + p2PhH + p3PhH

Spr =~ v1*Spirit1 + v1*Spirit2

SSp =~ v2*SocS1 + v2*SocS2

Eng =~ v3*Eng1 + v3*Eng2

dEng =~ v4*dEng1 + v4*dEng2

#structural model with labels for calculation of the indirect effect

Eng ~ a1*GRMS

Spr ~ a2*GRMS

SSp ~ a3*GRMS

dEng ~ a4*GRMS

MH ~ c_p1*GRMS + b1*Eng + b2*Spr + b3*SSp + b4*dEng

PhH ~ c_p2*GRMS + b5*Eng + + b6*Spr + b7*SSp + b8*dEng

SSp ~ d1*Spr

#cov

MH ~~ 0*PhH

#calculations

indirect.EngMH := a1*b1

indirect.SprMH := a2*b2

indirect.SSpMH := a3*b3

indirect.dEngMH := a4*b4

indirect.EngPhH := a1*b5

indirect.SprPhH := a2*b6

indirect.SSpPhH := a3*b7

indirect.dEngPhH := a4*b8

serial.MH := a2*d1*b3

serial.PH := a2*d1*b7

direct.MH := c_p1

direct.PhH := c_p2

total.MH := c_p1 + (a1*b1) + (a2*b2) + (a3*b3) + (a4*b4) + (a2*d1*b3)

total.PhH := c_p2 + (a1*b5) + (a1*b6) + (a1*b7) + (a1*b8) + (a2*d1*b7)

"

set.seed(230916) #needed for reproducibility especially when specifying bootstrapped confidence intervals

struct_fit2 <- lavaan::sem(struct_mod2, data = dfLewis, missing = "fiml")

struct_summary2 <- lavaan::summary(struct_fit2, fit.measures = TRUE, standardized = TRUE,

rsq = TRUE)

struct_pEsts2 <- lavaan::parameterEstimates(struct_fit2, boot.ci.type = "bca.simple",

standardized = TRUE)

struct_summary2## lavaan 0.6.17 ended normally after 121 iterations

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 62

##

## Number of observations 231

## Number of missing patterns 1

##

## Model Test User Model:

##

## Test statistic 111.657

## Degrees of freedom 108

## P-value (Chi-square) 0.385

##

## Model Test Baseline Model:

##

## Test statistic 2104.157

## Degrees of freedom 136

## P-value 0.000

##

## User Model versus Baseline Model:

##

## Comparative Fit Index (CFI) 0.998

## Tucker-Lewis Index (TLI) 0.998

##

## Robust Comparative Fit Index (CFI) 0.998

## Robust Tucker-Lewis Index (TLI) 0.998

##

## Loglikelihood and Information Criteria:

##

## Loglikelihood user model (H0) -3385.514

## Loglikelihood unrestricted model (H1) -3329.686

##

## Akaike (AIC) 6895.029

## Bayesian (BIC) 7108.458

## Sample-size adjusted Bayesian (SABIC) 6911.954

##

## Root Mean Square Error of Approximation:

##

## RMSEA 0.012

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.036

## P-value H_0: RMSEA <= 0.050 0.999

## P-value H_0: RMSEA >= 0.080 0.000

##

## Robust RMSEA 0.012

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.036

## P-value H_0: Robust RMSEA <= 0.050 0.999

## P-value H_0: Robust RMSEA >= 0.080 0.000

##

## Standardized Root Mean Square Residual:

##

## SRMR 0.042

##

## Parameter Estimates:

##

## Standard errors Standard

## Information Observed

## Observed information based on Hessian

##

## Latent Variables:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## GRMS =~

## p1GRMS 1.000 0.725 0.955

## p2GRMS 1.014 0.030 34.076 0.000 0.736 0.962

## p3GRMS 0.903 0.030 29.918 0.000 0.655 0.933

## MH =~

## p1MH 1.000 0.628 0.769

## p2MH 1.217 0.126 9.664 0.000 0.765 0.721

## p3MH 1.245 0.113 11.045 0.000 0.783 0.783

## PhH =~

## p1PhH 1.000 0.604 0.666

## p2PhH 1.006 0.128 7.878 0.000 0.608 0.725

## p3PhH 1.036 0.135 7.662 0.000 0.626 0.715

## Spr =~

## Spirit1 (v1) 1.000 0.487 0.731

## Spirit2 (v1) 1.000 0.487 0.759

## SSp =~

## SocS1 (v2) 1.000 0.425 0.704

## SocS2 (v2) 1.000 0.425 0.654

## Eng =~

## Eng1 (v3) 1.000 0.406 0.607

## Eng2 (v3) 1.000 0.406 0.696

## dEng =~

## dEng1 (v4) 1.000 0.396 0.658

## dEng2 (v4) 1.000 0.396 0.633

##

## Regressions:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## Eng ~

## GRMS (a1) 0.392 0.041 9.498 0.000 0.700 0.700

## Spr ~

## GRMS (a2) 0.552 0.040 13.784 0.000 0.822 0.822

## SSp ~

## GRMS (a3) 0.138 0.109 1.266 0.205 0.236 0.236

## dEng ~

## GRMS (a4) 0.386 0.041 9.400 0.000 0.707 0.707

## MH ~

## GRMS (c_p1) -0.431 0.193 -2.236 0.025 -0.498 -0.498

## Eng (b1) 0.351 0.222 1.579 0.114 0.227 0.227

## Spr (b2) 0.185 0.277 0.668 0.504 0.144 0.144

## SSp (b3) -0.082 0.237 -0.347 0.729 -0.055 -0.055

## dEng (b4) -0.852 0.303 -2.815 0.005 -0.537 -0.537

## PhH ~

## GRMS (c_p2) -0.354 0.210 -1.690 0.091 -0.425 -0.425

## Eng (b5) 0.325 0.249 1.303 0.192 0.219 0.219

## Spr (b6) 0.257 0.322 0.797 0.425 0.207 0.207

## SSp (b7) -0.071 0.273 -0.259 0.795 -0.050 -0.050

## dEng (b8) -0.626 0.294 -2.129 0.033 -0.411 -0.411

## SSp ~

## Spr (d1) 0.477 0.180 2.646 0.008 0.548 0.548

##

## Covariances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .MH ~~

## .PhH 0.000 0.000 0.000

##

## Intercepts:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .p1GRMS 2.592 0.050 51.897 0.000 2.592 3.415

## .p2GRMS 2.545 0.050 50.567 0.000 2.545 3.327

## .p3GRMS 2.579 0.046 55.829 0.000 2.579 3.673

## .p1MH 3.582 0.054 66.611 0.000 3.582 4.383

## .p2MH 2.866 0.070 41.023 0.000 2.866 2.699

## .p3MH 3.035 0.066 46.140 0.000 3.035 3.036

## .p1PhH 3.128 0.060 52.384 0.000 3.128 3.447

## .p2PhH 2.652 0.055 48.056 0.000 2.652 3.162

## .p3PhH 3.067 0.058 53.258 0.000 3.067 3.504

## .Spirit1 2.511 0.044 57.292 0.000 2.511 3.770

## .Spirit2 2.437 0.042 57.677 0.000 2.437 3.795

## .SocS1 2.550 0.040 64.289 0.000 2.550 4.230

## .SocS2 2.758 0.043 64.537 0.000 2.758 4.246

## .Eng1 2.437 0.044 55.375 0.000 2.437 3.643

## .Eng2 2.515 0.038 65.530 0.000 2.515 4.312

## .dEng1 2.502 0.040 63.177 0.000 2.502 4.157

## .dEng2 2.455 0.041 59.612 0.000 2.455 3.922

##

## Variances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .p1GRMS 0.050 0.007 6.695 0.000 0.050 0.087

## .p2GRMS 0.044 0.007 6.058 0.000 0.044 0.075

## .p3GRMS 0.064 0.008 8.355 0.000 0.064 0.129

## .p1MH 0.273 0.037 7.377 0.000 0.273 0.409

## .p2MH 0.542 0.067 8.062 0.000 0.542 0.481

## .p3MH 0.387 0.055 7.083 0.000 0.387 0.387

## .p1PhH 0.459 0.058 7.914 0.000 0.459 0.557

## .p2PhH 0.334 0.049 6.824 0.000 0.334 0.475

## .p3PhH 0.375 0.054 6.984 0.000 0.375 0.489

## .Spirit1 0.206 0.026 8.020 0.000 0.206 0.465

## .Spirit2 0.175 0.024 7.399 0.000 0.175 0.424

## .SocS1 0.183 0.025 7.198 0.000 0.183 0.504

## .SocS2 0.241 0.029 8.222 0.000 0.241 0.573

## .Eng1 0.282 0.033 8.560 0.000 0.282 0.631

## .Eng2 0.175 0.026 6.694 0.000 0.175 0.515

## .dEng1 0.205 0.029 7.123 0.000 0.205 0.567

## .dEng2 0.235 0.030 7.705 0.000 0.235 0.599

## GRMS 0.526 0.054 9.790 0.000 1.000 1.000

## .MH 0.164 0.042 3.926 0.000 0.416 0.416

## .PhH 0.255 0.058 4.392 0.000 0.698 0.698

## .Spr 0.077 0.019 4.044 0.000 0.324 0.324

## .SSp 0.078 0.022 3.576 0.000 0.431 0.431

## .Eng 0.084 0.022 3.819 0.000 0.510 0.510

## .dEng 0.078 0.024 3.333 0.001 0.500 0.500

##

## R-Square:

## Estimate

## p1GRMS 0.913

## p2GRMS 0.925

## p3GRMS 0.871

## p1MH 0.591

## p2MH 0.519

## p3MH 0.613

## p1PhH 0.443

## p2PhH 0.525

## p3PhH 0.511

## Spirit1 0.535

## Spirit2 0.576

## SocS1 0.496

## SocS2 0.427

## Eng1 0.369

## Eng2 0.485

## dEng1 0.433

## dEng2 0.401

## MH 0.584

## PhH 0.302

## Spr 0.676

## SSp 0.569

## Eng 0.490

## dEng 0.500

##

## Defined Parameters:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## indirect.EngMH 0.138 0.089 1.549 0.121 0.159 0.159

## indirect.SprMH 0.102 0.154 0.667 0.505 0.118 0.118

## indirect.SSpMH -0.011 0.032 -0.350 0.726 -0.013 -0.013

## indirct.dEngMH -0.329 0.122 -2.691 0.007 -0.380 -0.380

## indirct.EngPhH 0.127 0.099 1.287 0.198 0.153 0.153

## indirct.SprPhH 0.142 0.178 0.794 0.427 0.170 0.170

## indirct.SSpPhH -0.010 0.037 -0.264 0.792 -0.012 -0.012

## indrct.dEngPhH -0.242 0.117 -2.072 0.038 -0.290 -0.290

## serial.MH -0.022 0.064 -0.336 0.737 -0.025 -0.025

## serial.PH -0.019 0.074 -0.253 0.800 -0.022 -0.022

## direct.MH -0.431 0.193 -2.236 0.025 -0.498 -0.498

## direct.PhH -0.354 0.210 -1.690 0.091 -0.425 -0.425

## total.MH -0.554 0.062 -8.934 0.000 -0.639 -0.639

## total.PhH -0.418 0.134 -3.120 0.002 -0.472 -0.472# struct_pEsts2 #although creating the object is useful to export as

# a .csv I didn't ask it to print into the bookCreating a figure will help us with the conceptual understanding of what we’ve just done (and will also help us check our work).

Let’s first create a fresh plot from se

plot_struct2 <- semPlot::semPaths(struct_fit2, what = "col", whatLabels = "stand",

sizeMan = 3, node.width = 1, edge.label.cex = 0.75, style = "lisrel",

mar = c(2, 2, 2, 2), structural = FALSE, curve = FALSE, intercepts = FALSE) Because we haven’t added or deleted variables (only 1 path) and we want them to stay in the same location with the same orientation of paths and covariances, we should be able to recycle some of the diagram code we created earlier

Because we haven’t added or deleted variables (only 1 path) and we want them to stay in the same location with the same orientation of paths and covariances, we should be able to recycle some of the diagram code we created earlier

plot2 <- semptools::set_sem_layout(plot_struct2, indicator_order = m1_indicator_order,

indicator_factor = m1_indicator_factor, factor_layout = m1_msmt, factor_point_to = m1_point_to,

indicator_push = m1_indicator_push, indicator_spread = m1_indicator_spread)

# changing node labels