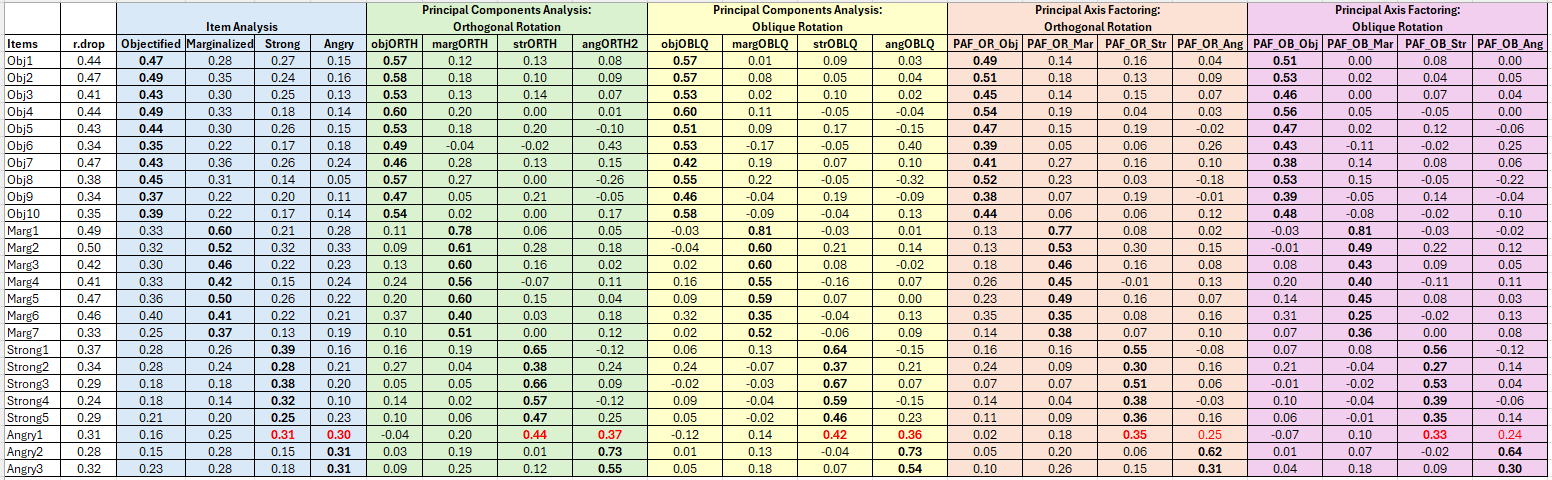

Chapter 9 Principal Axis Factoring

This is the second lesson of exploratory principal components analysis (PCA) and factor analysis (EFA/PAF). This time the focus is on actual factor analysis. There are numerous approaches to this process (e.g., principal components analysis, parallel analyses). In this lesson I will demonstrate principal axis factoring (PAF).

9.2 Exploratory Factor Analysis (with a quick contrast to PCA)

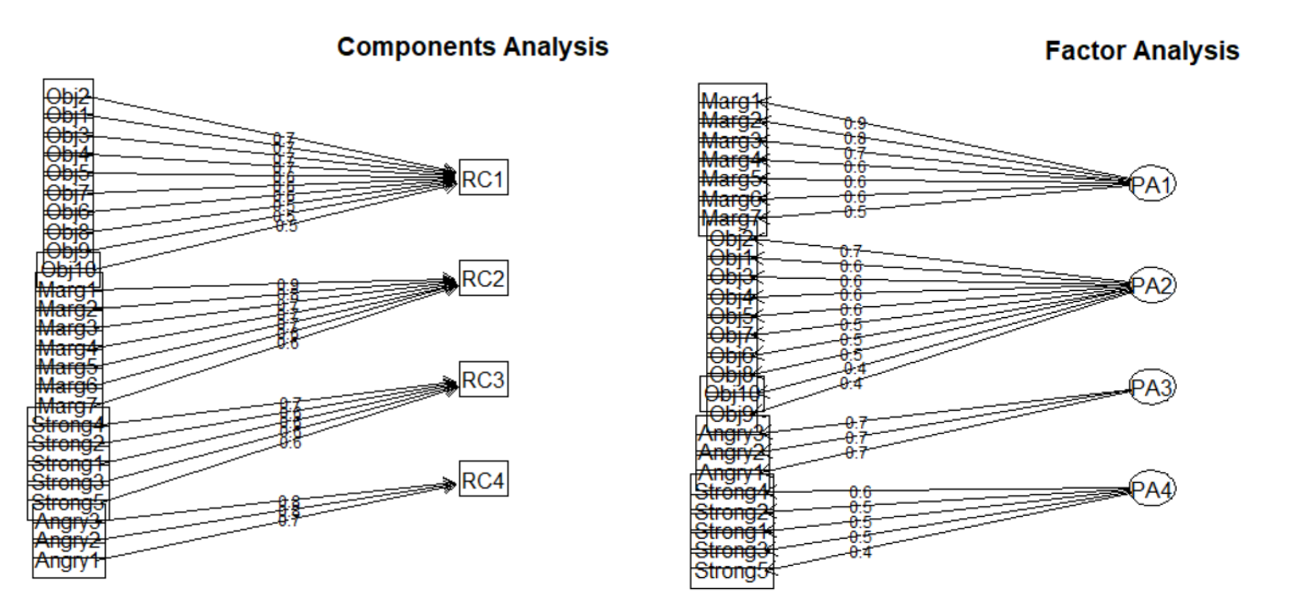

Whereas principal components analysis (PCA) is a regression analysis technique, principal factor analysis is a latent variable model (revelle_william_chapter_nodate?).

Exploratory factor analysis has a rich history. In 1904, Spearman used it for a single factor. In 1947, Thurstone generalized it to multiple factors. Factor analysis is frequently used and controversial.

Factor analysis and principal components are commonly confused:

Principal components is

- linear sums of variables,

- solved with an eigenvalue or singular decomposition,

- represented by an \(n*n\) matrix in terms of the first k components and attempts to reproduce all of the \(R\) matrix, and

- paths which point from the items to a total scale score – all represented as observed/manifest (square) variables.

Factor analysis is

- linear sums of unknown factors,

- estimated as best fitting solutions, normally through iterative procedures, and

- controversial. Because:

- At the structural level (i.e., covariance or correlation matrix), there are normally more observed variables than parameters to estimate them and the procedure seeks to find the best fitting solution using ordinary least squares, weighted least squares, or maximum likelihood.

- At the data level, although scores can be estimated, the model is indeterminate.

- This leads some to argue for using principal components; however, fans of factor analysis suggest that it is useful for constructing and evaluating theories.

- an attempt to model only the common part of the matrix, which means all of the off-diagonal elements and the common part of the diagonal (the communalities); the uniquenesses are the non-common (leftover) part

- Stated another way, the factor model partitions the correlation or covariance matrix into

- common factors, \(FF'\), and

- that which is unique, \(U^2\) (the diagonal matrix of uniquenesses)

- Stated another way, the factor model partitions the correlation or covariance matrix into

- paths which point from the latent variable (LV) representing the factor (oval) to the items (squares) illustrating that the factor/LV “causes” the item’s score

Our focus today is on the PAF approach to scale construction. By utilizing the same research vignette as in the PCA lesson, we can identify similarities in differences in the approach, results, and interpretation. Let’s first take a look at the workflow for PAF.

9.3 PAF Workflow

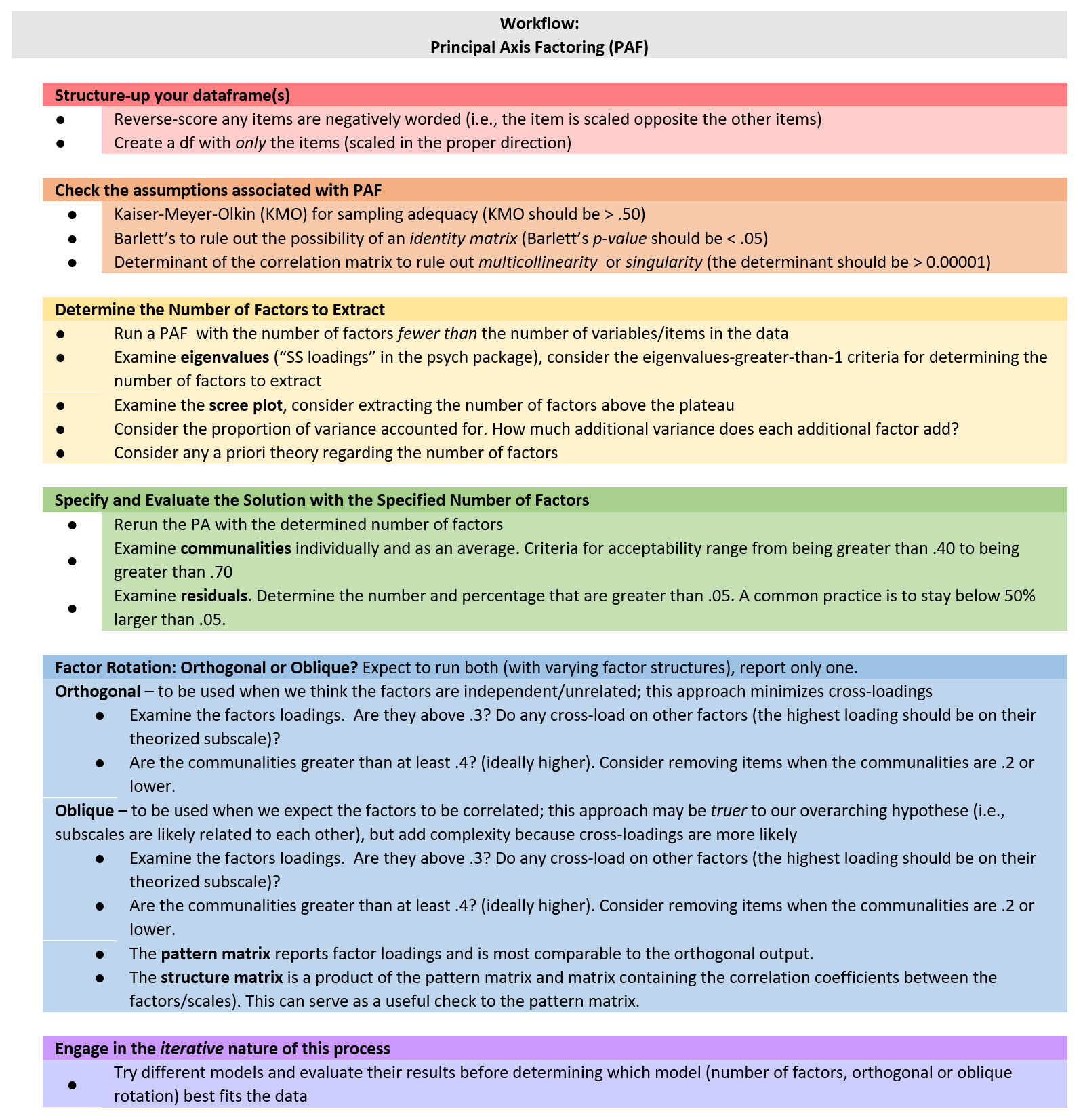

Below is a screenshot of the workflow. The original document is located in the GitHub site that hosts the ReCentering Psych Stats: Psychometrics OER. You may find it refreshing that, with the exception of the change from “components” to “factors,” the workflow for PCA and PAF are quite similar.

Steps in the process include:

- Creating an items only dataframe where all items are scaled in the same direction (i.e., negatively worded items are reverse scored).

- Conducting tests that assess the statistical assumptions of PAF to ensure that the data is appropriate for PAF.

- Determining the number of factors (think “subscales”) to extract.

- Conducting the factor extraction – this process will likely occur iteratively,

- exploring orthogonal (uncorrelated/independent) and oblique (correlated) factors, and

- changing the number of factors to extract

Because the intended audience for the ReCentering Psych Stats OER is the scientist-practitioner-advocate, this lesson focuses on the workflow and decisions. As you might guess, the details of PAF can be quite complex. Some important notions to consider that may not be obvious from lesson, are these:

- The values of factor loadings are directly related to the correlation matrix.

- Although I do not explain this in detail, nearly every analytic step attempts to convey this notion by presenting equivalent analytic options using the raw data and correlation matrix.

- PAF (like PCA and related EFA procedures) is about dimension reduction – our goal is fewer factors (think subscales) than there are items.

- In this lesson’s vignette there are 25 items on the scale, and we will have 4 subscales.

- As a latent variable procedure, PAF is both exploratory and factor analysis. This is in contrast to our prior PCA lesson. Recall that PCA is a regression-based model and therefore not “factor analysis.”

- Matrix algebra (e.g., using the transpose of a matrix, multiplying matrices together) plays a critical role in the analytic solution.

9.4 Research Vignette

This lesson’s research vignette emerges from Lewis and Neville’s Gendered Racial Microaggressions Scale for Black Women (2015). The article reports on two separate studies that comprised the development, refinement, and psychometric evaluation of two parallel versions (stress appraisal, frequency) of the scale. Below, I simulate data from the final construction of the stress appraisal version as the basis of the lecture. Items were on a 6-point Likert scale ranging from 0 (not at all stressful) to 5 (extremely stressful).

Lewis and Neville (2015) reported support for a total scale score (25 items) and four subscales. Below, I list the four subscales along with the items and their abbreviation. At the outset, let me provide a content advisory. For those who hold this particular identity (or related identities) the content in the items may be upsetting. In other lessons, I often provide a variable name that gives an indication of the primary content of the item. In the case of the GRMS, I will simply provide an abbreviation of the subscale name and its respective item number. This will allow us to easily inspect the alignment of the item with its intended factor, and hopefully minimize discomfort.

If you are not a member of this particular identity, I encourage you to learn about these microaggressions by reading the article in its entirety. Please do not ask members of this group to explain why these microaggressions are harmful or ask if they have encountered them. The four factors, number of items, and sample item are as follows:

- Assumptions of Beauty and Sexual Objectification (10 items)

- Unattractive because of size of butt (Obj1)

- Negative comments about size of facial features (Obj2)

- Imitated the way they think Black women speak (Obj3)

- Someone made me feel unattractive (Obj4)

- Negative comment about skin tone (Obj5)

- Someone assumed I speak a certain way (Obj6)

- Objectified me based on physical features(Obj7)

- Someone assumed I have a certain body type (Obj8; stress only)

- Made a sexually inappropriate comment (Obj9)

- Negative comments about my hair when natural (Obj10)

- Assumed I was sexually promiscuous (frequency only; not used in this simulation)

- Silenced and Marginalized (7 items)

- I have felt unheard (Marg1)

- My comments have been ignored (Marg2)

- Someone challenged my authority (Marg3)

- I have been disrespected in workplace (Marg4)

- Someone has tried to “put me in my place” (Marg5)

- Felt excluded from networking opportunities (Marg6)

- Assumed I did not have much to contribute to the conversation (Marg7)

- Strong Black Woman Stereotype (5 items)

- Someone assumed I was sassy and straightforward (Str1; stress only)

- I have been told that I am too independent (Str2)

- Someone made me feel exotic as a Black woman (Str2; stress only)

- I have been told that I am too assertive

- Assumed to be a strong Black woman

- Angry Black Woman Stereotype (3 items)

- Someone has told me to calm down (Ang1)

- Perceived to be “angry Black woman” (Ang2)

- Someone accused me of being angry when speaking calm (Ang3)

Three additional scales were reported in the Lewis and Neville article (2015). Because (a) the focus of this lesson is on exploratory factor analytic approaches and, therefore, only requires item-level data for the scale, and (b) the article does not include correlations between the subscales/scales of all involved measures, I only simulated item-level data for the GRMS items.

Below, I walk through the data simulation. This is not an essential portion of the lesson, but I will lecture it in case you are interested. None of the items are negatively worded (relative to the other items), so there is no need to reverse-score any items.

Simulating the data involved using factor loadings, means, standard deviations, and correlations between the scales. Because the simulation will produce “out-of-bounds” values, the code below rescales the scores into the range of the Likert-type scaling and rounds them to whole values.

# Entering the intercorrelations means and standard deviations from

# the journal article

LewisGRMS_generating_model <- "

#measurement model

Objectification =~ .69*Obj1 + .69*Obj2 + .60*Obj3 + .59*Obj4 + .55*Obj5 + .55*Obj6 + .54*Obj7 + .50*Obj8 + .41*Obj9 + .41*Obj10

Marginalized =~ .93*Marg1 + .81*Marg2 +.69*Marg3 + .67*Marg4 + .61*Marg5 + .58*Marg6 +.54*Marg7

Strong =~ .59*Str1 + .55*Str2 + .54*Str3 + .54*Str4 + .51*Str5

Angry =~ .70*Ang1 + .69*Ang2 + .68*Ang3

#Means

Objectification ~ 1.85*1

Marginalized ~ 2.67*1

Strong ~ 1.61*1

Angry ~ 2.29*1

#Correlations

Objectification ~~ .63*Marginalized

Objectification ~~ .66*Strong

Objectification ~~ .51*Angry

Marginalized ~~ .59*Strong

Marginalized ~~ .62*Angry

Strong ~~ .61*Angry

"

set.seed(240311)

dfGRMS <- lavaan::simulateData(model = LewisGRMS_generating_model, model.type = "sem",

meanstructure = T, sample.nobs = 259, standardized = FALSE)

# used to retrieve column indices used in the rescaling script below

col_index <- as.data.frame(colnames(dfGRMS))

# The code below loops through each column of the dataframe and

# assigns the scaling accordingly Rows 1 thru 26 are the GRMS items

for (i in 1:ncol(dfGRMS)) {

if (i >= 1 & i <= 25) {

dfGRMS[, i] <- scales::rescale(dfGRMS[, i], c(0, 5))

}

}

# rounding to integers so that the data resembles that which was

# collected

library(tidyverse)

dfGRMS <- dfGRMS %>%

round(0)

# quick check psych::describe(dfGRMS)The optional script below will let you save the simulated data to your computing environment as either an .rds object (preserves any formatting you might do) or a .csv file (think “Excel lite”).

An .rds file preserves all formatting to variables prior to the export and re-import. For the purpose of this chapter, you don’t need to do either. That is, you can re-simulate the data each time you work the problem.

# to save the df as an .rds (think 'R object') file on your computer;

# it should save in the same file as the .rmd file you are working

# with saveRDS(dfGRMS, 'dfGRMS.rds') bring back the simulated dat

# from an .rds file dfGRMS <- readRDS('dfGRMS.rds')If you save the .csv file and bring it back in, you will lose any formatting (e.g., ordered factors will be interpreted as character variables).

# write the simulated data as a .csv write.table(dfGRMS,

# file='dfGRMS.csv', sep=',', col.names=TRUE, row.names=FALSE) bring

# back the simulated dat from a .csv file dfGRMS <- read.csv

# ('dfGRMS.csv', header = TRUE)Before moving on, I want to acknowledge that (at their first drafting), I try to select research vignettes that have been published within the prior 5 years. With a publication date of 2015, this article clearly falls outside that range. I have continued to include it because (a) the scholarship is superior – especially as the measure captures an intersectional identity, (b) the article has been a model for research that follows (e.g., Keum et al’s (2018) Gendered Racial Microaggression Scale for Asian American Women), and (c) there is often a time lag between the initial publication of a psychometric scale and its use. A key reason I have retained the GRMS as a psychometrics research vignette is that in ReCentering Psych Stats: Multivariate Modeling, GRMS scales are used in a couple of more recently published research vignettes.

9.5 Working the Vignette

It may be useful to recall how we might understand factors in the psychometric sense:

- clusters of correlated items in an \(R\)-matrix

- statistical entities that can be plotted as classification axes where coordinates of variables along each axis represent the strength of the relationship between that variable to each factor.

- mathematical equations, resembling regression equations, where each variable is represented according to its relative weight

9.5.1 Data Prep

Since the first step is data preparation, let’s start by:

- reverse coding any items that are phrased in the opposite direction

- creating a df (as an object) that only contains the items in their properly scored direction (i.e., you might need to replace the original item with the reverse-coded item); there should be no other variables (e.g., ID, demographic variables, other scales) in this df

- because the GRMS has no items like this we can skip these two steps

Our example today requires no reverse coding and the dataset I simulated only has item-level data (with no ID and no other variables). This means we are ready to start the PAF process.

Let’s take a look at (and make an object of) the correlation matrix.

GRMSr <- cor(dfGRMS) #correlation matrix (with the negatively scored item already reversed) created and saved as object

round(GRMSr, 2) Obj1 Obj2 Obj3 Obj4 Obj5 Obj6 Obj7 Obj8 Obj9 Obj10 Marg1 Marg2 Marg3

Obj1 1.00 0.35 0.25 0.27 0.28 0.25 0.28 0.35 0.15 0.24 0.19 0.25 0.17

Obj2 0.35 1.00 0.31 0.25 0.27 0.23 0.31 0.28 0.26 0.24 0.22 0.21 0.25

Obj3 0.25 0.31 1.00 0.24 0.28 0.28 0.20 0.25 0.21 0.22 0.17 0.23 0.17

Obj4 0.27 0.25 0.24 1.00 0.39 0.23 0.28 0.30 0.26 0.28 0.22 0.18 0.14

Obj5 0.28 0.27 0.28 0.39 1.00 0.15 0.18 0.29 0.25 0.20 0.17 0.20 0.23

Obj6 0.25 0.23 0.28 0.23 0.15 1.00 0.20 0.14 0.21 0.12 0.10 0.14 0.05

Obj7 0.28 0.31 0.20 0.28 0.18 0.20 1.00 0.31 0.19 0.28 0.30 0.21 0.20

Obj8 0.35 0.28 0.25 0.30 0.29 0.14 0.31 1.00 0.19 0.23 0.27 0.14 0.14

Obj9 0.15 0.26 0.21 0.26 0.25 0.21 0.19 0.19 1.00 0.20 0.10 0.12 0.21

Obj10 0.24 0.24 0.22 0.28 0.20 0.12 0.28 0.23 0.20 1.00 0.09 0.12 0.17

Marg1 0.19 0.22 0.17 0.22 0.17 0.10 0.30 0.27 0.10 0.09 1.00 0.43 0.41

Marg2 0.25 0.21 0.23 0.18 0.20 0.14 0.21 0.14 0.12 0.12 0.43 1.00 0.35

Marg3 0.17 0.25 0.17 0.14 0.23 0.05 0.20 0.14 0.21 0.17 0.41 0.35 1.00

Marg4 0.19 0.18 0.24 0.26 0.20 0.10 0.25 0.24 0.07 0.12 0.38 0.23 0.32

Marg5 0.17 0.22 0.21 0.27 0.25 0.16 0.23 0.19 0.19 0.11 0.41 0.40 0.25

Marg6 0.18 0.27 0.16 0.23 0.22 0.26 0.28 0.26 0.15 0.26 0.35 0.27 0.25

Marg7 0.13 0.19 0.14 0.19 0.06 0.17 0.16 0.14 0.10 0.11 0.31 0.33 0.20

Str1 0.22 0.18 0.14 0.06 0.23 0.07 0.25 0.17 0.19 0.10 0.19 0.25 0.20

Str2 0.19 0.18 0.19 0.19 0.12 0.15 0.13 0.06 0.18 0.19 0.12 0.18 0.17

Str3 0.10 0.09 0.09 0.08 0.11 0.09 0.19 0.05 0.12 0.10 0.13 0.18 0.10

Str4 0.09 0.14 0.18 0.15 0.12 0.08 0.07 0.13 0.05 0.02 0.08 0.12 0.08

Str5 0.20 0.15 0.15 0.08 0.19 0.11 0.15 0.04 0.07 0.09 0.10 0.23 0.12

Ang1 0.06 0.07 0.07 0.09 0.12 0.04 0.15 0.07 0.17 0.06 0.16 0.23 0.18

Ang2 0.06 0.15 0.08 0.06 0.09 0.20 0.13 -0.03 0.00 0.14 0.17 0.19 0.19

Ang3 0.21 0.13 0.11 0.14 0.11 0.16 0.23 0.07 0.06 0.08 0.28 0.28 0.11

Marg4 Marg5 Marg6 Marg7 Str1 Str2 Str3 Str4 Str5 Ang1 Ang2 Ang3

Obj1 0.19 0.17 0.18 0.13 0.22 0.19 0.10 0.09 0.20 0.06 0.06 0.21

Obj2 0.18 0.22 0.27 0.19 0.18 0.18 0.09 0.14 0.15 0.07 0.15 0.13

Obj3 0.24 0.21 0.16 0.14 0.14 0.19 0.09 0.18 0.15 0.07 0.08 0.11

Obj4 0.26 0.27 0.23 0.19 0.06 0.19 0.08 0.15 0.08 0.09 0.06 0.14

Obj5 0.20 0.25 0.22 0.06 0.23 0.12 0.11 0.12 0.19 0.12 0.09 0.11

Obj6 0.10 0.16 0.26 0.17 0.07 0.15 0.09 0.08 0.11 0.04 0.20 0.16

Obj7 0.25 0.23 0.28 0.16 0.25 0.13 0.19 0.07 0.15 0.15 0.13 0.23

Obj8 0.24 0.19 0.26 0.14 0.17 0.06 0.05 0.13 0.04 0.07 -0.03 0.07

Obj9 0.07 0.19 0.15 0.10 0.19 0.18 0.12 0.05 0.07 0.17 0.00 0.06

Obj10 0.12 0.11 0.26 0.11 0.10 0.19 0.10 0.02 0.09 0.06 0.14 0.08

Marg1 0.38 0.41 0.35 0.31 0.19 0.12 0.13 0.08 0.10 0.16 0.17 0.28

Marg2 0.23 0.40 0.27 0.33 0.25 0.18 0.18 0.12 0.23 0.23 0.19 0.28

Marg3 0.32 0.25 0.25 0.20 0.20 0.17 0.10 0.08 0.12 0.18 0.19 0.11

Marg4 1.00 0.30 0.26 0.16 0.10 0.21 0.05 0.06 0.03 0.12 0.22 0.17

Marg5 0.30 1.00 0.29 0.28 0.16 0.13 0.16 0.14 0.18 0.12 0.14 0.21

Marg6 0.26 0.29 1.00 0.20 0.13 0.18 0.15 0.13 0.08 0.11 0.21 0.12

Marg7 0.16 0.28 0.20 1.00 0.14 0.05 0.04 0.02 0.12 0.17 0.13 0.09

Str1 0.10 0.16 0.13 0.14 1.00 0.21 0.30 0.23 0.23 0.18 0.05 0.10

Str2 0.21 0.13 0.18 0.05 0.21 1.00 0.20 0.20 0.12 0.16 0.12 0.16

Str3 0.05 0.16 0.15 0.04 0.30 0.20 1.00 0.27 0.18 0.20 0.07 0.15

Str4 0.06 0.14 0.13 0.02 0.23 0.20 0.27 1.00 0.12 0.15 0.03 0.02

Str5 0.03 0.18 0.08 0.12 0.23 0.12 0.18 0.12 1.00 0.22 0.15 0.11

Ang1 0.12 0.12 0.11 0.17 0.18 0.16 0.20 0.15 0.22 1.00 0.24 0.23

Ang2 0.22 0.14 0.21 0.13 0.05 0.12 0.07 0.03 0.15 0.24 1.00 0.25

Ang3 0.17 0.21 0.12 0.09 0.10 0.16 0.15 0.02 0.11 0.23 0.25 1.00In case you want to examine it in sections (easier to view):

As with PCA, we can analyze the data with either raw data or correlation matrix. I will do both to demonstrate (a) that it’s possible and to (b) continue emphasizing that this is a structural analysis. That is, we are trying to see if our more parsimonious extraction reproduces this original correlation matrix.

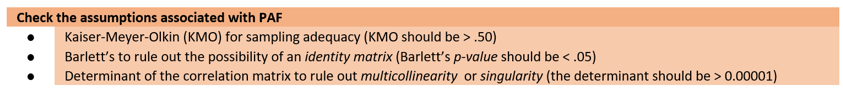

9.5.1.1 Three Diagnostic Tests to Evaluate the Appropriateness of the Data for Factor (or Component))Analysis

Here’s a snip of our location in the PAF workflow.

9.5.1.1.1 Is my sample adequate for PAF?

We return to the KMO (Kaiser-Meyer-Olkin), an index of sampling adequacy that can be used with the actual sample to let us know if the sample size is sufficient (or if we should collect more data).

Kaiser’s 1974 recommendations were:

- bare minimum of .5

- values between .5 and .7 are mediocre

- values between .7 and .8 are good

- values above .9 are superb

We use the KMO() function from the psych package with either raw or matrix dat.

Kaiser-Meyer-Olkin factor adequacy

Call: psych::KMO(r = dfGRMS)

Overall MSA = 0.85

MSA for each item =

Obj1 Obj2 Obj3 Obj4 Obj5 Obj6 Obj7 Obj8 Obj9 Obj10 Marg1 Marg2 Marg3

0.87 0.91 0.88 0.85 0.85 0.80 0.90 0.85 0.81 0.85 0.86 0.89 0.86

Marg4 Marg5 Marg6 Marg7 Str1 Str2 Str3 Str4 Str5 Ang1 Ang2 Ang3

0.86 0.90 0.89 0.84 0.83 0.85 0.82 0.74 0.84 0.78 0.76 0.81 # psych::KMO(GRMSr) #for the KMO function, do not specify sample size

# if using the matrix form of the dataWe examine the KMO values for both the overall matrix and the individual items.

At the matrix level, our \(KMO = .85\), which falls in between Kaiser’s definitions of good and superb.

At the item level, the KMO should be > .50. Variables with values below .5 should be evaluated for exclusion from the analysis (or run the analysis with and without the variable and compare the difference). Because removing/adding variables impacts the KMO, be sure to re-evaluate.

At the item level, our KMO values range between .74 (Str4) and .91 (Obj2).

Considering both item- and matrix- levels, we conclude that the sample size and the data are adequate for factor (or component) analysis.

9.5.1.1.2 Are the correlations among the variables big enough to be analyzed?

Bartlett’s lets us know if a matrix is an identity matrix. In an identity matrix all correlation coefficients (everything on the off-diagonal) would be 0.0 (and everything on the diagonal would be 1.0).

A significant Barlett’s (i.e., \(p < .05\)) tells that the \(R\)-matrix is not an identity matrix. That is, there are some relationships between variables that can be analyzed.

The cortest.bartlett() function in the psych package and can be run either from the raw data or R matrix formats.

R was not square, finding R from data$chisq

[1] 1217.508

$p.value

[1] 0.00000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000001107085

$df

[1] 300# raw data produces the warning 'R was not square, finding R from

# data.' This means nothing other than we fed it raw data and the

# function is creating a matrix from which to do the analysis.

# psych::cortest.bartlett(GRMSr, n = 259) #if using the matrix, must

# specify sample sizeOur Bartlett’s test is significant: \(\chi^{2}(300)=1217.508, p < .001\). This supports a factor (or component) analytic approach for investigating the data.

9.5.1.1.3 Is there multicollinearity or singularity in my data?

The determinant of the correlation matrix should be greater than 0.00001 (that would be 4 zeros before the 1). If it is smaller than 0.00001 then we may have an issue with multicollinearity (i.e., variables that are too highly correlated) or singularity (variables that are perfectly correlated).

The determinant function comes from base R. It is easiest to compute when the correlation matrix is the object. However, it is also possible to specify the command to work with the raw data.

[1] 0.007499909With a value of 0.0075, our determinant is greater than the 0.00001 requirement. If it were not, then we could identify problematic variables (i.e., those correlating too highly with others and those not correlating sufficiently with others) and re-run the diagnostic statistics.

9.5.1.2 APA Style Summary So Far

Data screening were conducted to determine the suitability of the data for principal axis factoring. The Kaiser-Meyer-Olkin measure of sampling adequacy (KMO; Kaiser, 1970) represents the ratio of the squared correlation between variables to the squared partial correlation between variables. KMO ranges from 0.00 to 1.00; values closer to 1.00 indicate that the patterns of correlations are relatively compact, and that factor analysis should yield distinct and reliable factors (Field, 2012). In our dataset, the KMO value was .85, indicating acceptable sampling adequacy. The Barlett’s Test of Sphericity examines whether the population correlation matrix resembles an identity matrix (Field, 2012). When the p value for the Bartlett’s test is < .05, we are fairly certain we have clusters of correlated variables. In our dataset, \(\chi^{2}(300)=1217.508, p < .001\), indicating the correlations between items are sufficiently large enough for principal axis factoring. The determinant of the correlation matrix alerts us to any issues of multicollinearity or singularity and should be larger than 0.00001. Our determinant was 0.0075, supporting the suitability of our data for analysis.

Note: If this looks familiar, it is! The same diagnostics are used in PAF and PCA.

9.5.2 Principal Axis Factoring (PAF)

Here’s a snip of our location in the PAF workflow.

We can use the fa() function, specifying fm = “pa” from the psych package with raw or matrix data.

One difference from PCA is that factor analysis will not (cannot) calculate as many factors as there are items. This means that we should select a reasonable number, like 20 (since there are 25 items). However, I received a number of errors/warnings and 13 is the first number that would run. I also received the warning, “maximum iteration exceeded.” Therefore, I increased “max.iter” to 100.

Our goal is to begin to get an idea of the cumulative variance explained, and number of factors to extract. If we think there are four factors, we simply need to specify more than four factors on the nfactors = ## command. As long as that number is less than the total number of items, it does not matter what that number is.

# grmsPAF1 <- psych::fa(GRMSr, nfactors=10, fm = 'pa', max.iter =

# 100, rotate='none')# using the matrix data and specifying the # of

# factors.

grmsPAF1 <- psych::fa(dfGRMS, nfactors = 13, fm = "pa", max.iter = 100,

rotate = "none") # using raw data and specifying the max number of factors

# I received the warning 'maximum iteration exceeded'. It gave

# output, but it's best if we don't get that warning, so I increased

# it to 100.

grmsPAF1 #this object holds a great deal of information Factor Analysis using method = pa

Call: psych::fa(r = dfGRMS, nfactors = 13, rotate = "none", max.iter = 100,

fm = "pa")

Standardized loadings (pattern matrix) based upon correlation matrix

PA1 PA2 PA3 PA4 PA5 PA6 PA7 PA8 PA9 PA10 PA11 PA12

Obj1 0.49 -0.20 -0.01 0.13 -0.01 0.11 -0.17 -0.16 0.01 -0.09 0.21 0.04

Obj2 0.51 -0.19 -0.03 0.13 0.07 -0.02 -0.04 0.00 0.06 -0.08 0.09 -0.01

Obj3 0.45 -0.17 -0.01 0.05 0.03 0.09 0.00 0.12 -0.04 -0.04 0.14 0.10

Obj4 0.49 -0.25 -0.11 0.03 -0.06 0.04 0.04 0.03 -0.09 0.18 -0.09 0.19

Obj5 0.54 -0.46 0.06 -0.50 -0.46 0.01 -0.09 0.08 0.04 -0.01 -0.06 -0.07

Obj6 0.38 -0.15 0.00 0.39 -0.08 0.07 -0.06 0.36 -0.08 0.02 0.09 -0.18

Obj7 0.51 -0.04 -0.04 0.16 0.06 0.03 -0.02 -0.22 0.05 0.02 -0.05 -0.12

Obj8 0.46 -0.29 -0.17 -0.02 0.15 0.05 -0.08 -0.25 -0.03 0.24 0.09 -0.11

Obj9 0.40 -0.30 0.16 0.09 0.06 -0.44 0.35 0.01 -0.27 -0.09 -0.03 -0.04

Obj10 0.39 -0.23 -0.04 0.21 0.00 -0.01 0.12 -0.15 0.33 -0.04 -0.19 0.20

Marg1 0.56 0.33 -0.24 -0.12 0.11 -0.12 -0.15 -0.06 -0.07 0.05 -0.11 -0.07

Marg2 0.55 0.30 0.03 -0.07 0.02 -0.14 -0.21 0.06 0.00 -0.07 0.05 0.15

Marg3 0.48 0.18 -0.09 -0.17 0.08 -0.19 0.11 0.01 0.12 -0.17 -0.01 0.00

Marg4 0.51 0.21 -0.47 -0.20 0.03 0.32 0.38 0.00 -0.08 -0.08 0.14 -0.02

Marg5 0.52 0.15 -0.09 -0.10 0.04 -0.10 -0.13 0.14 -0.13 0.03 -0.08 0.09

Marg6 0.51 0.02 -0.13 0.08 0.09 0.00 -0.01 0.12 0.15 0.08 -0.24 -0.16

Marg7 0.37 0.17 -0.10 0.05 0.08 -0.22 -0.12 0.12 0.06 0.13 0.12 0.08

Str1 0.40 0.02 0.34 -0.14 0.18 0.01 -0.07 -0.14 0.02 -0.18 0.07 -0.14

Str2 0.36 0.01 0.17 0.07 0.07 0.14 0.17 0.04 -0.01 -0.14 -0.05 0.11

Str3 0.30 0.10 0.38 -0.02 0.13 0.13 -0.01 -0.03 -0.03 -0.05 -0.17 -0.07

Str4 0.27 -0.03 0.36 -0.13 0.28 0.32 0.00 0.16 -0.10 0.16 -0.09 0.08

Str5 0.30 0.07 0.27 -0.02 -0.07 0.01 -0.11 0.04 0.13 -0.06 0.16 0.04

Ang1 0.34 0.32 0.38 -0.03 -0.17 -0.10 0.28 -0.11 0.09 0.34 0.16 -0.01

Ang2 0.30 0.28 0.00 0.15 -0.24 0.08 0.12 0.16 0.22 -0.02 -0.03 -0.08

Ang3 0.38 0.32 0.06 0.25 -0.39 0.12 -0.10 -0.24 -0.29 -0.07 -0.12 0.03

PA13 h2 u2 com

Obj1 -0.05 0.42 0.5768 2.8

Obj2 -0.15 0.36 0.6397 1.9

Obj3 -0.07 0.30 0.7008 2.1

Obj4 0.11 0.43 0.5749 2.7

Obj5 0.00 1.00 0.0031 4.1

Obj6 0.05 0.51 0.4897 4.2

Obj7 0.12 0.38 0.6219 1.9

Obj8 -0.07 0.50 0.4962 4.2

Obj9 -0.01 0.69 0.3115 5.1

Obj10 0.05 0.48 0.5243 5.2

Marg1 -0.07 0.58 0.4213 2.9

Marg2 0.01 0.50 0.5037 2.4

Marg3 -0.20 0.44 0.5594 3.2

Marg4 0.12 0.86 0.1379 4.9

Marg5 0.12 0.41 0.5908 2.2

Marg6 -0.03 0.42 0.5816 2.5

Marg7 0.10 0.31 0.6869 4.2

Str1 0.11 0.43 0.5733 4.2

Str2 -0.02 0.25 0.7472 3.3

Str3 0.15 0.34 0.6630 3.7

Str4 -0.17 0.51 0.4923 6.2

Str5 0.10 0.25 0.7537 4.0

Ang1 -0.02 0.64 0.3585 6.3

Ang2 -0.07 0.35 0.6509 5.8

Ang3 -0.09 0.66 0.3388 6.2

PA1 PA2 PA3 PA4 PA5 PA6 PA7 PA8 PA9 PA10 PA11

SS loadings 4.86 1.25 1.04 0.76 0.68 0.62 0.58 0.51 0.45 0.39 0.36

Proportion Var 0.19 0.05 0.04 0.03 0.03 0.02 0.02 0.02 0.02 0.02 0.01

Cumulative Var 0.19 0.24 0.29 0.32 0.34 0.37 0.39 0.41 0.43 0.45 0.46

Proportion Explained 0.40 0.10 0.09 0.06 0.06 0.05 0.05 0.04 0.04 0.03 0.03

Cumulative Proportion 0.40 0.51 0.60 0.66 0.72 0.77 0.82 0.86 0.89 0.93 0.96

PA12 PA13

SS loadings 0.27 0.24

Proportion Var 0.01 0.01

Cumulative Var 0.47 0.48

Proportion Explained 0.02 0.02

Cumulative Proportion 0.98 1.00

Mean item complexity = 3.8

Test of the hypothesis that 13 factors are sufficient.

df null model = 300 with the objective function = 4.89 with Chi Square = 1217.51

df of the model are 53 and the objective function was 0.1

The root mean square of the residuals (RMSR) is 0.01

The df corrected root mean square of the residuals is 0.03

The harmonic n.obs is 259 with the empirical chi square 17.64 with prob < 1

The total n.obs was 259 with Likelihood Chi Square = 24.56 with prob < 1

Tucker Lewis Index of factoring reliability = 1.184

RMSEA index = 0 and the 90 % confidence intervals are 0 0

BIC = -269.95

Fit based upon off diagonal values = 1

Measures of factor score adequacy

PA1 PA2 PA3 PA4 PA5 PA6

Correlation of (regression) scores with factors 0.96 0.89 0.85 0.87 0.85 0.79

Multiple R square of scores with factors 0.92 0.79 0.72 0.75 0.72 0.62

Minimum correlation of possible factor scores 0.83 0.58 0.44 0.51 0.44 0.25

PA7 PA8 PA9 PA10 PA11

Correlation of (regression) scores with factors 0.80 0.71 0.71 0.67 0.64

Multiple R square of scores with factors 0.64 0.50 0.50 0.45 0.42

Minimum correlation of possible factor scores 0.28 0.01 0.00 -0.09 -0.17

PA12 PA13

Correlation of (regression) scores with factors 0.58 0.56

Multiple R square of scores with factors 0.34 0.31

Minimum correlation of possible factor scores -0.33 -0.38The total variance for a particular variable will have two factors: some variance will be shared with other variables (common variance) and some variance will be specific to that measure (unique variance). Random variance is also specific to one item, but not reliably so. We can examine this most easily by examining the matrix (second screen).

The columns PA1 thru PA10 are the (uninteresting at this point) unrotated loadings. These are the loading from each factor to each variable. PA stands for “principal axis.”

Scrolling to the far right we are interested in:

Communalities are represented as \(h^2\). These are the proportions of common variance present in the variables. A variable that has no specific (or random) variance would have a communality of 1.0. If a variable shares none of its variance with any other variable, its communality would be 0.0. As a point of comparison, in PCA these started as 1.0 because we extracted the same number of components as items. In PAF, because we must extract fewer factors than items, these will have unique values.

**Uniquenesses* are represented as \(u2\). These are the amount of unique variance for each variable. They are calculated as \(1 - h^2\) (or 1 minus the communality).

The final column, com represents item complexity. This is an indication of how well an item reflects a single construct. If it is 1.0 then the item loads only on one factor, if it is 2.0, it loads evenly on two factors, and so forth. For now, we can ignore this. I mostly wanted to reassure you that “com” is not “communality” – h2 is communality.

Let’s switch to the first screen of output.

Eigenvalues are displayed in the row called, SS loadings (i.e., the sum of squared loadings). They represent the variance explained by the particular linear factor. PA1 explains 4.86 units of variance (out of a possible 25; the # of potential factors). As a proportion, this is 4.86/25 = 0.1944 (reported in the Proportion Var row). We inspect the eigenvalues to see how many are > 1.0 (Kaiser’s eigenvalue > 1 criteria criteria). We see there are three that meet Kaiser’s critera and four that meet Joliffe’s criteria (eigenvalues > .70).

[1] 0.1944Cumulative Var is helpful to determine how many factors we’d like to retain to balance parsimony (few as possible) with the amount of variance we want to explain. Directly related to the eigenvalues, we can see how including each additional factor accounts for a greater proportion of variance.

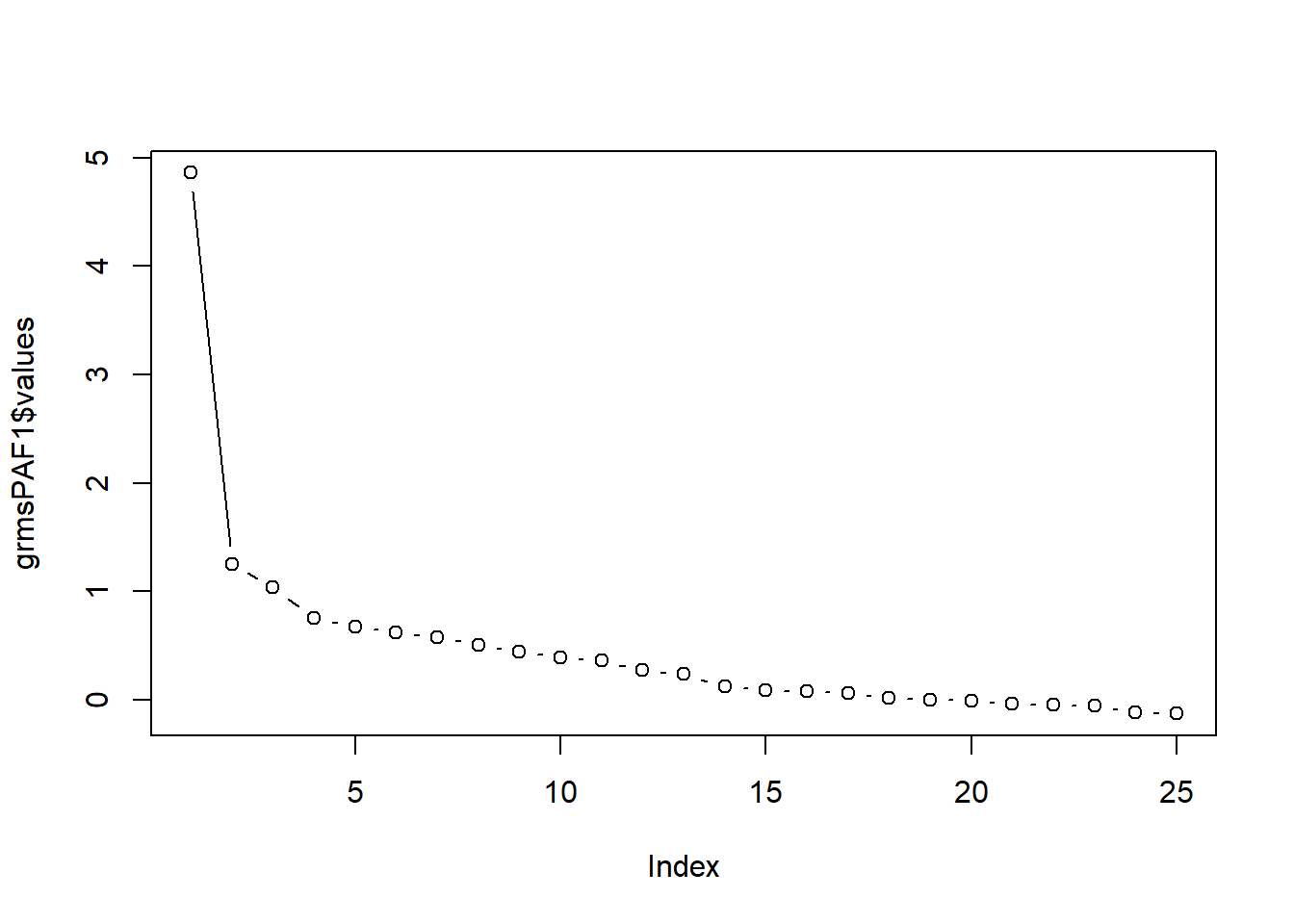

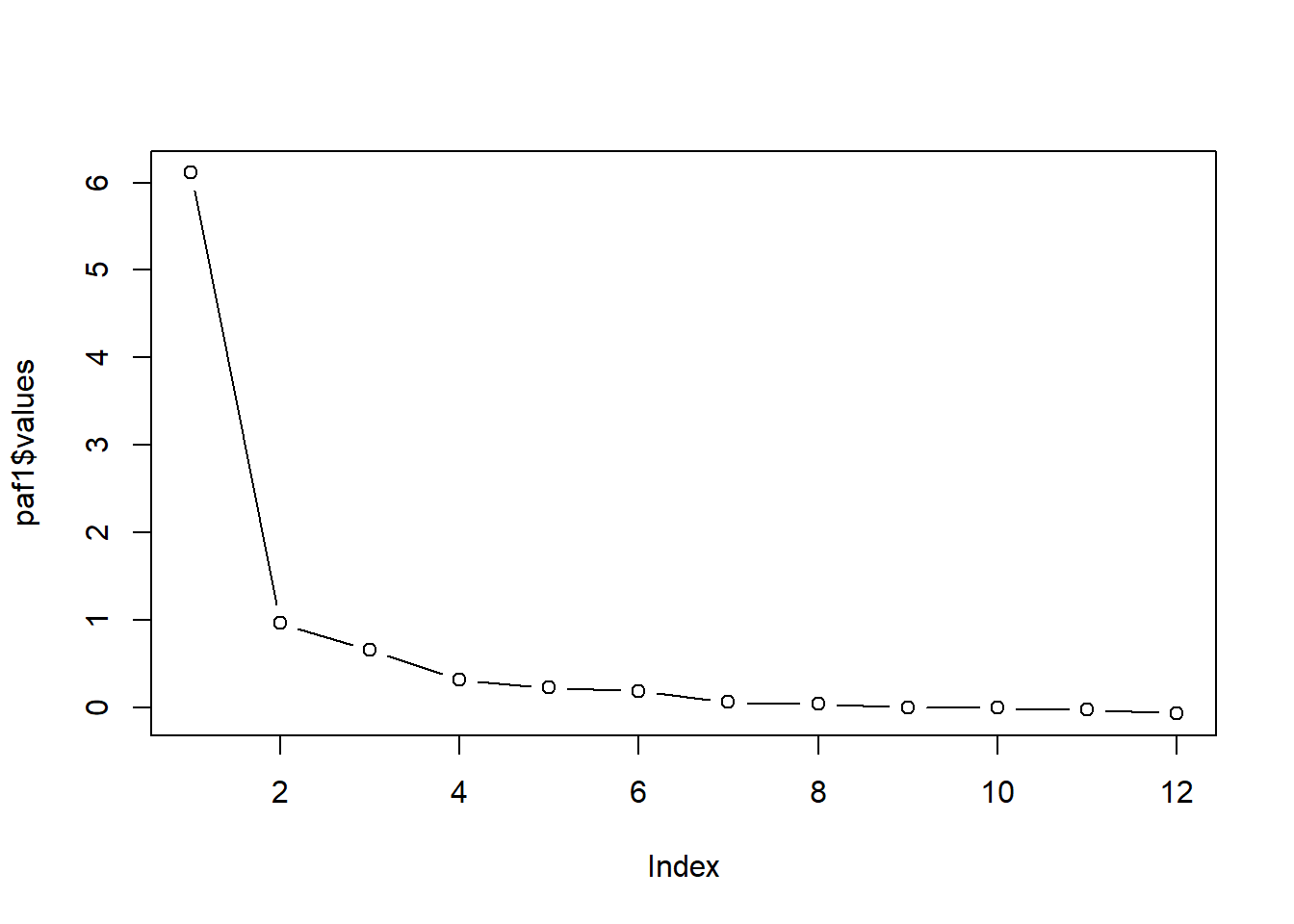

Scree plots can help us visualize the relationship between the eigenvalues. A rule of thumb is to select the number of factors that is associated with the number of dots before the flattening of the curve. Eigenvalues are stored in the grmsPAF1 object’s variable, “values”. We can see all the values captured by this object with the names() function:

[1] "residual" "dof" "chi"

[4] "nh" "rms" "EPVAL"

[7] "crms" "EBIC" "ESABIC"

[10] "fit" "fit.off" "sd"

[13] "factors" "complexity" "n.obs"

[16] "objective" "criteria" "STATISTIC"

[19] "PVAL" "Call" "null.model"

[22] "null.dof" "null.chisq" "TLI"

[25] "F0" "RMSEA" "BIC"

[28] "SABIC" "r.scores" "R2"

[31] "valid" "score.cor" "weights"

[34] "rotation" "hyperplane" "communality"

[37] "communalities" "uniquenesses" "values"

[40] "e.values" "loadings" "model"

[43] "fm" "Structure" "communality.iterations"

[46] "method" "scores" "R2.scores"

[49] "r" "np.obs" "fn"

[52] "Vaccounted" plot(grmsPAF1$values, type = "b") #type = 'b' gives us 'both' lines and points; type = 'l' gives lines and is relatively worthless

We look for the point of inflexion. That is, where the baseline levels out into a plateau. I can see inflections after 1, 2, 3, and 4.

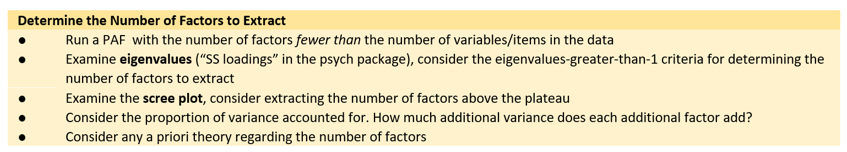

9.5.2.1 Specifying the number of factors

Here’s a snip of our location in the PAF workflow.

Having determined the number of factors, we must rerun the analysis with this specification. Especially when researchers may not have a clear theoretical structure that guides the process, researchers may do this iteratively with varying numbers of factors. Lewis and Neville (J. A. Lewis & Neville, 2015) examined solutions with 2, 3, 4, and 5 factors (they conducted a parallel factor analysis; in contrast this lesson demonstrates principal axis factoring).

# grmsPAF2 <- psych::fa(GRMSr, nfactors=4, fm = 'pa', rotate='none')

grmsPAF2 <- psych::fa(dfGRMS, nfactors = 4, fm = "pa", rotate = "none") #can copy prior script, but change nfactors and object name

grmsPAF2Factor Analysis using method = pa

Call: psych::fa(r = dfGRMS, nfactors = 4, rotate = "none", fm = "pa")

Standardized loadings (pattern matrix) based upon correlation matrix

PA1 PA2 PA3 PA4 h2 u2 com

Obj1 0.48 -0.24 0.02 0.02 0.29 0.71 1.5

Obj2 0.52 -0.22 -0.02 0.06 0.32 0.68 1.4

Obj3 0.45 -0.20 0.02 0.05 0.25 0.75 1.4

Obj4 0.49 -0.27 -0.12 0.03 0.33 0.67 1.7

Obj5 0.47 -0.23 0.03 -0.05 0.28 0.72 1.5

Obj6 0.37 -0.15 0.00 0.26 0.22 0.78 2.2

Obj7 0.51 -0.09 -0.02 0.02 0.27 0.73 1.1

Obj8 0.45 -0.31 -0.16 -0.16 0.35 0.65 2.4

Obj9 0.37 -0.21 0.08 -0.02 0.19 0.81 1.7

Obj10 0.38 -0.23 -0.02 0.14 0.22 0.78 2.0

Marg1 0.58 0.36 -0.31 -0.23 0.62 0.38 2.6

Marg2 0.55 0.31 0.00 -0.09 0.41 0.59 1.6

Marg3 0.48 0.19 -0.09 -0.09 0.28 0.72 1.5

Marg4 0.46 0.11 -0.24 0.01 0.28 0.72 1.6

Marg5 0.53 0.16 -0.11 -0.11 0.33 0.67 1.4

Marg6 0.50 0.01 -0.12 0.06 0.27 0.73 1.2

Marg7 0.37 0.16 -0.13 -0.03 0.18 0.82 1.6

Str1 0.40 0.04 0.38 -0.24 0.36 0.64 2.6

Str2 0.36 -0.01 0.21 0.08 0.18 0.82 1.7

Str3 0.30 0.10 0.41 -0.08 0.28 0.72 2.1

Str4 0.26 -0.03 0.29 -0.11 0.17 0.83 2.3

Str5 0.31 0.09 0.27 0.04 0.18 0.82 2.2

Ang1 0.32 0.24 0.24 0.08 0.22 0.78 3.0

Ang2 0.31 0.31 0.01 0.49 0.43 0.57 2.5

Ang3 0.35 0.21 0.03 0.17 0.19 0.81 2.1

PA1 PA2 PA3 PA4

SS loadings 4.67 1.03 0.83 0.57

Proportion Var 0.19 0.04 0.03 0.02

Cumulative Var 0.19 0.23 0.26 0.28

Proportion Explained 0.66 0.15 0.12 0.08

Cumulative Proportion 0.66 0.80 0.92 1.00

Mean item complexity = 1.9

Test of the hypothesis that 4 factors are sufficient.

df null model = 300 with the objective function = 4.89 with Chi Square = 1217.51

df of the model are 206 and the objective function was 0.77

The root mean square of the residuals (RMSR) is 0.04

The df corrected root mean square of the residuals is 0.04

The harmonic n.obs is 259 with the empirical chi square 202.41 with prob < 0.56

The total n.obs was 259 with Likelihood Chi Square = 189.19 with prob < 0.79

Tucker Lewis Index of factoring reliability = 1.027

RMSEA index = 0 and the 90 % confidence intervals are 0 0.018

BIC = -955.52

Fit based upon off diagonal values = 0.97

Measures of factor score adequacy

PA1 PA2 PA3 PA4

Correlation of (regression) scores with factors 0.93 0.79 0.74 0.70

Multiple R square of scores with factors 0.87 0.62 0.55 0.48

Minimum correlation of possible factor scores 0.75 0.24 0.11 -0.03Our eigenvalues/SS loadings wiggle around a bit from the initial run. With four factors, we now, cumulatively, explain 28% of the variance.

Communality is the proportion of common variance within a variable. Changing from 13 to 4 factors changed these values (\(h2\)) as well as their associated uniquenesses (\(u2\)), which are calculated as “1.0 minus the communality.”

Now we see that 29% of the variance associated with Obj1 is common/shared (the \(h2\) value).

As a reminder of what we are doing, recall that we are looking for a more parsimonious explanation than 25 items on the GRMS. By respecifying a smaller number of factors, we lose some information. That is, the retained factors (now 4) cannot explain all of the variance present in the data (as we saw, it explains about 28%, cumulatively). The amount of variance explained in each variable is represented by the communalities after extraction.

We can also inspect the communalities through the lens of Kaiser’s criterion (the eigenvalue > 1 criteria) to see if we think that four was a good number of factors to extract.

Kaiser’s criterion is believed to be accurate:

- when there are fewer than 30 variables (we had 25) and, after extraction, the communalities are greater than .70

- looking at our data, none of the communalities is > .70, so, this does not support extracting four factors

- When the sample size is greater than 250 (ours was 259) and the average communality is > .60

- calculated below, ours was .28.

Using the names() function again, we see that “communality” is available for manipulation.

[1] "residual" "dof" "chi"

[4] "nh" "rms" "EPVAL"

[7] "crms" "EBIC" "ESABIC"

[10] "fit" "fit.off" "sd"

[13] "factors" "complexity" "n.obs"

[16] "objective" "criteria" "STATISTIC"

[19] "PVAL" "Call" "null.model"

[22] "null.dof" "null.chisq" "TLI"

[25] "F0" "RMSEA" "BIC"

[28] "SABIC" "r.scores" "R2"

[31] "valid" "score.cor" "weights"

[34] "rotation" "hyperplane" "communality"

[37] "communalities" "uniquenesses" "values"

[40] "e.values" "loadings" "model"

[43] "fm" "Structure" "communality.iterations"

[46] "method" "scores" "R2.scores"

[49] "r" "np.obs" "fn"

[52] "Vaccounted" We can use this value to calculate their mean.

[1] 0.2840516We see that our average communality is 0.28. These two criteria suggest that we may not have the best solution. That said (in our defense):

- We used the scree plot as a guide, and it was very clear.

- We have an adequate sample size and that was supported with the KMO.

- Are the number of factors consistent with theory? We have not yet inspected the factor loadings. This will provide us with more information.

We could do several things:

- rerun with a different number of factors (recall Lewis and Neville (2015) ran models with 2, 3, 4, and 5 factors)

- conduct more diagnostics tests

- reproduced correlation matrix

- the difference between the reproduced correlation matrix and the correlation matrix from the data

The factor.model() function in psych produces the reproduced correlation matrix by using the loadings in our extracted object. Conceptually, this matrix is the correlations that should be produced if we did not have the raw data, but we only had the factor loadings.

The questions, though, is: How close did we get? How different is the reproduced correlation matrix from GRMSmatrix – the \(R\)-matrix produced from our raw data.

Obj1 Obj2 Obj3 Obj4 Obj5 Obj6 Obj7 Obj8 Obj9 Obj10 Marg1 Marg2

Obj1 0.293 0.304 0.270 0.300 0.284 0.219 0.270 0.287 0.229 0.242 0.187 0.193

Obj2 0.304 0.319 0.282 0.317 0.291 0.239 0.286 0.294 0.232 0.256 0.215 0.212

Obj3 0.270 0.282 0.250 0.277 0.260 0.209 0.252 0.258 0.209 0.225 0.178 0.185

Obj4 0.300 0.317 0.277 0.326 0.288 0.229 0.279 0.317 0.225 0.255 0.219 0.186

Obj5 0.284 0.291 0.260 0.288 0.280 0.196 0.261 0.287 0.226 0.225 0.193 0.194

Obj6 0.219 0.239 0.209 0.229 0.196 0.225 0.207 0.169 0.161 0.210 0.097 0.131

Obj7 0.270 0.286 0.252 0.279 0.261 0.207 0.273 0.260 0.205 0.218 0.271 0.256

Obj8 0.287 0.294 0.258 0.317 0.287 0.169 0.260 0.353 0.221 0.223 0.242 0.170

Obj9 0.229 0.232 0.209 0.225 0.226 0.161 0.205 0.221 0.186 0.183 0.118 0.140

Obj10 0.242 0.256 0.225 0.255 0.225 0.210 0.218 0.223 0.183 0.217 0.113 0.126

Marg1 0.187 0.215 0.178 0.219 0.193 0.097 0.271 0.242 0.118 0.113 0.619 0.455

Marg2 0.193 0.212 0.185 0.186 0.194 0.131 0.256 0.170 0.140 0.126 0.455 0.411

Marg3 0.182 0.201 0.173 0.191 0.182 0.121 0.228 0.187 0.129 0.126 0.393 0.329

Marg4 0.195 0.221 0.186 0.226 0.185 0.155 0.234 0.212 0.127 0.158 0.378 0.287

Marg5 0.213 0.233 0.201 0.225 0.213 0.140 0.257 0.225 0.153 0.151 0.424 0.350

Marg6 0.239 0.263 0.227 0.259 0.227 0.197 0.261 0.233 0.169 0.199 0.322 0.276

Marg7 0.140 0.159 0.134 0.154 0.136 0.105 0.180 0.145 0.093 0.104 0.320 0.257

Str1 0.188 0.177 0.171 0.136 0.206 0.082 0.191 0.148 0.177 0.103 0.187 0.258

Str2 0.184 0.190 0.174 0.160 0.178 0.155 0.184 0.122 0.151 0.147 0.124 0.189

Str3 0.128 0.119 0.120 0.072 0.137 0.075 0.136 0.053 0.126 0.071 0.105 0.207

Str4 0.135 0.126 0.122 0.097 0.144 0.070 0.126 0.097 0.128 0.082 0.075 0.145

Str5 0.134 0.136 0.128 0.097 0.132 0.111 0.145 0.061 0.116 0.097 0.115 0.193

Ang1 0.101 0.110 0.103 0.066 0.098 0.101 0.138 0.016 0.083 0.070 0.176 0.242

Ang2 0.087 0.123 0.102 0.085 0.052 0.193 0.143 -0.037 0.038 0.114 0.177 0.223

Ang3 0.125 0.146 0.126 0.120 0.111 0.141 0.165 0.063 0.084 0.109 0.231 0.243

Marg3 Marg4 Marg5 Marg6 Marg7 Str1 Str2 Str3 Str4 Str5 Ang1 Ang2

Obj1 0.182 0.195 0.213 0.239 0.140 0.188 0.184 0.128 0.135 0.134 0.101 0.087

Obj2 0.201 0.221 0.233 0.263 0.159 0.177 0.190 0.119 0.126 0.136 0.110 0.123

Obj3 0.173 0.186 0.201 0.227 0.134 0.171 0.174 0.120 0.122 0.128 0.103 0.102

Obj4 0.191 0.226 0.225 0.259 0.154 0.136 0.160 0.072 0.097 0.097 0.066 0.085

Obj5 0.182 0.185 0.213 0.227 0.136 0.206 0.178 0.137 0.144 0.132 0.098 0.052

Obj6 0.121 0.155 0.140 0.197 0.105 0.082 0.155 0.075 0.070 0.111 0.101 0.193

Obj7 0.228 0.234 0.257 0.261 0.180 0.191 0.184 0.136 0.126 0.145 0.138 0.143

Obj8 0.187 0.212 0.225 0.233 0.145 0.148 0.122 0.053 0.097 0.061 0.016 -0.037

Obj9 0.129 0.127 0.153 0.169 0.093 0.177 0.151 0.126 0.128 0.116 0.083 0.038

Obj10 0.126 0.158 0.151 0.199 0.104 0.103 0.147 0.071 0.082 0.097 0.070 0.114

Marg1 0.393 0.378 0.424 0.322 0.320 0.187 0.124 0.105 0.075 0.115 0.176 0.177

Marg2 0.329 0.287 0.350 0.276 0.257 0.258 0.189 0.207 0.145 0.193 0.242 0.223

Marg3 0.277 0.260 0.300 0.247 0.220 0.185 0.143 0.132 0.100 0.133 0.165 0.161

Marg4 0.260 0.281 0.286 0.264 0.219 0.098 0.117 0.052 0.045 0.087 0.116 0.182

Marg5 0.300 0.286 0.326 0.274 0.238 0.201 0.156 0.137 0.110 0.140 0.168 0.160

Marg6 0.247 0.264 0.274 0.271 0.203 0.142 0.160 0.097 0.085 0.123 0.136 0.189

Marg7 0.220 0.219 0.238 0.203 0.181 0.113 0.103 0.077 0.056 0.091 0.122 0.151

Str1 0.185 0.098 0.201 0.142 0.113 0.363 0.206 0.300 0.241 0.221 0.209 0.025

Str2 0.143 0.117 0.156 0.160 0.103 0.206 0.179 0.186 0.146 0.170 0.167 0.146

Str3 0.132 0.052 0.137 0.097 0.077 0.300 0.186 0.276 0.205 0.210 0.212 0.087

Str4 0.100 0.045 0.110 0.085 0.056 0.241 0.146 0.205 0.167 0.152 0.136 0.018

Str5 0.133 0.087 0.140 0.123 0.091 0.221 0.170 0.210 0.152 0.178 0.187 0.145

Ang1 0.165 0.116 0.168 0.136 0.122 0.209 0.167 0.212 0.136 0.187 0.223 0.215

Ang2 0.161 0.182 0.160 0.189 0.151 0.025 0.146 0.087 0.018 0.145 0.215 0.431

Ang3 0.187 0.180 0.196 0.186 0.155 0.120 0.142 0.123 0.073 0.140 0.181 0.256

Ang3

Obj1 0.125

Obj2 0.146

Obj3 0.126

Obj4 0.120

Obj5 0.111

Obj6 0.141

Obj7 0.165

Obj8 0.063

Obj9 0.084

Obj10 0.109

Marg1 0.231

Marg2 0.243

Marg3 0.187

Marg4 0.180

Marg5 0.196

Marg6 0.186

Marg7 0.155

Str1 0.120

Str2 0.142

Str3 0.123

Str4 0.073

Str5 0.140

Ang1 0.181

Ang2 0.256

Ang3 0.195We’re not really interested in this matrix. We just need it to compare it to the GRMSmatrix to produce the residuals. We do that next.

Residuals are the difference between the reproduced (i.e., those created from our factor loadings) and \(R\)-matrix produced by the raw data.

If we look at the \(r_{_{Obj1Obj2}}\) in our original correlation matrix (theoretically from the raw data [although we simulated data]), the value is 0.35. The reproduced correlation for this pair is 0.304. The difference is 0.046. The residuals table below shows 0.051 (rounding error).

[1] 0.046By using the factor.residuals() function we can calculate the residuals. Here we will see this difference calculated for us, for all the elements in the matrix.

Obj1 Obj2 Obj3 Obj4 Obj5 Obj6 Obj7 Obj8 Obj9 Obj10

Obj1 0.707 0.051 -0.020 -0.030 -0.002 0.031 0.011 0.059 -0.076 -0.001

Obj2 0.051 0.681 0.030 -0.069 -0.023 -0.005 0.026 -0.012 0.030 -0.011

Obj3 -0.020 0.030 0.750 -0.036 0.023 0.070 -0.051 -0.009 0.000 -0.010

Obj4 -0.030 -0.069 -0.036 0.674 0.099 0.003 0.006 -0.020 0.031 0.024

Obj5 -0.002 -0.023 0.023 0.099 0.720 -0.043 -0.084 0.004 0.026 -0.021

Obj6 0.031 -0.005 0.070 0.003 -0.043 0.775 -0.008 -0.028 0.046 -0.089

Obj7 0.011 0.026 -0.051 0.006 -0.084 -0.008 0.727 0.047 -0.012 0.065

Obj8 0.059 -0.012 -0.009 -0.020 0.004 -0.028 0.047 0.647 -0.028 0.007

Obj9 -0.076 0.030 0.000 0.031 0.026 0.046 -0.012 -0.028 0.814 0.016

Obj10 -0.001 -0.011 -0.010 0.024 -0.021 -0.089 0.065 0.007 0.016 0.783

Marg1 -0.002 0.001 -0.009 0.001 -0.027 0.005 0.025 0.025 -0.017 -0.019

Marg2 0.053 -0.005 0.045 -0.009 0.003 0.007 -0.042 -0.034 -0.023 -0.003

Marg3 -0.013 0.045 0.001 -0.054 0.044 -0.069 -0.030 -0.048 0.086 0.039

Marg4 -0.007 -0.041 0.056 0.030 0.013 -0.054 0.017 0.026 -0.060 -0.036

Marg5 -0.042 -0.014 0.011 0.043 0.039 0.021 -0.026 -0.038 0.036 -0.042

Marg6 -0.061 0.006 -0.067 -0.031 -0.002 0.066 0.024 0.027 -0.019 0.057

Marg7 -0.010 0.032 0.008 0.039 -0.079 0.069 -0.024 -0.004 0.011 0.005

Str1 0.027 0.008 -0.029 -0.074 0.025 -0.016 0.055 0.018 0.014 -0.002

Str2 0.004 -0.006 0.015 0.030 -0.061 -0.003 -0.049 -0.059 0.032 0.040

Str3 -0.030 -0.031 -0.029 0.008 -0.028 0.020 0.052 -0.007 -0.010 0.028

Str4 -0.041 0.015 0.061 0.054 -0.023 0.013 -0.056 0.031 -0.075 -0.060

Str5 0.064 0.009 0.024 -0.017 0.058 0.002 0.003 -0.024 -0.051 -0.006

Ang1 -0.038 -0.042 -0.030 0.026 0.019 -0.061 0.013 0.057 0.086 -0.008

Ang2 -0.028 0.022 -0.019 -0.025 0.035 0.003 -0.012 0.010 -0.042 0.024

Ang3 0.081 -0.019 -0.015 0.020 -0.006 0.015 0.061 0.002 -0.022 -0.025

Marg1 Marg2 Marg3 Marg4 Marg5 Marg6 Marg7 Str1 Str2 Str3

Obj1 -0.002 0.053 -0.013 -0.007 -0.042 -0.061 -0.010 0.027 0.004 -0.030

Obj2 0.001 -0.005 0.045 -0.041 -0.014 0.006 0.032 0.008 -0.006 -0.031

Obj3 -0.009 0.045 0.001 0.056 0.011 -0.067 0.008 -0.029 0.015 -0.029

Obj4 0.001 -0.009 -0.054 0.030 0.043 -0.031 0.039 -0.074 0.030 0.008

Obj5 -0.027 0.003 0.044 0.013 0.039 -0.002 -0.079 0.025 -0.061 -0.028

Obj6 0.005 0.007 -0.069 -0.054 0.021 0.066 0.069 -0.016 -0.003 0.020

Obj7 0.025 -0.042 -0.030 0.017 -0.026 0.024 -0.024 0.055 -0.049 0.052

Obj8 0.025 -0.034 -0.048 0.026 -0.038 0.027 -0.004 0.018 -0.059 -0.007

Obj9 -0.017 -0.023 0.086 -0.060 0.036 -0.019 0.011 0.014 0.032 -0.010

Obj10 -0.019 -0.003 0.039 -0.036 -0.042 0.057 0.005 -0.002 0.040 0.028

Marg1 0.381 -0.030 0.013 -0.002 -0.017 0.026 -0.011 0.003 -0.004 0.026

Marg2 -0.030 0.589 0.019 -0.057 0.053 -0.009 0.069 -0.005 -0.007 -0.027

Marg3 0.013 0.019 0.723 0.063 -0.049 0.007 -0.016 0.018 0.030 -0.036

Marg4 -0.002 -0.057 0.063 0.719 0.013 -0.006 -0.055 0.001 0.094 -0.004

Marg5 -0.017 0.053 -0.049 0.013 0.674 0.016 0.044 -0.043 -0.023 0.020

Marg6 0.026 -0.009 0.007 -0.006 0.016 0.729 -0.005 -0.012 0.024 0.050

Marg7 -0.011 0.069 -0.016 -0.055 0.044 -0.005 0.819 0.027 -0.051 -0.036

Str1 0.003 -0.005 0.018 0.001 -0.043 -0.012 0.027 0.637 0.009 0.001

Str2 -0.004 -0.007 0.030 0.094 -0.023 0.024 -0.051 0.009 0.821 0.012

Str3 0.026 -0.027 -0.036 -0.004 0.020 0.050 -0.036 0.001 0.012 0.724

Str4 0.009 -0.027 -0.021 0.018 0.031 0.048 -0.039 -0.015 0.057 0.063

Str5 -0.015 0.035 -0.010 -0.054 0.037 -0.040 0.032 0.010 -0.048 -0.029

Ang1 -0.014 -0.010 0.016 0.002 -0.053 -0.031 0.049 -0.028 -0.007 -0.013

Ang2 -0.007 -0.038 0.031 0.041 -0.018 0.024 -0.018 0.025 -0.025 -0.012

Ang3 0.048 0.036 -0.077 -0.007 0.012 -0.069 -0.069 -0.018 0.013 0.024

Str4 Str5 Ang1 Ang2 Ang3

Obj1 -0.041 0.064 -0.038 -0.028 0.081

Obj2 0.015 0.009 -0.042 0.022 -0.019

Obj3 0.061 0.024 -0.030 -0.019 -0.015

Obj4 0.054 -0.017 0.026 -0.025 0.020

Obj5 -0.023 0.058 0.019 0.035 -0.006

Obj6 0.013 0.002 -0.061 0.003 0.015

Obj7 -0.056 0.003 0.013 -0.012 0.061

Obj8 0.031 -0.024 0.057 0.010 0.002

Obj9 -0.075 -0.051 0.086 -0.042 -0.022

Obj10 -0.060 -0.006 -0.008 0.024 -0.025

Marg1 0.009 -0.015 -0.014 -0.007 0.048

Marg2 -0.027 0.035 -0.010 -0.038 0.036

Marg3 -0.021 -0.010 0.016 0.031 -0.077

Marg4 0.018 -0.054 0.002 0.041 -0.007

Marg5 0.031 0.037 -0.053 -0.018 0.012

Marg6 0.048 -0.040 -0.031 0.024 -0.069

Marg7 -0.039 0.032 0.049 -0.018 -0.069

Str1 -0.015 0.010 -0.028 0.025 -0.018

Str2 0.057 -0.048 -0.007 -0.025 0.013

Str3 0.063 -0.029 -0.013 -0.012 0.024

Str4 0.833 -0.034 0.017 0.013 -0.051

Str5 -0.034 0.822 0.036 0.009 -0.034

Ang1 0.017 0.036 0.777 0.025 0.051

Ang2 0.013 0.009 0.025 0.569 -0.006

Ang3 -0.051 -0.034 0.051 -0.006 0.805There are several strategies to evaluate this matrix.

- Compare the size of the residuals to the original correlations.

- The worst possible model would occur if we extracted no factors, and the residuals are the size of the original correlations.

- If the correlations were small to start with, we expect small residuals.

- If the correlations were large to start with, the residuals will be relatively larger (this is not terribly problematic).

- Comparing residuals requires squaring them first (because residuals can be both positive and negative)

- The sum of the squared residuals divided by the sum of the squared correlations is an estimate of model fit.

- Subtracting this from 1.0 means that it ranges from 0 to 1.

- Values > .95 are an indication of good fit.

- Subtracting this from 1.0 means that it ranges from 0 to 1.

- The sum of the squared residuals divided by the sum of the squared correlations is an estimate of model fit.

Analyzing the residuals means we need to extract only the upper right of the triangle them into an object. We can do this in steps.

grmsPAF2_resids <- psych::factor.residuals(GRMSr, grmsPAF2$loadings) #first extract the resids

grmsPAF2_resids <- as.matrix(grmsPAF2_resids[upper.tri(grmsPAF2_resids)]) #the object has the residuals in a single column

head(grmsPAF2_resids) [,1]

[1,] 0.05128890

[2,] -0.01969873

[3,] 0.03041703

[4,] -0.02971380

[5,] -0.06927144

[6,] -0.03556489One criteria of residual analysis is to see how many residuals there are that are greater than an absolute value of 0.05. The result will be a single column with TRUE if it is > |0.05| and false if it is smaller. The sum function will tell us how many TRUE responses are in the matrix. Further, we can write script to obtain the proportion of total number of residuals.

[1] 57[1] 0.19We learn that there are 57 residuals greater than the absolute value of 0.05. This represents 19% of the total number of residuals.

There are no hard rules about what proportion of residuals can be greater than 0.05. Field recommends that it stay below 50% (Field, 2012).

Another approach to analyzing residuals is to look at their mean. Because of the +/- valences, we need to square them (to eliminate the negative), take the average, then take the square root.

[1] 0.036While there are no clear guidelines to interpret these, one recommendation is to consider extracting more factors if the value is higher than 0.08 (Field, 2012).

Finally, we expect our residuals to be normally distributed. A histogram can help us inspect the distribution.

Not bad! It looks reasonably normal. No outliers.

9.5.2.2 Quick recap of how to evaluate the # of factors we extracted

- If fewer than 30 variables, the eigenvalue > 1 (Kaiser’s) critera is fine, so long as communalities are all > .70.

- If sample size > 250 and the average communalities are .6 or greater, this is acceptable

- When N > 200, the scree plot can be used.

- Regarding residuals:

- Fewer than 50% should have absolute values > 0.05.

- Model fit should be > 0.90.

9.5.3 Factor Rotation

Here’s a snip of our location in the PAF workflow.

The original solution of a principal components or principal axis factor analysis is a set of vectors that best account for the observed covariance or correlation matrix. Each additional component or factor accounts for progressively less and less variance. The solution is efficient (yay) but difficult to interpret (boo).

Thanks to Thurstone’s five rules toward a simple structure (circa 1947), interpretation of a matrix is facilitated by rotation (multiplying a matrix by a matrix of orthogonal vectors that preserve the communalities of each variable). Both the original matrix and the solution will be orthogonal.

Parsimony becomes a statistical consideration (an equation, in fact) and goal and is maximized when each variable has a 1.0 loading on one factor and the rest are zero.

Different rotation strategies emphasize different goals related to parsimony:

Quartimax seeks to maximize the notion of variable parsimony (each variable is associated with one factor) and permits the rotation toward a general factor (ignoring smaller factors). Varimax maximizes the variance of squared loadings taken over items instead of over factors and avoids a general factor.

Rotation improves the interpretation of the factor by maximizing the loading on each variable on one of the extracted factors while minimizing the loading on all other factors. Rotation works by changing the absolute values of the variables while keeping their differential values constant.

There are two big choices (to be made on theoretical grounds):

- Orthogonal rotation if you think that the factors are independent/unrelated.

- varimax is the most common orthogonal rotation

- Oblique rotation if you think that the factors are related/correlated.

- oblimin and promax are common oblique rotations

9.5.3.1 Orthogonal rotation

# grmsPAF2ORTH <- psych::fa(GRMSr, nfactors = 4, fm = 'pa', rotate =

# 'varimax')

grmsPAF2ORTH <- psych::fa(dfGRMS, nfactors = 4, fm = "pa", rotate = "varimax")

grmsPAF2ORTHFactor Analysis using method = pa

Call: psych::fa(r = dfGRMS, nfactors = 4, rotate = "varimax", fm = "pa")

Standardized loadings (pattern matrix) based upon correlation matrix

PA1 PA2 PA3 PA4 h2 u2 com

Obj1 0.49 0.14 0.16 0.04 0.29 0.71 1.4

Obj2 0.51 0.18 0.13 0.09 0.32 0.68 1.4

Obj3 0.45 0.14 0.15 0.07 0.25 0.75 1.5

Obj4 0.54 0.19 0.04 0.03 0.33 0.67 1.3

Obj5 0.47 0.15 0.19 -0.02 0.28 0.72 1.6

Obj6 0.39 0.05 0.06 0.26 0.22 0.78 1.8

Obj7 0.41 0.27 0.16 0.10 0.27 0.73 2.2

Obj8 0.52 0.23 0.03 -0.18 0.35 0.65 1.7

Obj9 0.38 0.07 0.19 -0.01 0.19 0.81 1.5

Obj10 0.44 0.06 0.06 0.12 0.22 0.78 1.2

Marg1 0.13 0.77 0.08 0.02 0.62 0.38 1.1

Marg2 0.13 0.53 0.30 0.15 0.41 0.59 1.9

Marg3 0.18 0.46 0.16 0.08 0.28 0.72 1.6

Marg4 0.26 0.45 -0.01 0.13 0.28 0.72 1.8

Marg5 0.23 0.49 0.16 0.07 0.33 0.67 1.7

Marg6 0.35 0.35 0.08 0.16 0.27 0.73 2.5

Marg7 0.14 0.38 0.07 0.10 0.18 0.82 1.5

Str1 0.16 0.16 0.55 -0.08 0.36 0.64 1.4

Str2 0.24 0.09 0.30 0.16 0.18 0.82 2.7

Str3 0.07 0.07 0.51 0.06 0.28 0.72 1.1

Str4 0.14 0.04 0.38 -0.03 0.17 0.83 1.3

Str5 0.11 0.09 0.36 0.16 0.18 0.82 1.8

Ang1 0.02 0.18 0.35 0.25 0.22 0.78 2.4

Ang2 0.05 0.20 0.06 0.62 0.43 0.57 1.2

Ang3 0.10 0.26 0.15 0.31 0.19 0.81 2.7

PA1 PA2 PA3 PA4

SS loadings 2.60 2.26 1.41 0.83

Proportion Var 0.10 0.09 0.06 0.03

Cumulative Var 0.10 0.19 0.25 0.28

Proportion Explained 0.37 0.32 0.20 0.12

Cumulative Proportion 0.37 0.68 0.88 1.00

Mean item complexity = 1.7

Test of the hypothesis that 4 factors are sufficient.

df null model = 300 with the objective function = 4.89 with Chi Square = 1217.51

df of the model are 206 and the objective function was 0.77

The root mean square of the residuals (RMSR) is 0.04

The df corrected root mean square of the residuals is 0.04

The harmonic n.obs is 259 with the empirical chi square 202.41 with prob < 0.56

The total n.obs was 259 with Likelihood Chi Square = 189.19 with prob < 0.79

Tucker Lewis Index of factoring reliability = 1.027

RMSEA index = 0 and the 90 % confidence intervals are 0 0.018

BIC = -955.52

Fit based upon off diagonal values = 0.97

Measures of factor score adequacy

PA1 PA2 PA3 PA4

Correlation of (regression) scores with factors 0.84 0.85 0.76 0.72

Multiple R square of scores with factors 0.71 0.72 0.58 0.52

Minimum correlation of possible factor scores 0.42 0.45 0.17 0.03Essentially, we have the same information as before, except that loadings are calculated after rotation (which adjusts the absolute values of the factor loadings while keeping their differential vales constant). Our communality and uniqueness values remain the same. The eigenvalues (SS loadings) should even out, but the proportion of variance explained and cumulative variance (28%) will remain the same.

The print.psych() function facilitates interpretation and prioritizes the information about which we care most:

- cut displays loadings above .3, this allows us to see

- if some items load on no factors

- if some items have cross-loadings (and their relative weights)

- sort reorders the loadings to make it clearer (considering ties, to the best of its ability) to which factor/scale it belongs

Factor Analysis using method = pa

Call: psych::fa(r = dfGRMS, nfactors = 4, rotate = "varimax", fm = "pa")

Standardized loadings (pattern matrix) based upon correlation matrix

item PA1 PA2 PA3 PA4 h2 u2 com

Obj4 4 0.54 0.33 0.67 1.3

Obj8 8 0.52 0.35 0.65 1.7

Obj2 2 0.51 0.32 0.68 1.4

Obj1 1 0.49 0.29 0.71 1.4

Obj5 5 0.47 0.28 0.72 1.6

Obj3 3 0.45 0.25 0.75 1.5

Obj10 10 0.44 0.22 0.78 1.2

Obj7 7 0.41 0.27 0.73 2.2

Obj6 6 0.39 0.22 0.78 1.8

Obj9 9 0.38 0.19 0.81 1.5

Marg1 11 0.77 0.62 0.38 1.1

Marg2 12 0.53 0.41 0.59 1.9

Marg5 15 0.49 0.33 0.67 1.7

Marg3 13 0.46 0.28 0.72 1.6

Marg4 14 0.45 0.28 0.72 1.8

Marg7 17 0.38 0.18 0.82 1.5

Marg6 16 0.35 0.35 0.27 0.73 2.5

Str1 18 0.55 0.36 0.64 1.4

Str3 20 0.51 0.28 0.72 1.1

Str4 21 0.38 0.17 0.83 1.3

Str5 22 0.36 0.18 0.82 1.8

Ang1 23 0.35 0.22 0.78 2.4

Str2 19 0.30 0.18 0.82 2.7

Ang2 24 0.62 0.43 0.57 1.2

Ang3 25 0.31 0.19 0.81 2.7

PA1 PA2 PA3 PA4

SS loadings 2.60 2.26 1.41 0.83

Proportion Var 0.10 0.09 0.06 0.03

Cumulative Var 0.10 0.19 0.25 0.28

Proportion Explained 0.37 0.32 0.20 0.12

Cumulative Proportion 0.37 0.68 0.88 1.00

Mean item complexity = 1.7

Test of the hypothesis that 4 factors are sufficient.

df null model = 300 with the objective function = 4.89 with Chi Square = 1217.51

df of the model are 206 and the objective function was 0.77

The root mean square of the residuals (RMSR) is 0.04

The df corrected root mean square of the residuals is 0.04

The harmonic n.obs is 259 with the empirical chi square 202.41 with prob < 0.56

The total n.obs was 259 with Likelihood Chi Square = 189.19 with prob < 0.79

Tucker Lewis Index of factoring reliability = 1.027

RMSEA index = 0 and the 90 % confidence intervals are 0 0.018

BIC = -955.52

Fit based upon off diagonal values = 0.97

Measures of factor score adequacy

PA1 PA2 PA3 PA4

Correlation of (regression) scores with factors 0.84 0.85 0.76 0.72

Multiple R square of scores with factors 0.71 0.72 0.58 0.52

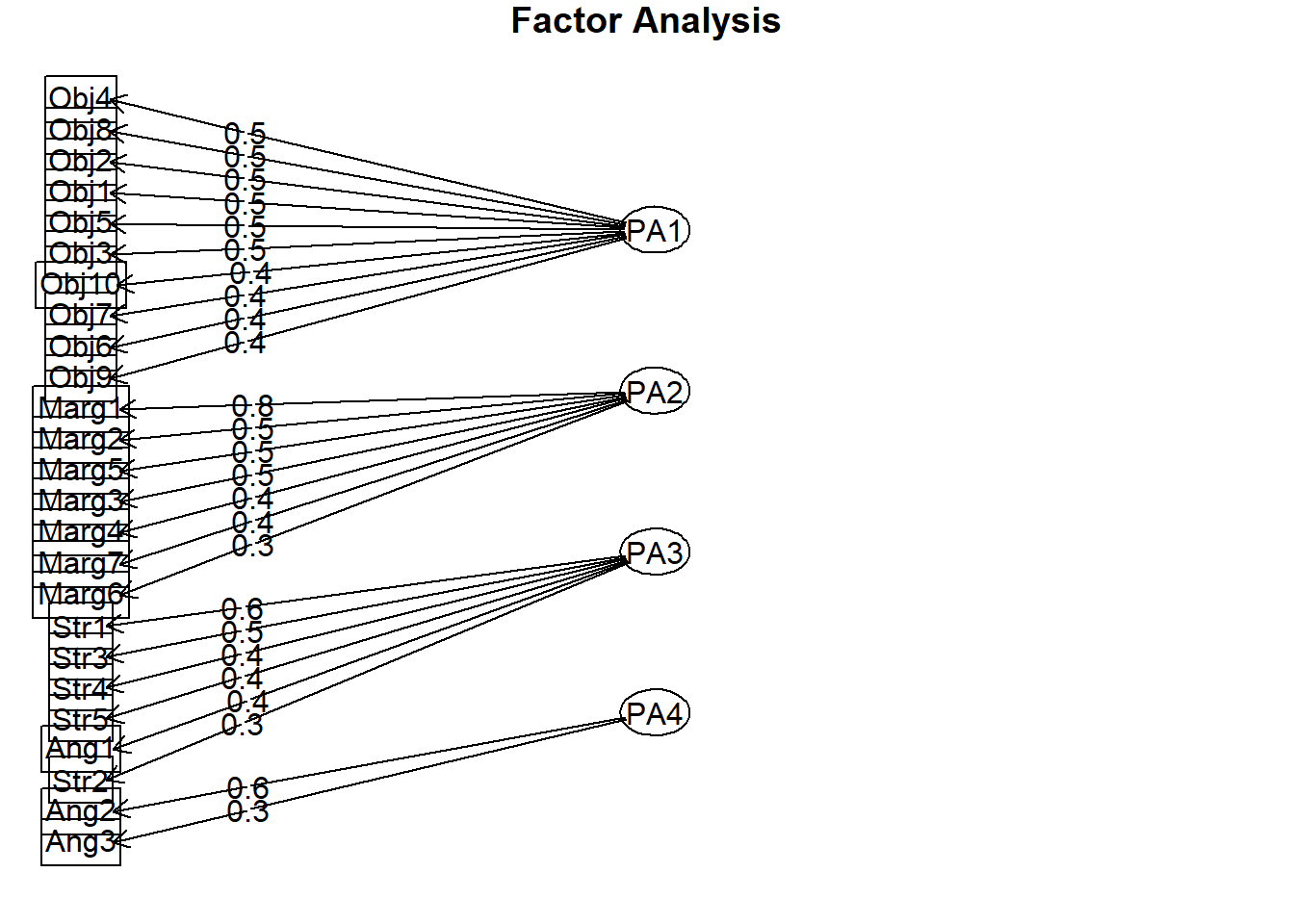

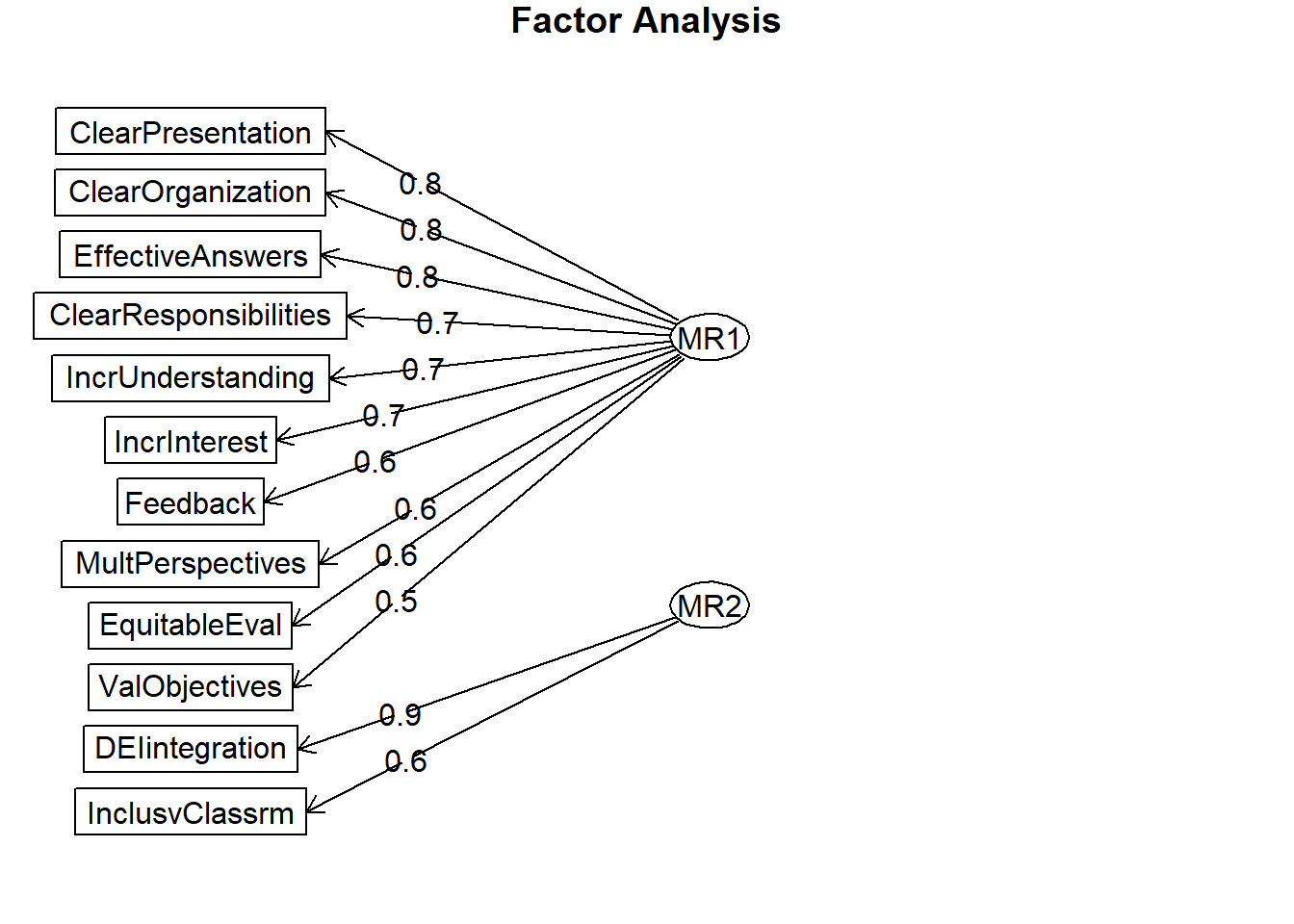

Minimum correlation of possible factor scores 0.42 0.45 0.17 0.03In the unrotated solution, most variables loaded on the first factor. After rotation, there are four clear factors/scales. Further, there is clear (or at least reasonable) factor/scale membership for each item and few cross-loadings. As with the PAC in the previous lesson, Ang1 is not clearly loading on the Angry scale.

If this were a new scale and we had not yet established ideas for subscales, the next step would be to look back at the items, themselves, and try to name the scales/factors. If our scale construction included a priori/planned subscales, we would hope the items would where they were hypothesized to do so. As we noted with the Ang1 item, our simulated data nearly replicated the item membership onto the four scales that Lewis and Neville (J. A. Lewis & Neville, 2015) reported in the article.

- Assumptions of Beauty and Sexual Objectification

- Silenced and Marginalized

- Strong Woman Stereotype

- Angry Woman Stereotype

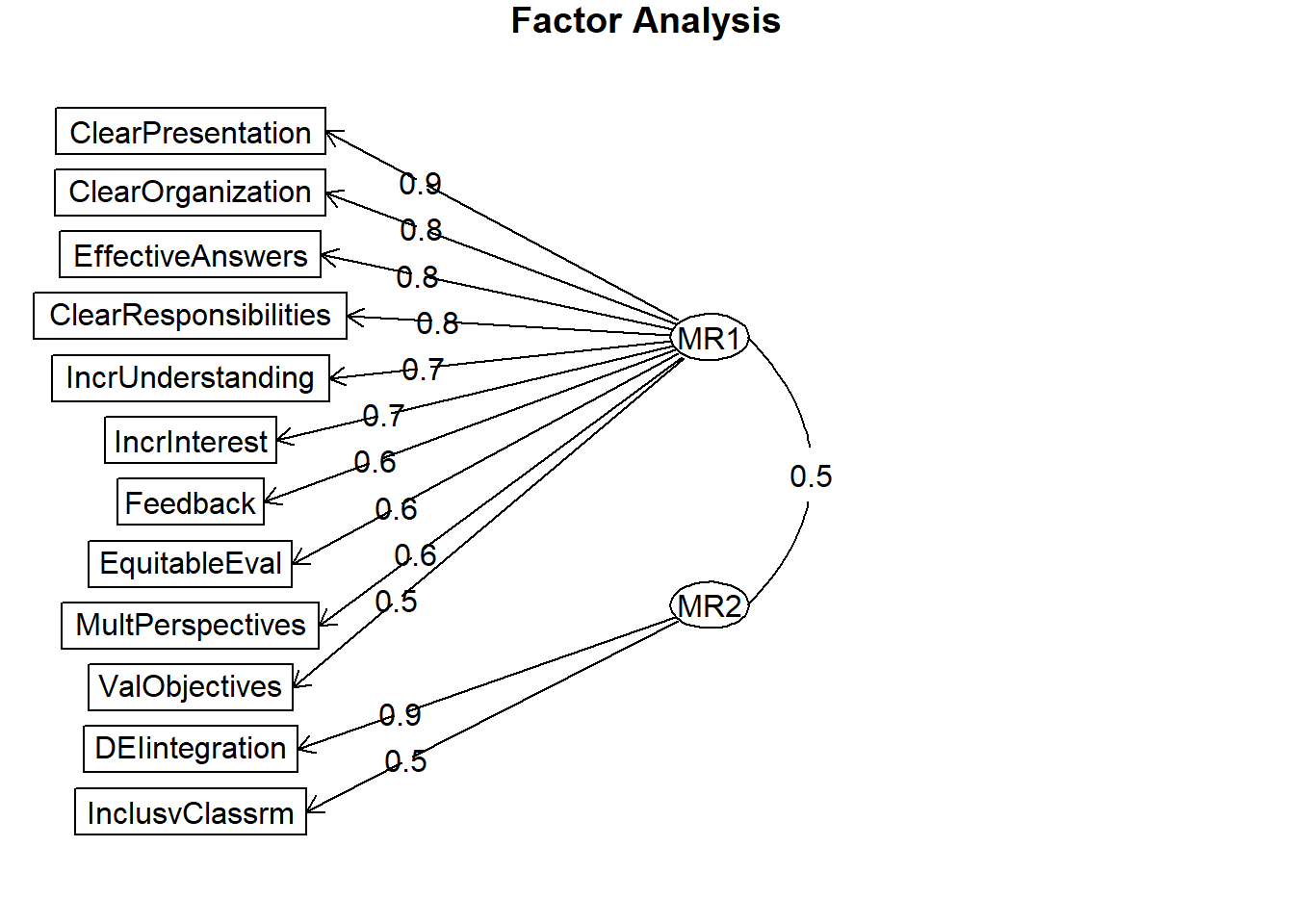

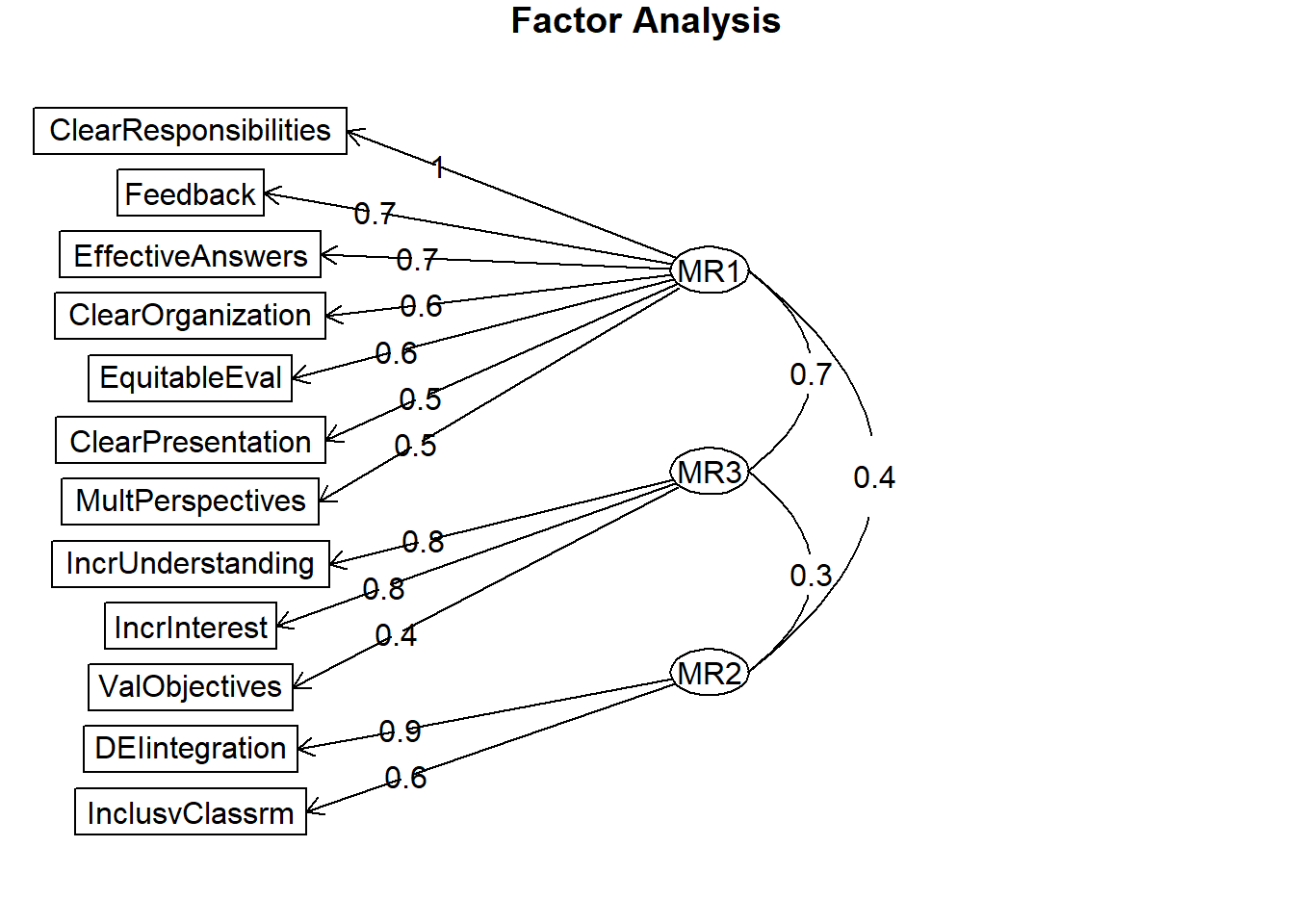

We can also create a figure of the result. Note the direction of the arrows from the factor (latent variable) to the items in PAF – in PCA the arrows went from item to component.

We can extract the factor loadings and write them to a table. This can be useful in preparing an APA style table for a manuscript or presentation.

# names(grmsPAF2ORTH)

pafORTH_table <- round(grmsPAF2ORTH$loadings, 3)

write.table(pafORTH_table, file = "pafORTH_table.csv", sep = ",", col.names = TRUE,

row.names = FALSE)

pafORTH_table

Loadings:

PA1 PA2 PA3 PA4

Obj1 0.495 0.144 0.160

Obj2 0.513 0.179 0.131

Obj3 0.452 0.140 0.147

Obj4 0.536 0.191

Obj5 0.469 0.154 0.189

Obj6 0.391 0.257

Obj7 0.408 0.265 0.164

Obj8 0.517 0.232 -0.177

Obj9 0.382 0.187

Obj10 0.442 0.122

Marg1 0.126 0.772

Marg2 0.126 0.534 0.296 0.151

Marg3 0.175 0.462 0.161

Marg4 0.256 0.446 0.130

Marg5 0.230 0.493 0.162

Marg6 0.345 0.348 0.157

Marg7 0.142 0.381 0.104

Str1 0.160 0.161 0.553

Str2 0.236 0.301 0.160

Str3 0.512

Str4 0.141 0.381

Str5 0.115 0.362 0.161

Ang1 0.182 0.354 0.254

Ang2 0.198 0.621

Ang3 0.104 0.258 0.154 0.307

PA1 PA2 PA3 PA4

SS loadings 2.600 2.257 1.414 0.830

Proportion Var 0.104 0.090 0.057 0.033

Cumulative Var 0.104 0.194 0.251 0.2849.5.3.2 Oblique rotation

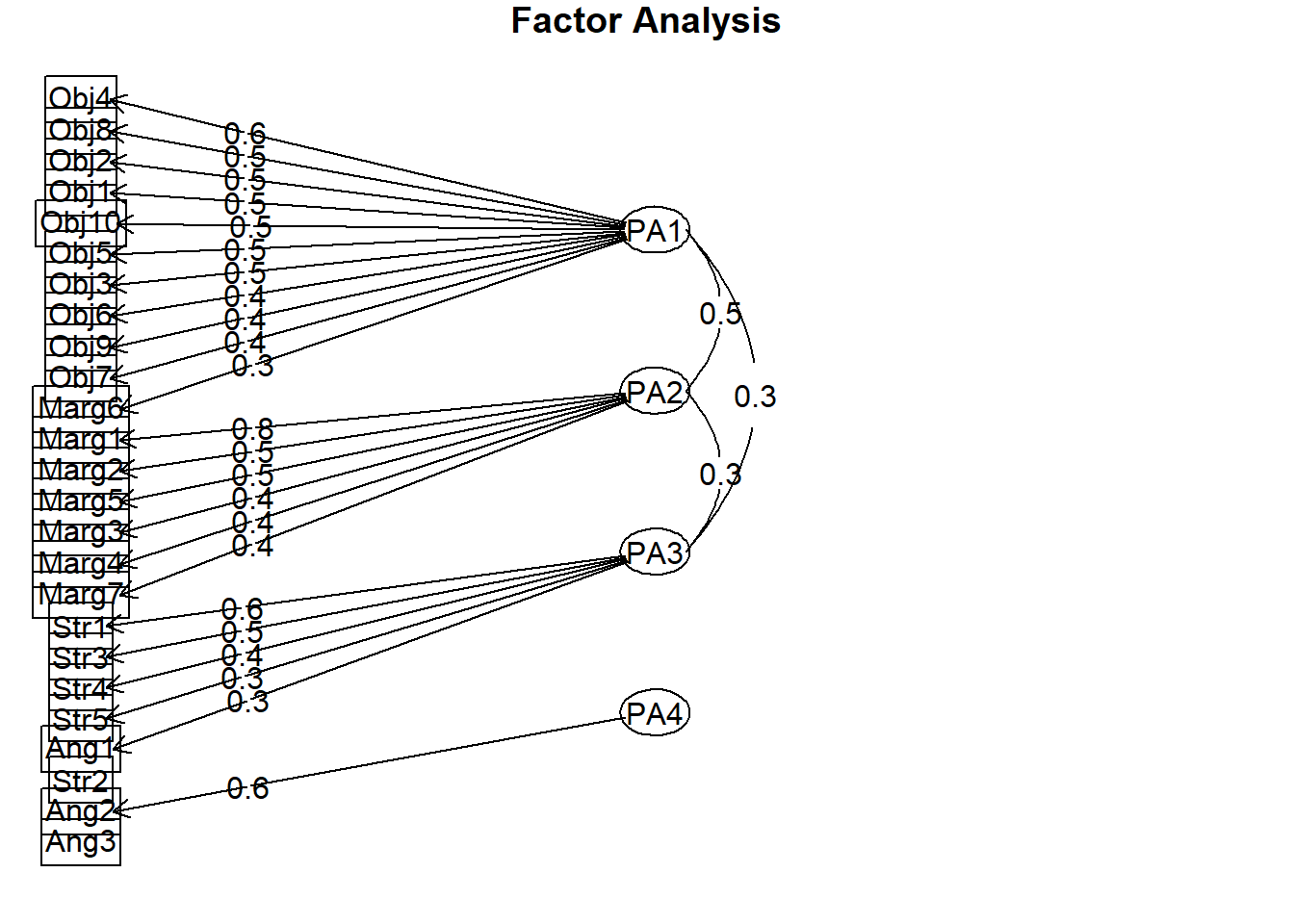

Whereas the orthogonal rotation sought to maximize the independence/unrelatedness of the factors, an oblique rotation will allow them to be correlated. Researchers often explore both solutions but only report one.

# grmsPAF2obl <- psych::fa(GRMSr, nfactors = 4, fm = 'pa', rotate =

# 'oblimin')

grmsPAF2obl <- psych::fa(dfGRMS, nfactors = 4, fm = "pa", rotate = "oblimin")Loading required namespace: GPArotationFactor Analysis using method = pa

Call: psych::fa(r = dfGRMS, nfactors = 4, rotate = "oblimin", fm = "pa")

Standardized loadings (pattern matrix) based upon correlation matrix

PA1 PA2 PA3 PA4 h2 u2 com

Obj1 0.51 0.00 0.08 0.00 0.29 0.71 1.1

Obj2 0.53 0.02 0.04 0.05 0.32 0.68 1.0

Obj3 0.46 0.00 0.07 0.04 0.25 0.75 1.1

Obj4 0.56 0.05 -0.05 0.00 0.33 0.67 1.0

Obj5 0.47 0.02 0.12 -0.06 0.28 0.72 1.2

Obj6 0.43 -0.11 -0.02 0.25 0.22 0.78 1.8

Obj7 0.38 0.14 0.08 0.06 0.27 0.73 1.4

Obj8 0.53 0.15 -0.05 -0.22 0.35 0.65 1.5

Obj9 0.39 -0.05 0.14 -0.04 0.19 0.81 1.3

Obj10 0.48 -0.08 -0.02 0.10 0.22 0.78 1.2

Marg1 -0.03 0.81 -0.03 -0.02 0.62 0.38 1.0

Marg2 -0.01 0.49 0.22 0.12 0.41 0.59 1.5

Marg3 0.08 0.43 0.09 0.05 0.28 0.72 1.2

Marg4 0.20 0.40 -0.11 0.11 0.28 0.72 1.8

Marg5 0.14 0.45 0.08 0.03 0.33 0.67 1.3

Marg6 0.31 0.25 -0.02 0.13 0.27 0.73 2.3

Marg7 0.07 0.36 0.00 0.08 0.18 0.82 1.2

Str1 0.07 0.08 0.56 -0.12 0.36 0.64 1.2

Str2 0.21 -0.04 0.27 0.14 0.18 0.82 2.5

Str3 -0.01 -0.02 0.53 0.04 0.28 0.72 1.0

Str4 0.10 -0.04 0.39 -0.06 0.17 0.83 1.2

Str5 0.06 -0.01 0.35 0.14 0.18 0.82 1.4

Ang1 -0.07 0.10 0.33 0.24 0.22 0.78 2.1

Ang2 0.01 0.07 -0.02 0.64 0.43 0.57 1.0

Ang3 0.04 0.18 0.09 0.30 0.19 0.81 1.9

PA1 PA2 PA3 PA4

SS loadings 2.79 2.03 1.37 0.91

Proportion Var 0.11 0.08 0.05 0.04

Cumulative Var 0.11 0.19 0.25 0.28

Proportion Explained 0.39 0.29 0.19 0.13

Cumulative Proportion 0.39 0.68 0.87 1.00

With factor correlations of

PA1 PA2 PA3 PA4

PA1 1.00 0.46 0.34 0.17

PA2 0.46 1.00 0.32 0.27

PA3 0.34 0.32 1.00 0.19

PA4 0.17 0.27 0.19 1.00

Mean item complexity = 1.4

Test of the hypothesis that 4 factors are sufficient.

df null model = 300 with the objective function = 4.89 with Chi Square = 1217.51

df of the model are 206 and the objective function was 0.77

The root mean square of the residuals (RMSR) is 0.04

The df corrected root mean square of the residuals is 0.04

The harmonic n.obs is 259 with the empirical chi square 202.41 with prob < 0.56

The total n.obs was 259 with Likelihood Chi Square = 189.19 with prob < 0.79

Tucker Lewis Index of factoring reliability = 1.027

RMSEA index = 0 and the 90 % confidence intervals are 0 0.018

BIC = -955.52

Fit based upon off diagonal values = 0.97

Measures of factor score adequacy

PA1 PA2 PA3 PA4

Correlation of (regression) scores with factors 0.89 0.89 0.80 0.75

Multiple R square of scores with factors 0.79 0.79 0.65 0.57

Minimum correlation of possible factor scores 0.59 0.58 0.30 0.14We can make it a little easier to interpret by removing all factor loadings below .30.

Factor Analysis using method = pa

Call: psych::fa(r = dfGRMS, nfactors = 4, rotate = "oblimin", fm = "pa")

Standardized loadings (pattern matrix) based upon correlation matrix

item PA1 PA2 PA3 PA4 h2 u2 com

Obj4 4 0.56 0.33 0.67 1.0

Obj8 8 0.53 0.35 0.65 1.5

Obj2 2 0.53 0.32 0.68 1.0

Obj1 1 0.51 0.29 0.71 1.1

Obj10 10 0.48 0.22 0.78 1.2

Obj5 5 0.47 0.28 0.72 1.2

Obj3 3 0.46 0.25 0.75 1.1

Obj6 6 0.43 0.22 0.78 1.8

Obj9 9 0.39 0.19 0.81 1.3

Obj7 7 0.38 0.27 0.73 1.4

Marg6 16 0.31 0.27 0.73 2.3

Marg1 11 0.81 0.62 0.38 1.0

Marg2 12 0.49 0.41 0.59 1.5

Marg5 15 0.45 0.33 0.67 1.3

Marg3 13 0.43 0.28 0.72 1.2

Marg4 14 0.40 0.28 0.72 1.8

Marg7 17 0.36 0.18 0.82 1.2

Str1 18 0.56 0.36 0.64 1.2

Str3 20 0.53 0.28 0.72 1.0

Str4 21 0.39 0.17 0.83 1.2

Str5 22 0.35 0.18 0.82 1.4

Ang1 23 0.33 0.22 0.78 2.1

Str2 19 0.18 0.82 2.5

Ang2 24 0.64 0.43 0.57 1.0

Ang3 25 0.19 0.81 1.9

PA1 PA2 PA3 PA4

SS loadings 2.79 2.03 1.37 0.91

Proportion Var 0.11 0.08 0.05 0.04

Cumulative Var 0.11 0.19 0.25 0.28

Proportion Explained 0.39 0.29 0.19 0.13

Cumulative Proportion 0.39 0.68 0.87 1.00

With factor correlations of

PA1 PA2 PA3 PA4

PA1 1.00 0.46 0.34 0.17

PA2 0.46 1.00 0.32 0.27

PA3 0.34 0.32 1.00 0.19

PA4 0.17 0.27 0.19 1.00

Mean item complexity = 1.4

Test of the hypothesis that 4 factors are sufficient.

df null model = 300 with the objective function = 4.89 with Chi Square = 1217.51

df of the model are 206 and the objective function was 0.77

The root mean square of the residuals (RMSR) is 0.04

The df corrected root mean square of the residuals is 0.04

The harmonic n.obs is 259 with the empirical chi square 202.41 with prob < 0.56

The total n.obs was 259 with Likelihood Chi Square = 189.19 with prob < 0.79

Tucker Lewis Index of factoring reliability = 1.027

RMSEA index = 0 and the 90 % confidence intervals are 0 0.018

BIC = -955.52

Fit based upon off diagonal values = 0.97

Measures of factor score adequacy

PA1 PA2 PA3 PA4

Correlation of (regression) scores with factors 0.89 0.89 0.80 0.75

Multiple R square of scores with factors 0.79 0.79 0.65 0.57

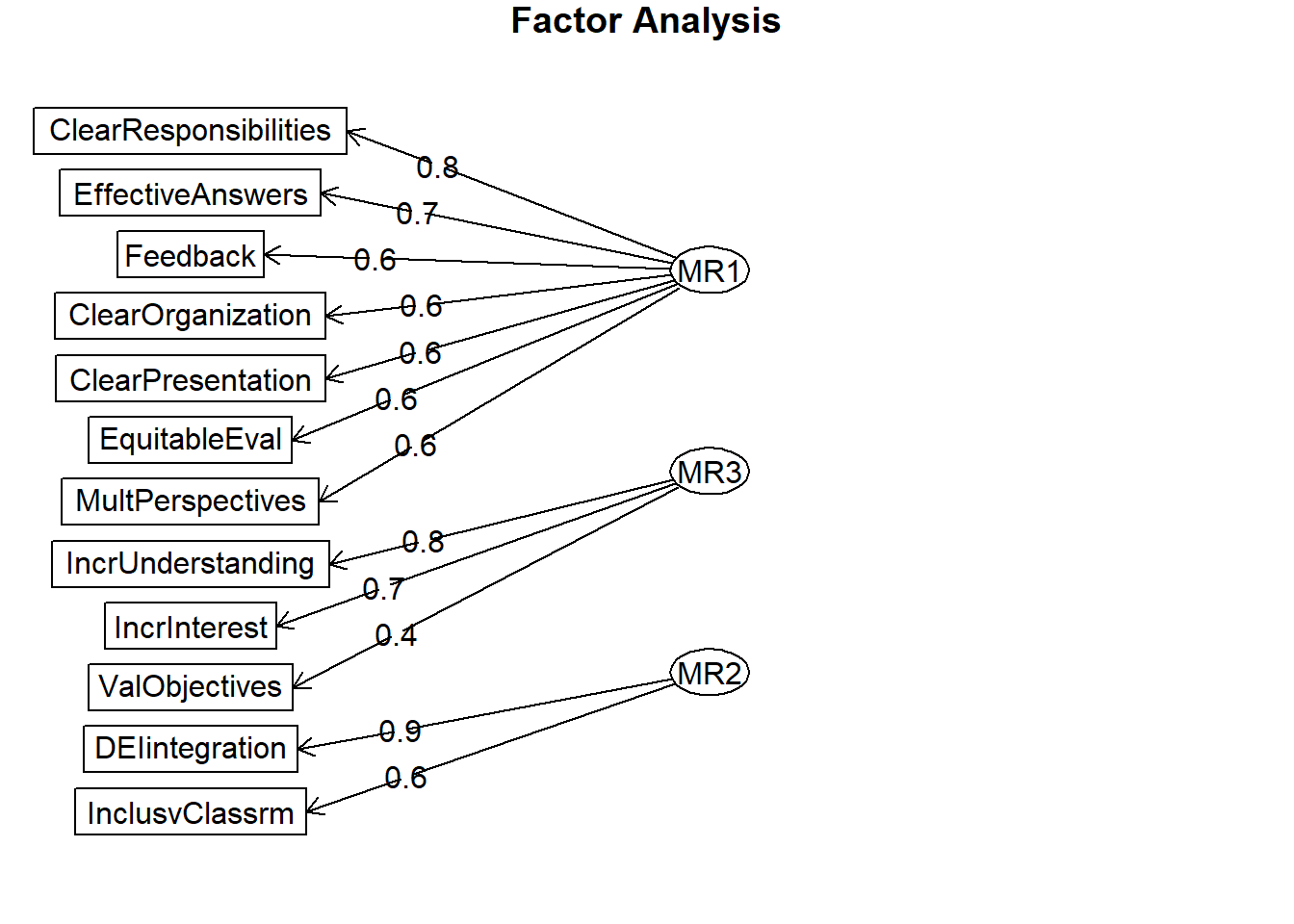

Minimum correlation of possible factor scores 0.59 0.58 0.30 0.14In this rotation, the Angry scale falls apart. As before, Ang1 is loading onto Strong. Additionally, Ang3 has factor loadings that fall below .30, and therefore do not appear in this table. Additionally, because our specification included “sort=TRUE”, the relative weights wiggled around and so the items are listed in a different order than in the orthogonal rotation.

The oblique rotation allows us to see the correlation between the factors/scales. This was not available in the orthogonal rotation because the assumption of the orthogonal/varimax rotation is that the scales/factors are uncorrelated; hence in the analysis they were fixed to 0.0. The correlations from our simulated data range from .19 to .46.

Of course there is always a little complexity. In oblique rotations, there is a distinction between the pattern matrix (which reports factor loadings and is comparable to the matrix we interpreted for the orthogonal rotation) and the structure matrix (takes into account the relationship between the factors/scales – it is a product of the pattern matrix and the matrix containing the correlation coefficients between the factors/scales). Most interpret the pattern matrix because it is simpler; however, it could be that values in the pattern matrix are suppressed because of relations between the factors. Therefore, the structure matrix can be a useful check and some editors will request it.

Obtaining the structure matrix requires two steps. First, multiply the factor loadings with the phi matrix.

PA1 PA2 PA3 PA4

Obj1 0.5351558 0.2587471 0.2578729 0.10672133

Obj2 0.5596959 0.2939415 0.2397101 0.15920737

Obj3 0.4931574 0.2456837 0.2386994 0.13178696

Obj4 0.5676937 0.2946510 0.1536862 0.09619880

Obj5 0.5143469 0.2639663 0.2797209 0.05320390

Obj6 0.4083011 0.1433380 0.1363673 0.28476763

Obj7 0.4891359 0.3627793 0.2697523 0.18376868

Obj8 0.5462750 0.3181931 0.1337799 -0.09863944

Obj9 0.4098436 0.1673842 0.2524221 0.04306615

Obj10 0.4526040 0.1614974 0.1403757 0.15874466

Marg1 0.3385023 0.7854134 0.2197285 0.18936979

Marg2 0.3164379 0.5904981 0.3994360 0.29125182

Marg3 0.3190254 0.5084002 0.2613340 0.19797897

Marg4 0.3686089 0.4872840 0.1082789 0.22714662

Marg5 0.3780702 0.5477661 0.2747713 0.19177240

Marg6 0.4417130 0.4228182 0.1911285 0.24703507

Marg7 0.2525475 0.4115888 0.1520707 0.19123240

Str1 0.2752766 0.2583504 0.5843472 0.02598261

Str2 0.3031342 0.1787399 0.3515298 0.21686734

Str3 0.1645811 0.1543190 0.5235449 0.13423477

Str4 0.1999438 0.1116093 0.3967457 0.02531912

Str5 0.1976930 0.1656974 0.3929920 0.21880162

Ang1 0.1344340 0.2417716 0.3896315 0.32188168

Ang2 0.1467619 0.2423578 0.1284210 0.65279494

Ang3 0.2088530 0.3073000 0.2225652 0.37115411Next, use Field’s (2012) function to produce the matrix.

# Field's function to produce the structure matrix

factor.structure <- function(fa, cut = 0.2, decimals = 2) {

structure.matrix <- psych::fa.sort(fa$loadings %*% fa$Phi)

structure.matrix <- data.frame(ifelse(abs(structure.matrix) < cut,

"", round(structure.matrix, decimals)))

return(structure.matrix)

}

factor.structure(grmsPAF2obl, cut = 0.3) PA1 PA2 PA3 PA4

Obj4 0.57

Obj2 0.56

Obj8 0.55 0.32

Obj1 0.54

Obj5 0.51

Obj3 0.49

Obj7 0.49 0.36

Obj10 0.45

Marg6 0.44 0.42

Obj9 0.41

Obj6 0.41

Marg1 0.34 0.79

Marg2 0.32 0.59 0.4

Marg5 0.38 0.55

Marg3 0.32 0.51

Marg4 0.37 0.49

Marg7 0.41

Str1 0.58

Str3 0.52

Str4 0.4

Str5 0.39

Ang1 0.39 0.32

Str2 0.3 0.35

Ang2 0.65

Ang3 0.31 0.37Here we see some instability. Marg6 had cross-loadings with two scales and “hopped” membership onto Objectification. All three Ang items are showing factor loadings on their own scale. However, Ang1 is still loading on Strong and Ang3 has some cross-loading.

9.5.4 Factor Scores

Factor scores (PA scores) can be created for each case (row) on each factor (column). These can be used to assess the relative standing of one person on the construct/variable to another. We can also use them in regression (in place of means or sums) when groups of predictors correlate so highly that there is multicollinearity.

Computation involves multiplying an individual’s item-level response by the factor loadings we obtained through the PAF process. The results will be one score per factor for each row/case.

# in all of this, don't forget to be specifying the datset that has

# the reverse-coded item replaced

grmsPAF2obl <- psych::fa(dfGRMS, nfactors = 4, fm = "pa", rotate = "oblimin",

scores = TRUE)

head(grmsPAF2obl$scores, 10) #shows us only the first 10 (of N = 2571) PA1 PA2 PA3 PA4

[1,] -0.8113951 -1.2471438 0.12524275 -0.5260185

[2,] 0.2984396 -0.7314827 0.50376631 0.2511270

[3,] 0.3834222 0.4035418 0.18143350 1.0737292

[4,] -0.9982416 -1.1583980 -0.01836819 -1.4226140

[5,] -0.1985534 0.4507445 -0.78705628 0.1813746

[6,] -0.4233586 0.3917061 -0.17730679 0.7556499

[7,] 0.2528621 0.4465398 -0.78836577 -0.4751612

[8,] -1.3823984 -0.5908492 0.36410712 0.5085523

[9,] -0.5534479 -1.2460939 -0.65935346 0.4168911

[10,] 0.1212895 -0.4771782 -0.60054957 0.1698606To bring this full circle, we can see the correlation of the factor scores; the pattern maps onto what we saw previously in the correlations between factors in the oblique rotation.

Call:psych::corr.test(x = dfGRMS[c("PA1", "PA2", "PA3", "PA4")])

Correlation matrix

PA1 PA2 PA3 PA4

PA1 1.00 0.58 0.48 0.28

PA2 0.58 1.00 0.45 0.41

PA3 0.48 0.45 1.00 0.33

PA4 0.28 0.41 0.33 1.00

Sample Size

[1] 259

Probability values (Entries above the diagonal are adjusted for multiple tests.)

PA1 PA2 PA3 PA4

PA1 0 0 0 0

PA2 0 0 0 0

PA3 0 0 0 0

PA4 0 0 0 0

To see confidence intervals of the correlations, print with the short=FALSE optionWe can extract the factor loadings and write them to a table. This can be useful in preparing an APA style table for a manuscript or presentation.

# names(grmsPAF2obl)

pafOBL_table <- round(grmsPAF2obl$loadings, 3)

write.table(pafOBL_table, file = "pafOBL_table.csv", sep = ",", col.names = TRUE,

row.names = FALSE)

pafOBL_table

Loadings:

PA1 PA2 PA3 PA4

Obj1 0.508

Obj2 0.526

Obj3 0.463

Obj4 0.563

Obj5 0.473 0.123

Obj6 0.426 -0.114 0.246

Obj7 0.385 0.141

Obj8 0.535 0.146 -0.220

Obj9 0.391 0.142

Obj10 0.479 0.102

Marg1 0.811

Marg2 0.492 0.223 0.117

Marg3 0.429

Marg4 0.202 0.400 -0.108 0.106

Marg5 0.136 0.451

Marg6 0.310 0.250 0.130

Marg7 0.356

Str1 0.558 -0.116

Str2 0.206 0.266 0.140

Str3 0.526

Str4 0.388

Str5 0.348 0.144

Ang1 0.103 0.334 0.241

Ang2 0.635

Ang3 0.178 0.298

PA1 PA2 PA3 PA4

SS loadings 2.523 1.762 1.190 0.794

Proportion Var 0.101 0.070 0.048 0.032

Cumulative Var 0.101 0.171 0.219 0.251We can also obtain a figure of this PAF with oblique rotation.

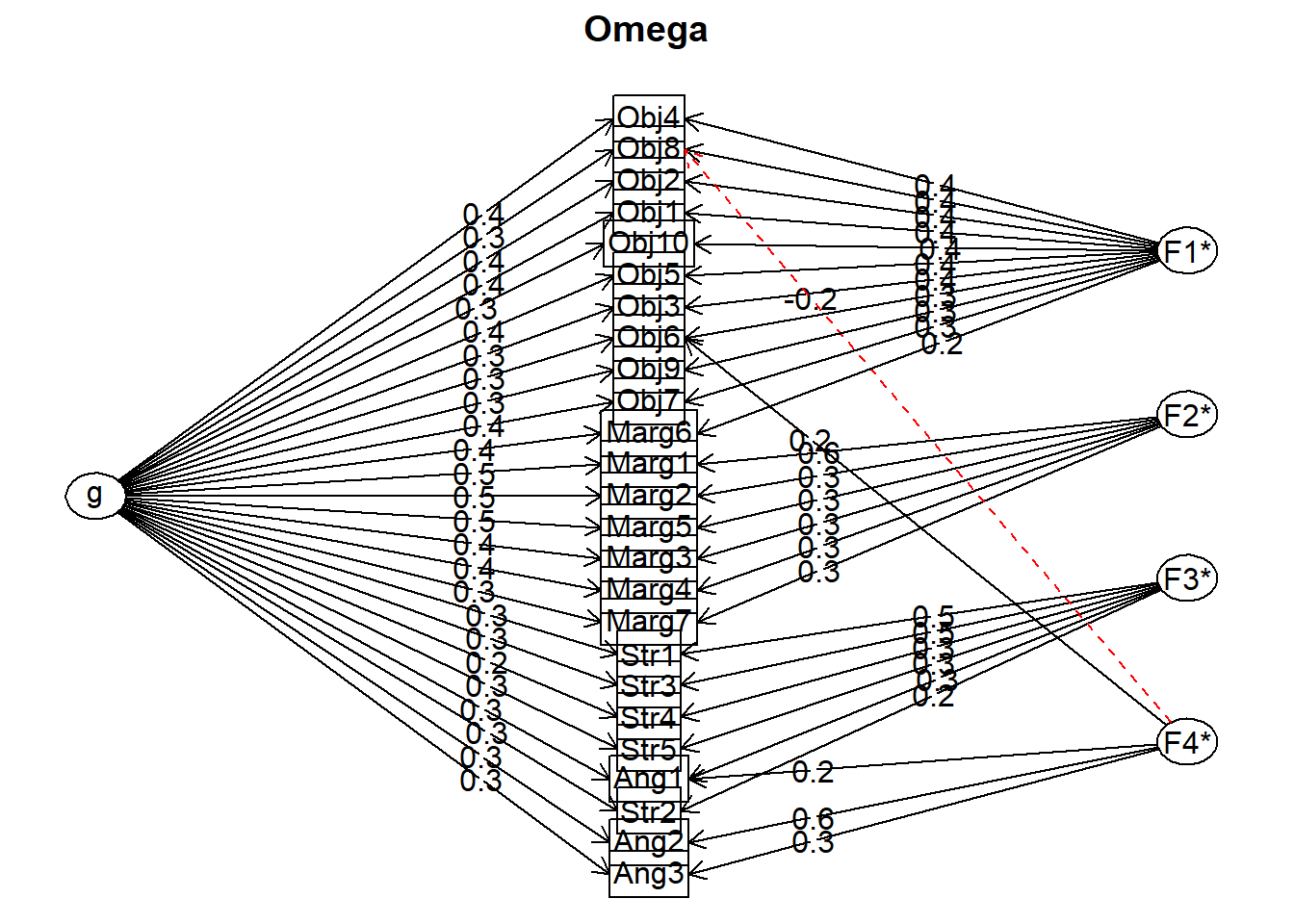

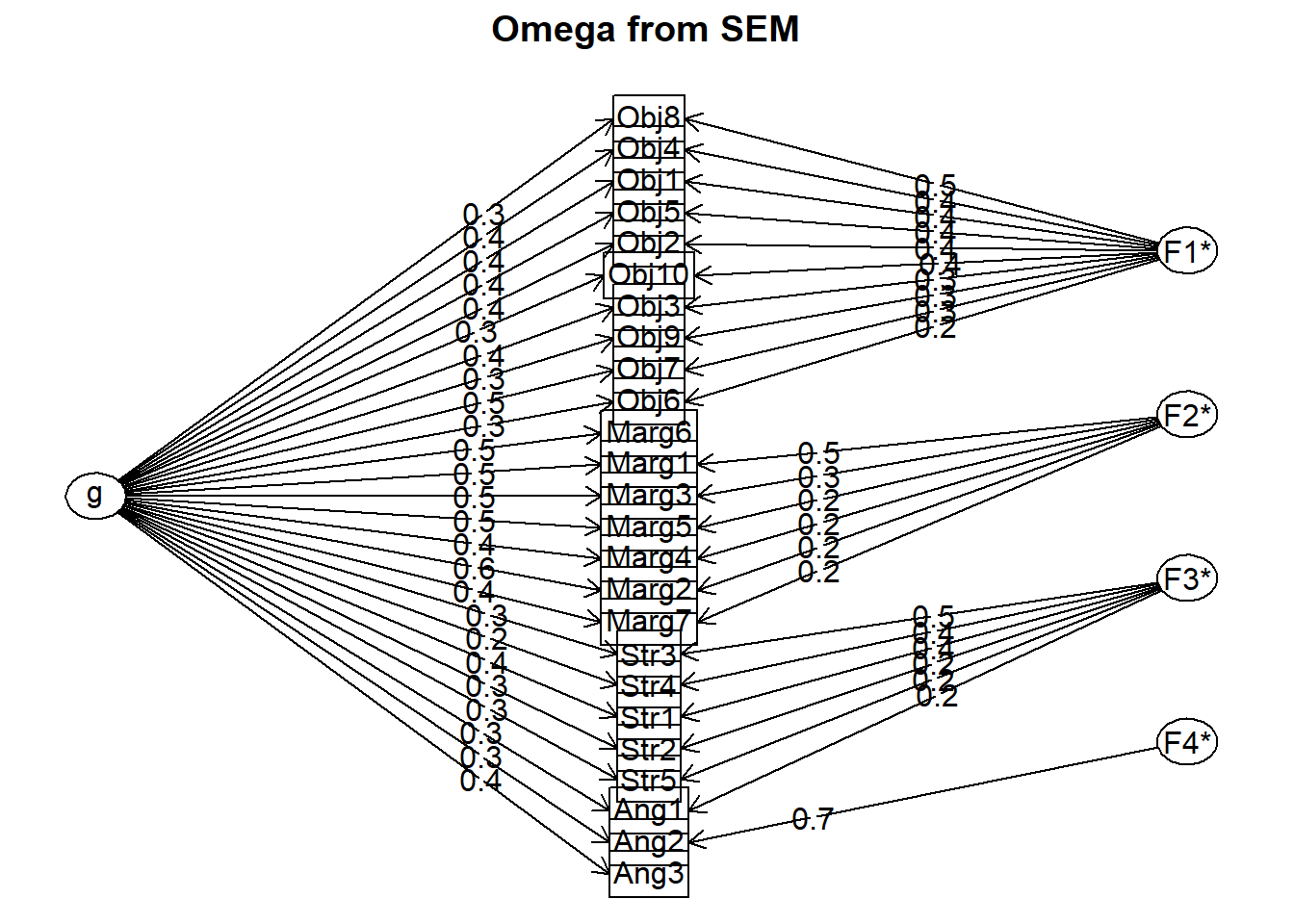

9.6 APA Style Results

Results